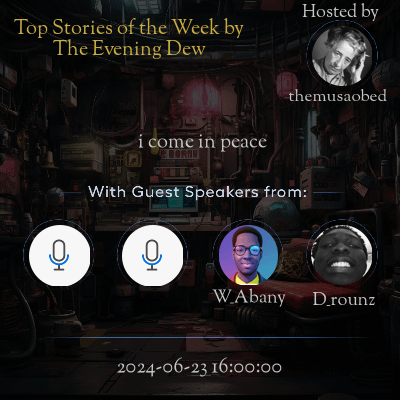

This Space is hosted by themusaobed.

Space Summary

The Twitter Space explored the capabilities and potential of AI, focusing on how it learns, its limitations, and its future applications. While AI excels at data processing and probabilistic prediction, it is fundamentally computational and lacks genuine cognitive understanding. The discussion covered AI's role in education, the gap in information storage between AI systems and the human brain, and the need for human oversight in ethical AI development. Notable points included AI's potential for errors, its practical applications, and the distinction between human cognition and AI computation. The session looked optimistically toward AI's future, discussing possible advances and the need for responsible development.

Questions

Q: Can AI think independently?

A: No. AI currently operates on probability and lacks true cognitive independence.

Q: How does AI assist in education?

A: AI helps students think for themselves by providing contextual answers based on vast data.

Q: Are neural networks infallible?

A: No, they rely on probability, which can lead to errors and unexpected outcomes.

Q: How does AI compare to the human brain in information storage?

A: The speakers argued that the human brain can hold far more information than AI systems. One estimate cited was around one petabyte.

Q: Will AI replace human intelligence?

A: It’s unlikely; AI lacks true understanding and cognitive reasoning.

Q: What is vectorization in AI?

A: It is the process of representing information, such as words or sentences, as numeric vectors. This lets an AI model compare items by similarity and make predictions from probability patterns.

Q: What limitations does current AI have?

A: AI struggles to understand context and to make autonomous decisions when the real world behaves unpredictably.

Q: Can AI learn new things?

A: AI learning is limited to the data it is fed and patterns it recognizes, not genuine innovation.

Q: Why is human oversight important in AI development?

A: To ensure AI’s development aligns with ethical standards and societal needs.

Q: What future developments are expected in AI?

A: AI might eventually simulate more complex human-like thinking but is currently limited to computation.
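The vectorization answer above can be illustrated with a small sketch. It uses a simple bag-of-words vectorizer and cosine similarity. Production models use learned dense embeddings instead, so the vocabulary, sentences, and function names here are hypothetical and only show the general idea of "turn text into vectors, then compare vectors."

```python
import math
from collections import Counter


def vectorize(text: str, vocab: list[str]) -> list[float]:
    """Bag-of-words: count how often each vocabulary word appears in the text."""
    counts = Counter(text.lower().split())
    return [float(counts[word]) for word in vocab]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = no overlap."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # an all-zero vector has no direction to compare
    return dot / (norm_a * norm_b)


# Hypothetical toy vocabulary and sentences, chosen only for illustration
vocab = ["ai", "learns", "patterns", "from", "data", "students", "study", "in", "class"]
documents = [
    "AI learns patterns from data",
    "Students study in class",
]
query = "AI learns from data"

# Vectorize the query and every document, then rank documents by similarity
query_vec = vectorize(query, vocab)
scores = [cosine_similarity(query_vec, vectorize(doc, vocab)) for doc in documents]
best = documents[scores.index(max(scores))]

for doc, score in zip(documents, scores):
    print(f"{score:.3f}  {doc}")
print("closest match:", best)
```

The query shares four words with the first sentence and none with the second, so the first scores higher. Real embeddings also place synonyms near each other, which word counts cannot do.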

Highlights

Time: 00:01:23

AI Learning Mechanisms: Vectorization in AI

Time: 00:05:12

Probability in AI: Neural Networks Discussion

Time: 00:10:45

Human Cognitive Advantages Over AI

Time: 00:14:30

AI's Role in Education: Enhancing Learning

Time: 00:21:08

Potential AI Errors: Risks of Probability Reliance

Time: 00:25:00

Comparison of AI and Human Intelligence

Time: 00:30:25

Future of AI: Advancements and Potentials

Time: 00:35:53

AI's Introductory Applications: Sector Benefits

Time: 00:40:02

Ethical Considerations: Importance in AI Development

Time: 00:45:27

Ending Notes: Final Thoughts and Thank You

Key Takeaways

- AI learning relies on vectorization and probability, lacking true understanding.

- The cognitive gap between AI and human intelligence underlines that AI is fundamentally computational.

- Neural networks rely on probability which can lead to errors in predictions.

- AI has the potential to enhance education by helping students learn and think independently.

- AI doesn't 'think' like humans; it follows patterns learned from its training data.

- There is a vast disparity in information storage capacity between human brains and AI systems.

- AI could revolutionize industries by streamlining a variety of tasks.

- The speakers explored how AI might synthesize new knowledge from existing information.

- AI has real drawbacks, including potential errors and other limitations.

- Human oversight is crucial for guiding AI's development and ensuring ethical standards.

- Future AI is expected to simulate more human-like cognitive processes.
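The takeaway that neural networks "rely on probability, which can lead to errors" can be made concrete with a small sketch. It assumes a model that scores candidate answers and converts those scores into probabilities with softmax. The question, candidate answers, scores, and random seed are all hypothetical. Real language models choose among many thousands of tokens rather than three strings.

```python
import math
import random


def softmax(logits: list[float]) -> list[float]:
    """Convert raw model scores (logits) into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores a model might give to candidate answers for
# "What is the capital of France?" (values invented for illustration)
candidates = ["Paris", "Lyon", "Marseille"]
logits = [3.0, 1.0, 0.5]
probs = softmax(logits)

# Greedy decoding: always pick the single most probable answer
greedy_answer = candidates[probs.index(max(probs))]

# Sampling: pick answers in proportion to their probability, as many
# text generators do; a fixed seed keeps the run reproducible
rng = random.Random(42)
samples = rng.choices(candidates, weights=probs, k=10_000)
wrong_rate = sum(answer != "Paris" for answer in samples) / len(samples)

for answer, p in zip(candidates, probs):
    print(f"{answer:<10} p = {p:.3f}")
print("greedy answer:", greedy_answer)
print(f"share of sampled answers that are wrong: {wrong_rate:.1%}")
```

"Paris" receives about 82% of the probability mass, yet sampling still returns a wrong answer in roughly 18% of draws (1 − 0.82). Greedy decoding avoids that here, but it can only be as reliable as the scores the model assigns.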

Behind the Mic

All of a sudden, Ilya resigned from OpenAI. Bam. Daniel resigned. Bam. That other guy also resigned. Bam. Okay. One month later, they were saying that Ilya is back, and he has brought his own company, called SSI. And that's the most interesting part of this whole thing: his own company, SSI, is now what everybody here is talking about, because it's safe. SSI means Safe Superintelligence. And his whole goal, he doesn't care about shit. He says that his whole mission is basically to make safe superintelligence. That is his whole mission, and they don't care about anything else. They have only posted one tweet, and it has had 8.1 million engagements, basically views. I can't read the whole tweet now because it's long, but they were saying that they believe we can achieve AGI within the next decade. His goal, his mission, his products, his roadmap, every single thing is to make sure that they produce safe superintelligence. They want to produce, you know, the kind of Ultron that's not bad. Aka Vision. Still referencing Marvel, Age of Ultron. There is no Ultron that is not bad. Vision is good now. Vision. Well, Vision was good. Now, is that why you wanted to get into superheroes? That's why it was an exception. But what if they want to do exactly that? Let's focus, please. Like, please. I thought it was really about Ilya. Nah, nah. He's on point so far, so I have nothing to say. Okay, thank you. Thank you. The Infinity Stone. Let's not get into Marvel right now, I'm sorry. But yeah, that's basically the whole news on this thing. So I do have thoughts on that. I'm actually reading, like, I sent you the paper, a longer essay that somebody wrote about it. Yeah. There's this essay by Leopold, that was his name.
Let me look for his name: Leopold Aschenbrenner wrote it. Somebody sent it to me as soon as I posted it. And Leopold actually argues the opposite of what he said: Leopold's argument is more on the side of Ilya, that you cannot achieve AGI if, all of a sudden, the most creative people in this space, people like Ilya, as he said in that post, and the people he has around him... He gave a case study of Ilya and Mira Bell and how both of them are introducing artificial creativity. What really got me in this essay is, how do I say, the last sentence by Leopold. He was saying that Ilya is not after the baby; Ilya is after the algorithm that makes a human being. Okay, no, no, let me do this properly. I'm sorry, I didn't say this in the beginning: we're talking about a 41-year-old. Understand. Ilya is not after the baby, Ilya is after the algorithm that makes a human being, and to me, that is what makes sentience in that model. Let's go into this discussion even deeper another time, because we're already out of time here. So here's what I'll say. We'll probably discuss this more on Friday, and if you have time, you can come on with us this next Friday. Okay, fine. We'll book you in an aesthetic call. So for now, to end this discussion, here is a quote from Naval: human beings always underestimate the power of a technology in the short run. No, sorry: we underestimate the power of a technology in the long run and overestimate its power in the short run. That's basically what's happening here with GPT-4 and how we are looking at it. On the two sides of this argument, Bash and Musa, you can see that Bash is saying Musa is overestimating it, and Musa is saying, no, Bash is underestimating it. But yeah, we'll end this discussion here.
Now, if you want to listen to more of this, you have to listen to last Friday's episode, which is basically more raw. You know, very raw. But yeah, I think that's it for this evening. And we also need to know which side drone David is on, because he's not allowed to sit on the fence. Yes, yes. I'm not allowed to sit on the fence. I understand. Oh, my God. I'm on the AI side, sir, but I understand where Bash is coming from. I'm looking at something now: a human brain can hold more than 1 quadrillion bytes of information, which is equivalent to one petabyte. One petabyte is 1,000 terabytes, basically the size of a whole data center. There is no processor in sight that can... So for now, bye. You have to come on Friday. So guys, check it out. It's a very explicit podcast. Please don't... It's the kind of podcast you don't bring your kids to. Thank you. All right, thank you, everybody. Thanks, man. Thank you.
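The storage figure in the closing remarks can be checked with a worked unit conversion. The conversion uses decimal SI prefixes (1 TB = 10^12 bytes, 1 PB = 10^15 bytes). The 1-quadrillion-byte estimate for the human brain is the speaker's figure, not a measured value.

```latex
\begin{aligned}
1\ \text{quadrillion bytes} &= 10^{15}\ \text{B} = 1\ \text{PB} \\
1\ \text{PB} &= \frac{10^{15}\ \text{B}}{10^{12}\ \text{B/TB}} = 10^{3}\ \text{TB} = 1{,}000\ \text{TB}
\end{aligned}
```

With binary prefixes the ratio is almost the same: 1 PiB = 1,024 TiB.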