Space Summary

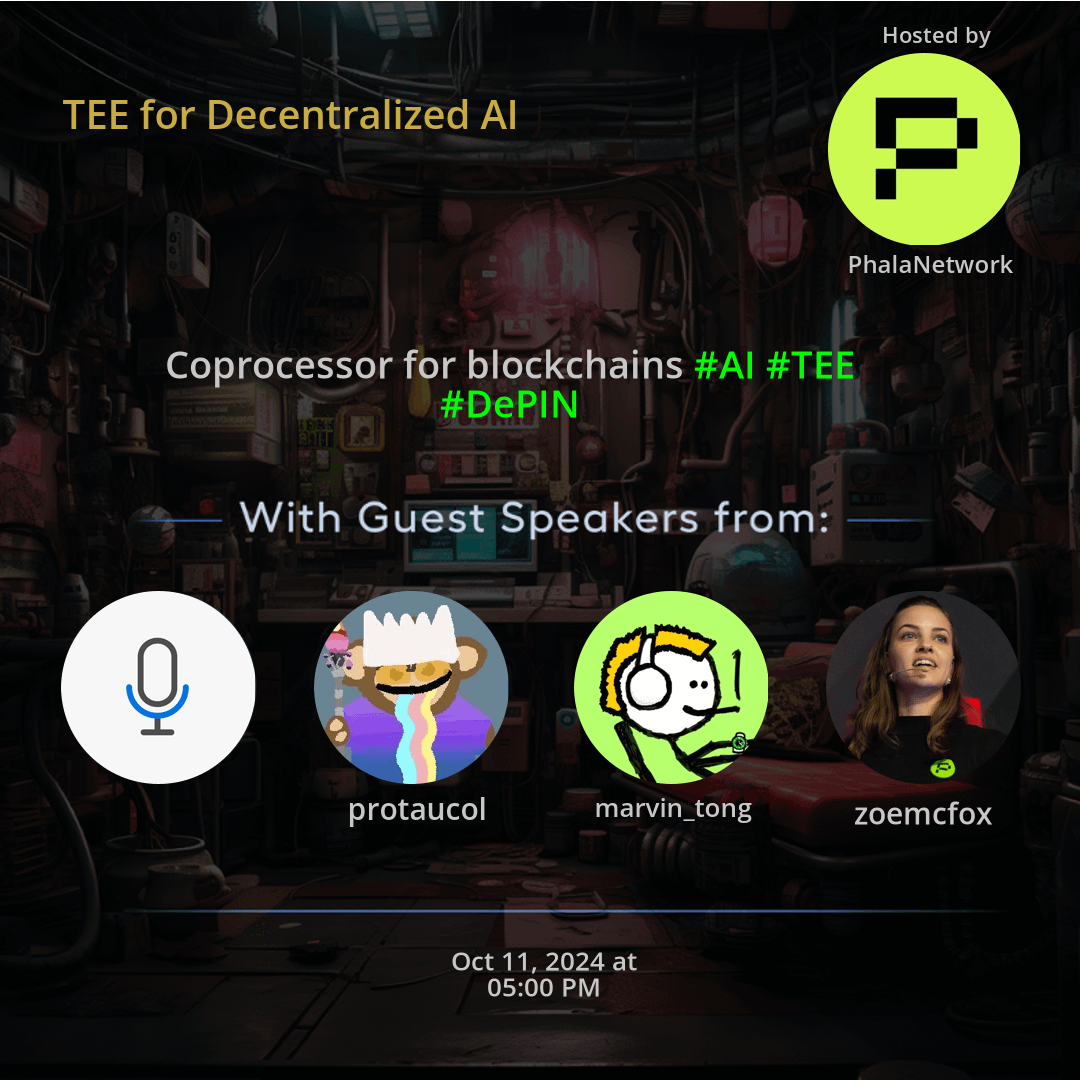

This Twitter Space, TEE for Decentralized AI, was hosted by PhalaNetwork. It covers decentralized AI built on Trusted Execution Environments (TEE) and Decentralized Physical Infrastructure Networks (DePIN). The speakers from Phala Network and io.net discuss their strategic partnership, why GPU TEEs on hardware such as the Nvidia H100 and H200 only became practical in 2024, how TEEs compare with alternatives such as fully homomorphic encryption, and what confidential computing adds to security, privacy, and scalability in decentralized AI.

Questions

Q: How does TEE technology contribute to the security of AI in decentralized systems?

A: TEE enhances security by creating trusted execution environments for AI computations, safeguarding sensitive data.

Q: What role do DePINs play in secure AI computations?

A: Decentralized Physical Infrastructure Networks (DePIN) supply the distributed GPU compute that AI workloads run on; combining them with TEEs keeps those workloads private and confidential.

Q: Why is the synergy between AI and TEE crucial for blockchain applications?

A: AI and TEE combined build trust in blockchain applications by offering secure and private data processing, enhancing user confidence.

Q: What benefits does TEE bring to decentralized AI scalability?

A: TEE technology improves scalability and efficiency of AI processes in decentralized systems, enabling smoother operations.

Q: How can TEE adoption in AI drive innovation in decentralized environments?

A: By addressing security concerns, TEE adoption fuels innovation in AI applications, encouraging new developments in decentralized systems.

Highlights

Time: 00:15:42

Enhancing Security with TEE Technology: Exploring how Trusted Execution Environments fortify AI security in decentralized settings.

Time: 00:25:19

DePINs for Secure AI Computations: Understanding the significance of Decentralized Physical Infrastructure Networks in running and protecting AI processes.

Time: 00:35:55

Synergizing AI and TEE for Trust in Blockchain: Discussing how the combination of AI and TEE builds trust in blockchain applications through secure data processing.

Time: 00:45:30

Scalability Benefits of TEE in Decentralized AI: Examining how TEE technology enhances scalability and efficiency in decentralized AI operations.

Time: 00:55:10

Driving Innovation through TEE Adoption in AI: How TEE adoption promotes innovation by addressing security issues and fostering new developments in decentralized AI.

Time: 01:05:47

Interoperability Challenges between TEE and AI: Exploring the hurdles and solutions for seamless integration of TEE technology with AI systems.

Key Takeaways

- TEE technology enhances the security and privacy of AI in decentralized systems.

- Decentralized Physical Infrastructure Networks (DePIN) play a crucial role in secure AI computations.

- AI leveraging Trusted Execution Environments (TEE) enables confidential data processing.

- The synergy of AI and TEE fosters trust in blockchain-based AI applications.

- Scalability and efficiency improvements are notable benefits of TEE for Decentralized AI.

- Understanding the implications of TEE on distributed machine learning models is essential.

- TEE adoption in AI enhances data integrity and confidentiality.

- Interoperability challenges between TEE and AI systems require attention for seamless integration.

- TEE solutions drive innovation in AI by addressing security concerns in decentralized environments.

- Exploring the intersection of AI, TEE, and blockchain leads to novel applications and advancements.

Behind the Mic

Initial Checks and Greetings

Cool. Test, test. Checking if everything works here.

Yeah, I can hear you.

And Tausif, if you're here as well. Perfect. We usually have some background music on. Not sure if you can enable it while people join. We'll give it two more minutes before we get started. Thank you, everyone, for joining so far. Okay, no music available today. That's fine too. But happy Friday, everyone. Marvin, Tausif, how are you guys doing? All good?

Good, how are you?

Good. Yes, it's a Friday evening here in Berlin. You guys are joining from different time zones, so maybe not evening for you, but I'm very excited to host a space here today. Cool. And hello to everyone joining. I think we can kick it off and start this very exciting space. I'm Zoe, VPN, partner at Phala Network and host and moderator of this session.

Session Overview

Today we are going to talk about TEEs for decentralized AI. Besides this being an amazing topic, we are also celebrating the end of our 60 Days of TEE campaign. It's a campaign that Phala initiated 60 days ago with several partners, in which we have hosted amazing workshops and X Spaces and released great content about trusted execution environments: the basics of trusted execution environments and how TEEs can enable different use cases. Today we are closing this campaign with a major partnership announcement with io.net about TEEs for decentralized AI. So this is the general topic, and we are super excited to have Tausif here, who is VP of Business Development at io.net. As I mentioned, Phala has just recently announced a strategic partnership with io.net, and this is what we are going to discuss and highlight today while we dive deep into what TEEs can do for decentralized AI. So Tausif, if you want to take the stage first, introduce yourself a little bit.

Introduction of Tausif

I know io.net maybe does not need a detailed introduction, but please, for everyone who's new here, the stage is yours for an introduction.

Yeah, absolutely, happy to do that. First of all, thanks for having me on. It's a pleasure to be here. For those who don't know me, my name is Tausif Ahmed. I'm the VP of Business Development here at io.net. For those unfamiliar with io.net, we are a decentralized GPU compute platform built on Solana. We essentially take latent compute capacity across a variety of distributed sources globally, pool that into our network, layer ML frameworks and libraries on top to make it easier for AI/ML developers to build, and offer that compute to a variety of AI/ML startups, enterprises, and research labs, all the way to independent developers.

Tausif's Role and Vision

Just to add a little bit more to it: my focus as VP of Business Development is primarily on the supplier side, managing relationships with all of our independent data centers, crypto projects, crypto miners, and the community. I'm also focused on sales and demand, the revenue side, working with a variety of AI/ML startups and research labs, plus all things related to partnerships, which has brought me to work more closely with Phala Network.

Amazing. Thank you so much. And I must say you have a very interesting and powerful bio, right? You worked with Kraken before, and also did a lot of Web2 sales and partnerships for AWS, Amazon Music, and Deloitte. So definitely a strong bio, and great to have you in the Web3 space working closely with Phala. And of course, an X Space hosted by Phala would not be the same without our co-founder Marvin.

Discussion on Partnership and Strategy

So welcome, Marvin. Good morning to the West Coast. If you want to say good morning, some words of welcome.

Yeah, morning, morning. Hi, everyone. I just want to say hi to everyone here, and thank you for joining our space, guys. I think people don't join Twitter Spaces much on a Friday night, so we have a small audience here. But thank you to everyone who joined this AMA. Appreciate it.

Yeah, definitely, we see a lot of community members. This space is also recorded, and we always like to recap these discussions afterwards. We will definitely discuss good content here today. And we already said this: I think the conversation today is interesting because io.net actually states on its website that it is the biggest compute network. Phala has a similar slogan, but we say that we are the biggest compute cloud based on the number of TEE nodes.

Collaboration Overview

And this is actually the intersection, because we are partnering with you guys at io.net specifically to bring trusted execution environments to the overall GPU or cloud sphere for the AI use case. So I think it's very interesting to have these two companies, with a similar approach but with specific expertise on the hardware side and the security side, joining forces for the overall use case. And Tausif, you already gave the general introduction, but could you recap a little bit? io.net has been around for quite a while, right? When did the strategic approach towards AI appear, or was it always there? Why do you position yourselves towards the AI space as of now?

Yeah, yeah. Absolutely happy to speak about that. I think that's a really great question.

Understanding AI Strategy

So the way we approach it, there are a few different layers of the AI tech stack that you can think about. There's the foundational layer at the bottom, the actual compute layer: the raw compute resources that are needed to make the whole AI sphere function. On top of that, you have the data layer, where you feed data into a variety of models to train them. Above that you have the actual model layer, and then apps built on top of the models. So the overall AI tech stack, from infrastructure to application layer, is fairly complex, with a lot of different players and a variety of areas that value can flow to and accrue to. So at io.net, we took a holistic view of this whole AI tech stack and decided to start our journey primarily on that foundational layer, focusing on compute.

Challenges and Opportunity in AI

The reasoning behind why we started there was primarily, as you can remember, the GPU and compute shortage story that has been affecting AI/ML developers and startups everywhere over the past year. A lot of centralized incumbents were taking up all the GPU compute resources from Nvidia. You hear about OpenAI and Grok and Meta buying up hundreds of thousands of H100s and forming these mega clusters to train their massive AI models. It became really difficult for pretty much everyone else in the AI space to get access to compute. We identified an opportunity there to pool together a lot of latent compute capacity that is spread out globally and isn't coordinated in a meaningful way, and make it accessible to developers everywhere.

Future Directions

So this seemed to be a perfect area where the mechanics of blockchain could be overlaid on what is essentially a distribution and coordination problem in compute, coordinating that capacity and offering it at a lower cost structure to developers everywhere. That was the main thesis behind how io.net formed and why we really doubled down on this decentralized compute story and tried to build the largest DePIN compute network out there. As we grow from here and look to the future, we obviously have a significant number of GPUs on the platform, but now we're moving more towards solving real-world use cases and driving utilization of those GPUs as well. As we move forward, we'll start to look at those different layers of the AI tech stack and move into more partnerships or products where we can present a more holistic AI/ML model development solution to the market.

Collaborative Strategy with io.net

I can't speak too much about that today, but just to give you a preview of how we're thinking about our strategy going forward: you'll start to see us move more and more from the foundational layer into different parts of the AI/ML tech stack.

Perfect. We'll talk about the specific hardware in a little bit. But this is really why Phala partners with io.net: to solve this problem, right? You guys have access to this hardware. As everyone knows, Phala's TEE cloud was originally based on CPUs, and the CPU hardware is provided by the community. So Phala, or HashForest, the company, does not necessarily provide this hardware.

Importance of Collaboration

And that's why, now that we have enabled TEE on GPU, we needed to partner with companies or teams that have access to this hardware. That's why this partnership is very important for us: to evolve towards AI, but also to find the right partners, like io.net, that have this specific hardware. Marvin, could you summarize on the strategy side why this strategic partnership with io.net is crucial to us? Why is it so important for Phala's AI strategy?

Sure. So Phala is aiming to build a secure off-chain environment to execute computation programs in a secure way. This is our mission, and we aim to do that to scale the capability of Web3 and blockchains, especially Ethereum, Solana, and Polkadot.

Strategic Goals

All of our strategies are built around this mission. A couple of years ago, when we established our peer-to-peer computation network, the secure execution environment, which most people know by the term TEE, trusted execution environment, was a core part of our infrastructure. Our strategy is that we scale the hardware based on what we want to deliver at the software level. For example, in our software we want to engage programs that enable security, privacy, and trustlessness. And we want to open up the capability for multiple types of virtual machines and program types: not just one kind of program, but also JavaScript or other types of programs.

Evolution of Technology and Strategy

So we are building software and block space rather than hardware, and we accept hardware step by step based on its security level. This is why, in the first two or three years of Phala Network, you mainly saw us accept Intel SGX CPUs as the hardware. But since last year, things have begun to change. First of all, AI as a use case has increased rapidly, and everybody is using it now. That means the demand is there, and GPUs are fitting into a demand-driven market. And this year, the latest hardware chips such as the H100 and H200, which are very AI-focused GPUs, have begun to open up confidential computing functions.

Advent of New Hardware Capabilities

That means that before this year, before 2024, there was no GPU TEE. This year they opened up the TEE.

Market and Hardware Readiness

So both the market and the hardware providers are ready to move into TEE block space. We began to integrate GPU TEE into our network, and we are trying to offer a good service for the Web3 AI use case. I think this answers the timing question of why now and why not 2022, and why we began to accept GPU TEE. As you know, io.net is one of the biggest decentralized compute marketplaces in the world. They also have CPUs, but the most impressive part is that you can always find GPUs available on io.net. This is why we collaborated with the io.net team and foundation to offer secure GPU services to Web3. Besides that, our collaboration has multiple layers: hardware integration and finding the best AI use cases in Web3.

AI Use Cases and Collaboration

And just to share here, we are also co-researching some work to ship a pioneering benchmark of the latest GPUs' performance for AI inference, and maybe training in the future. So I guess this is why we collaborate with io.net and how they can help us.

Great, thank you for summarizing. What's really important for the audience to understand is that the hardware on the GPU side is only now ready to enable TEE. So this opens up a great market and the use case that we are discussing today. And Tausif, back to you. It's not only about the timing, right, that the GPU hardware you have access to, that you guys own, is now able to enable these security mechanisms. Besides this, why is io.net interested in working with Phala on TEE security on the GPU side?

Strategic Partnership and Customer Insights

Yeah, absolutely. I think there are some really key points that Marvin just mentioned there. First, the ability of the hardware to move into TEE is a very recent development, and it's perfectly timed for thinking about distributed compute and distributed AI training. Phala Network being one of the leaders in the TEE space, it made a lot of sense for us to collaborate and build a strategic partnership here, and to explore how both companies can work together to really progress the mission of security, privacy, and confidential compute in the Web3 and AI space specifically. So that's part of where this partnership started from.

Understanding Customer Needs

To take a step back and give a little more insight, let me take a customer's perspective on this. At io.net, we're out there talking to a lot of different types of customers, such as startups, enterprises, and research labs, and really understanding what their use cases are for AI model training, what their specific requirements are, and what they're looking to optimize for. A lot of what we've heard from the market and from specific customers is that, when it comes to distributed compute, there's a whole other set of risk factors that need to be managed, especially when you're working with larger enterprises.

Privacy and Security in Decentralized Computing

This idea of privacy and security when using a decentralized network of GPUs is top of mind for a lot of buyers in the space. So it really comes down to solving a customer use case and making customers more comfortable with the idea of decentralized compute, because they know that the privacy and security of their AI/ML workloads, and of any confidential or proprietary customer or company data, is being secured using these technologies. That's where I think this partnership really works, and it's where io.net's interest in TEE really comes from: being able to offer secure, verifiable computing environments for AI workloads, with really high trust levels and strong security guarantees.

Exploring Additional Security Mechanisms

Okay, thank you for sharing the customer need as well. It's always super important. It just brought up a question from my side. Of course, TEE can be enabled on the GPU hardware that io.net provides for the AI use case. But is io.net also looking, maybe with other partners, into different security mechanisms besides TEE to offer to end customers?

I think right now TEE is the major one we look at. FHE is an alternative mechanism. FHE, for those who are unfamiliar, is fully homomorphic encryption. It's a different way to approach confidential compute.

Performance Metrics and Technology Readiness

I'm more on the business side, but at least based on my discussions with product and engineering, we're leaning more towards TEE. It's not only a matter of things being confidential and secure; it's also about how providing that confidentiality and security affects the other performance metrics that we have to look at. When it came to FHE, the performance metrics for inference and the latency of the workloads weren't quite there yet in terms of what we want to accomplish and offer the customer. It's really exciting technology, but I think it might take a little longer for it to get to a point where it makes sense for production workloads in terms of performance, whereas with TEE the performance is much better.

Trust in Established Technologies

Yeah, totally agree. FHE is an interesting technology, but maybe not as ready to go as TEE. The interesting thing is that we discussed the basics of TEEs in one of our earlier spaces, where we also looked into Web2 use cases. TEEs are actually a traditional technology used in a lot of security and military use cases in Web2. So especially if io.net looks into enterprise use cases, TEE is a good technology for convincing enterprise customers, because it's a trusted and long-standing technology that really works and is more scalable than other security mechanisms, which will come and will be valid as well, but maybe not as of now. So yeah, it's interesting to discuss this as well.

Summarizing the Role of TEE in Decentralized AI

Marvin, over to you again with a general question. I know you have discussed this a lot, but since the topic today is TEE for decentralized AI, could you summarize again: why TEE for AI? We touched on it already, but what value do TEEs actually add to decentralized AI from your perspective?

Yeah, I would love to comment on that. First of all, I totally agree with what Tausif just mentioned. Whether it's Intel or Nvidia, they did not design and create TEE hardware for Web3.

Demand for TEE in Web Two

That's something everybody should know: they created it for Web2. It means that even in the broader market, in the Web2 AI space, there's a strong demand for using TEE. I think it's been pushed top-down rather than bottom-up. For example, from the legacy machine learning companies to today's companies and models like OpenAI, Anthropic, and Llama, there may be multiple participants in a training session. To co-create a model, you need a massive amount of data. Some providers, like Reddit, provide that data in a confidential way. If I'm Reddit and I sign a contract with OpenAI, I don't want my most valuable data to be reviewed or simply copied and pasted by OpenAI, because next year I need to sign another contract with them again.

Concerns on Data Security

So the data providers are worried about the model creators storing their data in some way, and some of it may even be sensitive data. The demand for security protection of these data sets is therefore super important. For the model creators, a typical case is that there are some key parameters or factors in each model, temperatures for example, that are core commercial secrets. You don't want these key factors to be stolen, because if your competitor gets them, you are in real trouble. So each party who joins these co-training and inference sessions always has concerns about the other parties getting their secrets. But in the meantime they have to work together so that we can have the next generation of models.

The Need for Confidentiality

That is just for the training session. Of course, confidentiality is also super important for consumer and enterprise-level inference. I think this is the main reason why Nvidia introduced confidential compute for their next-generation GPUs this year. The H100, H200, and B200 will all implement TEE as the default setup. Of course, for now, if you want to turn it on, it takes a lot of time to set up the environment, but at the hardware level it is there by default. So that's the background on why Web2 AI needs TEE. Now let's talk about Web3.

Blockchain and TEE Challenges

As you all know, blockchains have a good reputation for trustlessness, permissionlessness, and permanence. But they don't have a good reputation for TPS, performance, latency, or fast finality. Most of the time these features conflict with each other, so you can't have them all. You can't have a high-performance and extremely decentralized network at the same time. There are a lot of trade-offs, and you have to make your choice. That means that if you want to bring AI models into the Web3 world, it's very hard to find a compromise. But in the meantime there are a lot of ambitious teams working on it.

Efforts for Decentralized AI

For example, Ritual, 0G (Zero Gravity), Allora, Sentient, and ORA. There are so many A-class teams spending a lot of money and talent to work out solutions for decentralized AI. I would say most of these ambitions relate to co-owned AI models, verifiable inference, and an economy based on data privacy. You can imagine that it's super hard to establish an infrastructure in just one or even two years to achieve these missions. I would say using TEE is not a shortcut but a must-have on the way to these technical milestones, because, first, you can barely run these models on any existing blockchain infrastructure.

Off-Chain AI Implementation

There's nowhere to go, so you have to implement AI models off-chain. And that's not only true for AI models; even AI agent SDKs are very hard to run in an on-chain runtime. So you have to offload the computing somewhere off-chain in a secure way. That creates the demand for GPU TEE in Web3 AI. If you want to have a co-owned AI economy, your infrastructure needs to include both an on-chain design and an off-chain part. Mainly, I would say, the on-chain part deals with smart contracts, asset creation,

Integrating On-Chain and Off-Chain

and the consensus mechanism, while the off-chain infrastructure deals with the training and inference workflows. The TEE part connects the on-chain and off-chain infrastructure into one package, as one piece. This is what we do. The advantage of Phala is that we already have quite a mature solution to bring them together, and we are working very hard to deliver and integrate pioneering solutions for TEEs, so we are ready to go. If you are using Phala for your infrastructure, you can have it right away.
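Editor's illustration: the idea of a TEE linking off-chain computation to on-chain logic can be sketched as follows. This is a minimal, hypothetical sketch and not Phala's or Nvidia's actual protocol. The function names `run_in_enclave` and `verify_on_chain` are invented for illustration, and an HMAC with a shared demo key stands in for the asymmetric, hardware-rooted signature that a real attestation report would carry.

```python
import hashlib
import hmac
import json

# Assumption: a shared demo key stands in for a hardware-protected attestation key.
# Real TEEs sign reports with asymmetric keys rooted in the chip vendor.
ATTESTATION_KEY = b"demo-only-key"


def run_in_enclave(program: str, user_input: str) -> dict:
    """Simulate off-chain execution and return the output with a signed report."""
    output = user_input.upper()  # placeholder for model inference
    report = {
        "code_hash": hashlib.sha256(program.encode()).hexdigest(),
        "output_hash": hashlib.sha256(output.encode()).hexdigest(),
    }
    payload = json.dumps(report, sort_keys=True).encode()
    signature = hmac.new(ATTESTATION_KEY, payload, hashlib.sha256).hexdigest()
    return {"output": output, "report": report, "signature": signature}


def verify_on_chain(result: dict, expected_code_hash: str) -> bool:
    """Accept a result only if the report is authentic and matches the expected code."""
    payload = json.dumps(result["report"], sort_keys=True).encode()
    expected_sig = hmac.new(ATTESTATION_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected_sig, result["signature"]):
        return False  # report was not produced by the trusted environment
    if result["report"]["code_hash"] != expected_code_hash:
        return False  # a different program was executed
    actual_output_hash = hashlib.sha256(result["output"].encode()).hexdigest()
    return result["report"]["output_hash"] == actual_output_hash
```

In this sketch the on-chain side never re-runs the computation; it only checks that the reported code hash and output hash are covered by a valid signature, which is the general shape of the "connect on-chain and off-chain" role described above.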

Trust in Technology

You don't need to wait for years. We are working with many amazing partners on this. Secondly, if you don't trust a single TEE, for example a single piece of hardware such as an Nvidia H100, we are here to help, because the nature of Phala is a software-level root of trust. We use MPC to manage the root keys of these TEE hardware units, so that we can add even more security protection than the single provider of the TEE, whether that is Nvidia or anyone else. This is our best part. If you don't trust the TEE but you have to use it, come and use Phala.
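Editor's illustration: the speaker does not describe the MPC scheme used, so the sketch below swaps in a much simpler technique, n-of-n XOR secret sharing, purely to show the underlying intuition that no single node (and so no single piece of TEE hardware) ever holds the whole root key. The function names are hypothetical, and real MPC key management is considerably more involved.

```python
import secrets
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def split_key(root_key: bytes, n_nodes: int) -> list[bytes]:
    """Split root_key into n_nodes shares; all shares are needed to recover it."""
    if n_nodes < 2:
        raise ValueError("need at least two nodes to share a key")
    # n - 1 shares are uniformly random; the last one is chosen so that
    # XOR-ing every share together yields the original key.
    shares = [secrets.token_bytes(len(root_key)) for _ in range(n_nodes - 1)]
    last = reduce(xor_bytes, shares, root_key)
    return shares + [last]


def recombine(shares: list[bytes]) -> bytes:
    """XOR all shares together to reconstruct the key."""
    return reduce(xor_bytes, shares)
```

Any subset smaller than the full set of shares is statistically independent of the key, which is why compromising one node reveals nothing in this toy scheme.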

Towards Decentralized AI

So I would say this is why we are in this kind of PMF stage for decentralized AI. And just to let the audience know: we are not just for Web3 AI. Web2 AI needs this too. So that's what we are doing now.

Yeah, perfect. Thank you for this high-level overview and summary of all of this. It's very valuable for understanding the difference.

Introduction to io.net and AI Models

Also, Tausif, is there anything to add from your side, or on how io.net plays into what Marvin just described?

No, I think Marvin's response there was really comprehensive, and he covered a lot of the things I would have wanted to add. The only point that I think is really key, at least from the io.net side, and that I want to reiterate, is the application in the Web3 space, especially when it comes to this idea of co-owned models over a distributed network. Whenever you try to create a model in a decentralized manner, there are a lot of disparate stakeholders that have to bring a variety of pieces of the puzzle to the table to make it work.

Confidentiality and Stakeholders in Decentralized Models

And there's probably a variety of requirements around confidentiality and how much of their hand they're willing to show to other participants, for lack of a better term. That particular model of building co-owned AI/ML models over a distributed network is something that's uniquely Web3. And, speaking of PMF, I think that's something we'll start to see a lot more of in terms of applications of TEE in the future.

Yeah, nicely put. It's also worth remembering that this needs to start at the lowest level, right? From the compute level. We touched on the tech stack of decentralized AI, or the AI tech stack overall.

Importance of the Compute Layer

The compute layer is the lowest level, where we need to ensure verifiability on the specific hardware in order to then provide these services to the models, agents, and so on. So I think it's always important to understand the importance of the tech stack level that we are working on. Let's dive a bit deeper into the specific hardware. We touched on this already: GPU TEE is much more important for AI than the CPU TEE that Phala has provided from the very beginning, and now we are moving more towards the GPU side. Tausif, can you touch on this a little? I know you're on the BD side, but this is also what you're selling, so maybe explain a bit about the specific hardware.

The Uniqueness of Nvidia H100 and H200

We talked about the Nvidia H100 and H200. Why is this hardware so unique for enabling AI, or even decentralized AI once TEE is added?

Yeah, absolutely. For those who aren't familiar with the GPU world, Nvidia H100s are currently the top-of-the-line GPUs out there for AI/ML workloads, and H200s are the next iteration. These are extremely new, still really hard to get, and really rare. They're still being rolled out. We've got access to some, and we were able to partner with Phala to provide access and do some of the benchmarking, analysis, and research work around that.

Capabilities of Nvidia GPUs

To go a little deeper into your question: the reason you hear a lot about Nvidia H100s, especially now with the AI/ML boom, is that these GPUs are specifically designed for really complex, compute-heavy AI workloads. Their architectures are built for unprecedented computational power for training and inference tasks on deep learning models. Without going too deep into the tech side, there's a variety of things they do well, such as the number of floating point operations per second, parallel computation, and support for transformer models.

Efficiency and Scalability of AI Workloads

But essentially, these are custom built to make AI/ML workloads run at the highest performance point possible. So when this comes into the conversation of decentralized AI and decentralized compute, I think these machines are really interesting. In a centralized fashion, H100 SXM GPUs with InfiniBand are highly preferred, where you literally have a bunch of H100s in the same data center, physically plugged together to really reduce latency. But that's in a centralized context.

Decentralized GPGPU Solutions

But even in a decentralized manner, when we're talking about coordinating a bunch of these GPUs from a distributed network into a single cluster on io.net or something else, these GPUs end up being really important and fit exactly what we need because of their high throughput and scalability, with better cost and energy consumption than comparable GPUs at a similar price point. Theoretically you could use a bunch of Nvidia 4090s, but the corresponding power consumption would be significantly higher. And the H100s' ability to handle massive data sets and complex computations makes them really essential in a decentralized infrastructure like ours.

Parallel Processing and Decentralized AI

And their parallel processing ability fits really well with decentralized AI. I know we talk about decentralized AI a lot, but when you cut it down to what it tangibly means and how it works, it is essentially about splitting large workloads into sub-workloads that can then be spread and computed across a decentralized network. The architecture of the H100s is very much in line with how we think about processing and running large AI/ML workloads across a decentralized network.

Okay, thank you. This was a lot of information about the hardware side, but very valuable.
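The idea of splitting a large workload into sub-workloads and spreading them across a network can be illustrated with a minimal Python sketch. This is only an illustration under stated assumptions: the names `split_workload` and `run_distributed` are hypothetical, and local threads stand in for remote GPU nodes. It does not reflect how io.net actually schedules jobs.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence


def split_workload(items: Sequence[int], num_workers: int) -> List[List[int]]:
    """Split items into num_workers contiguous shards of nearly equal size."""
    if num_workers < 1:
        raise ValueError("num_workers must be at least 1")
    base, extra = divmod(len(items), num_workers)
    shards: List[List[int]] = []
    start = 0
    for i in range(num_workers):
        # The first `extra` shards take one additional item each.
        size = base + (1 if i < extra else 0)
        shards.append(list(items[start:start + size]))
        start += size
    return shards


def run_distributed(
    items: Sequence[int], num_workers: int, work_fn: Callable[[int], int]
) -> List[int]:
    """Process each shard on its own worker and merge results in input order."""
    shards = split_workload(items, num_workers)

    def process(shard: List[int]) -> List[int]:
        return [work_fn(x) for x in shard]

    # Threads are a stand-in for remote nodes (an assumption of this sketch).
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partial_results = list(pool.map(process, shards))
    return [y for part in partial_results for y in part]
```

A real decentralized network would also have to handle node failures, data transfer cost and result verification, all of which this sketch leaves out.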

Importance of Hardware in Verification

And to add to this: the specific hardware that you explained is important for AI is now also able to support TEE verification, right? This is why we work together. Just having this hardware without enabling TEE on top of it doesn't make it verifiable. Marvin, would you like to add to this?

Yes. I think the major reason for the H100 and H200 depends on who is using them, on whether the target is consumer or enterprise.

The Role of H100 and H200 in AI Companies

So I would say for bigger AI companies, such as the model companies, if you are building models, the H100 and H200 are the most popular hardware for now. I think the major reason is still the significant performance improvement compared with the other choices, like the A100, or the 4090 that some people also use. It's just much faster. And the H200 is even 90% faster than the H100 on LLMs like the 70B models.

Competition and Performance in AI Models

The fundamental reason is that competition between models is getting harder. And all of these AI companies (sorry, I'm just talking about model companies) are competing on two points: either your model is getting smarter, or your model is getting cheaper and faster. If you look at how OpenAI separates their products, you can see there are a lot of mini versions, meaning the faster and cheaper ones, and then o1 and 4o, which are targeting the most intelligent AI in the world. So either you can provide a very smart model or you can provide a very cheap model.

Memory Capacity and Model Complexity

So for the very smart model part, the H100 is playing a leading role. You can barely find any competitor on performance, on the computation part, or just on larger memory capacity. To share some background with our audience here: model parameter counts are growing so large. For example, Llama 3.2 is, I think, 70B at least. These models put so many parameters into the model infrastructure that you need very high memory capacity to be able to execute them, no matter whether for training or inference.

Legacy GPUs and New Requirements

And legacy GPUs just cannot fit that. You have to use GPUs with bigger memory, like the H100 and H200 we are talking about, to run these complex models without resorting to complex parallelism techniques. Besides larger memory, you also need higher memory bandwidth so that you can connect these GPUs together for faster speed. And of course the H200 and H100 simply perform better there too.
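To make the memory argument concrete, here is a rough back-of-the-envelope sketch in Python. It only counts the memory needed to hold the model weights, and it assumes the published capacities of 80 GB for an H100 and 141 GB for an H200. The function names are hypothetical, and real deployments also need memory for activations and the KV cache, so treat the result as a lower bound.

```python
import math

# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}

# Published memory capacities in GB (H100 SXM and H200).
GPU_MEMORY_GB = {"H100": 80, "H200": 141}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Memory needed just for the weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def min_gpus_for_weights(num_params: float, gpu: str, dtype: str = "fp16") -> int:
    """Lower bound on the number of GPUs needed to hold the weights alone."""
    return math.ceil(weight_memory_gb(num_params, dtype) / GPU_MEMORY_GB[gpu])


if __name__ == "__main__":
    params_70b = 70e9
    print(weight_memory_gb(params_70b))              # 140.0 GB in fp16
    print(min_gpus_for_weights(params_70b, "H100"))  # 2
    print(min_gpus_for_weights(params_70b, "H200"))  # 1
```

Under these assumptions a 70B model in fp16 needs about 140 GB for weights alone, which is why it has to be split across smaller-memory GPUs with parallelism techniques, while larger-memory GPUs reduce or avoid that split.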

Necessity of Specific Hardware for AI Companies

So I would say for model companies, especially the big model companies, this hardware is simply necessary, and of course the TEE mode too. But more important is that you don't get much of a trade-off even when you turn on the TEE mode. As our benchmark report shows, I think for Llama 3 70B the trade-off is around 5%, and the bigger the model you're running, the smaller the trade-off. So it means you are barely losing any performance even when you turn on the TEE mode.
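The trade-off described here is a relative throughput loss, which can be computed as in the short Python sketch below. The function name and the throughput numbers are made up for illustration; they are not figures from the benchmark report.

```python
def tee_overhead_pct(baseline_tps: float, tee_tps: float) -> float:
    """Relative throughput loss, in percent, when TEE mode is enabled.

    baseline_tps: tokens per second with TEE mode off.
    tee_tps: tokens per second with TEE mode on.
    """
    if baseline_tps <= 0:
        raise ValueError("baseline_tps must be positive")
    return 100.0 * (baseline_tps - tee_tps) / baseline_tps


if __name__ == "__main__":
    # Hypothetical measurements, chosen only to illustrate the formula.
    print(tee_overhead_pct(baseline_tps=1000.0, tee_tps=950.0))  # 5.0
```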

Adoption of TEE Mode in AI Companies

So the answer for these AI companies is: why not, right? I'm not losing anything, so why not use TEE? It gives better protection of everything. So I would say this is the best part. But I also want to point out that among smaller or long-tail AI companies, there's a trend beginning this year where people use the 4090 and GPUs like the A100 for AI inference, because they are not model companies.

Trends in Smaller Scale AI Companies

They're building AI applications or AI agents, or just fine-tuning on top of these open-source AI models. For these companies, the better choice is to just use the cheaper chips for their inference demand. This is why demand for the 4090 has been increasing so much this year. And I believe for a P2P marketplace like io.net it's a good year, because it means you need to collect the long-tail GPUs from the community, from everyone, gather them together and resell them to the AI companies.

The Growing Marketplace for GPUs

There's true market demand for such a GPU marketplace. But again, it's not just that the H100 and H200 are better; it depends on who we are serving. This is why, for now, Phala's strategy is to work with larger decentralized AI companies, because they do need to run bigger models on your marketplace. And for the smaller ones, if you're just doing inference, you might need to use TEE for AI agent inference protection or verification instead of model training or model inference.

Summary and Conclusion

So, yeah, just trying to add some comments on the background side.

Perfect. Thank you so much, Marvin. This helps to put it into perspective. You talked about how we have to think about who we provide the service to, so I would like to ask Tausif: we talk about GPU TEE mainly for AI or decentralized AI enablement, but now that we are partnering, what other use cases are there where you see the GPU TEE service being very valuable too? Because io.net is also not only serving AI customers, right?

Customer Demand for Decentralized Compute

I think those types of areas, where you have to deal with highly secure customer data and workloads like that, are where we start to see a lot of this GPU TEE demand fit together. Of course, to get it production ready we still have a long way to go. But in terms of where we're seeing the earliest customer demand and what we're really trying to solve for, especially from a decentralized compute aspect, and how we can make it more private and secure, those are some of the types of customers that we've seen would fit really well with this.

Expanding Use Cases and Engagement

Yeah, these are great insights on additional use cases. They sound like they're not core Web3 use cases, but they use the decentralization and the security part of it. So it's very interesting to see that we can also expand GPU TEE into additional use cases. I would like to let the audience know, because it's a small group here: in case anyone has specific questions in the last minutes, feel free to raise your hand. Maybe we can get you on stage, if this works. In the meantime I will ask another question, so don't be shy. If there's anything you would like to ask Marvin or Tausif, let us know. Another question to Marvin, also for the Phala community members here, because Phala is known as the TEE CPU cloud, right? With over 40,000 CPU nodes verified by TEE, or TEE CPU nodes, sorry. My question to you, Marvin, is how does.

Integration of GPU and CPU Technologies

So first of all, GPU TEE cannot work alone. Phala is the first company to deliver on-chain verification based on GPU TEE model inference. I don't know if everyone remembers, but just two months ago we presented a PoC, a proof of concept, showing that you can verify AI inference results and trace back what's happening in the TEEs for Llama models. To make that happen, the Nvidia GPU cannot work alone; you have to use a CPU TEE and connect the two. I will try to simplify the technology part, but the workflow is that you have to connect the CPU TEE with the GPU one and wrap them up as an entire trusted execution environment, to make sure the AI inference result can be verified and is executed on a TDX server in TEE mode.
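As a rough illustration of why the CPU TEE and the GPU TEE have to be checked together, the Python sketch below models a verifier that accepts a result only if both pieces of evidence are trusted and the CPU quote is bound to the GPU report. Everything here is a simplified assumption for illustration: the types `CpuQuote` and `GpuReport`, the binding scheme and the function names are hypothetical, and real attestation also verifies hardware vendor signatures, which this toy model omits. It is not Phala's actual protocol.

```python
import hashlib
from dataclasses import dataclass
from typing import Set


@dataclass(frozen=True)
class GpuReport:
    measurement: str  # identifies the GPU firmware/driver state (hypothetical field)
    nonce: str        # freshness value chosen by the verifier


@dataclass(frozen=True)
class CpuQuote:
    measurement: str  # identifies the code running in the CPU TEE (hypothetical field)
    report_data: str  # data the CPU TEE chose to bind into its quote


def bind_gpu_report(gpu: GpuReport) -> str:
    """Digest of the GPU report that the CPU TEE embeds in its own quote."""
    return hashlib.sha256(f"{gpu.measurement}:{gpu.nonce}".encode()).hexdigest()


def verify_combined(
    cpu: CpuQuote,
    gpu: GpuReport,
    trusted_cpu: Set[str],
    trusted_gpu: Set[str],
    expected_nonce: str,
) -> bool:
    """Accept only if both sides are trusted, fresh, and bound to each other."""
    return (
        cpu.measurement in trusted_cpu
        and gpu.measurement in trusted_gpu
        and gpu.nonce == expected_nonce
        and cpu.report_data == bind_gpu_report(gpu)
    )
```

The binding step is the point of the sketch: a valid GPU report on its own says nothing about which CPU-side environment drove the GPU, so the verifier checks the two as one unit.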

The Role of Legacy Technology

That is to make sure the remote attestation is verifiable. TL;DR: the GPU cannot work alone for TEE; you have to implement GPU and CPU TEEs together. So this is where our legacy technology can play a part. But besides that, the Phala blockchain is not designed just for CPU. We didn't support GPU before simply because there was no GPU TEE. So now we have begun to implement GPU TEEs, and our on-chain infrastructure can fully support GPU as hardware in the block space, quite smoothly, I would say. So, yeah, that's the answer. It's just that our blockchain was never designed just for CPU. We abstract what is the.

Understanding Trusted Execution Environments (TEE)

Oh, sorry. Okay, I'll go a little bit deeper here. TEE is not bound to specific hardware; it's not bound to CPU and it's not bound to GPU. Even on CPU there are multiple TEE standards, like Intel SGX, Intel TDX, AMD SEV, or Amazon Nitro. On GPU we've got the Nvidia H100. So TEE is more of a software system description than a hardware technology. Of course hardware technology is part of it, but TEE is a system, not a hardware standard. This means that when the Phala blockchain kicked off four years ago, we abstracted the software nature of TEE as a system, instead of just running on whatever Intel provides.

Advantages of a Non-Hardware Specific Design

We did that abstraction in a very, I would say, elegant way to match what the nature of this trusted execution environment system looks like and what it needs. The benefit is that, although it took us a lot of time back then, today it pays off: when new hardware comes up, we can integrate it smoothly into our network because we were not designed only for Intel SGX. That's the reason why we can integrate GPUs smoothly. But of course for the Phala community it means that we need some time to upgrade our tokenomics so that GPU providers can receive rewards as an incentive. And that's what we call Phala 2.0.
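The design choice of treating TEE as a system rather than as one vendor's hardware can be sketched as an interface with pluggable backends. The Python below is a hypothetical illustration only: the class names, the `kind` labels and the evidence format are assumptions of this sketch and are not taken from Phala's codebase.

```python
import hashlib
from abc import ABC, abstractmethod
from typing import Dict


class TeeBackend(ABC):
    """Hardware-agnostic view of a TEE: anything that can produce evidence."""

    kind: str = "abstract"

    @abstractmethod
    def attest(self, payload: bytes) -> Dict[str, str]:
        """Return evidence that `payload` was handled inside this TEE."""


class CpuTeeBackend(TeeBackend):
    kind = "cpu-tee"

    def attest(self, payload: bytes) -> Dict[str, str]:
        # Placeholder evidence; a real backend would return a hardware quote.
        return {"kind": self.kind, "sha256": hashlib.sha256(payload).hexdigest()}


class GpuTeeBackend(TeeBackend):
    kind = "gpu-tee"

    def attest(self, payload: bytes) -> Dict[str, str]:
        return {"kind": self.kind, "sha256": hashlib.sha256(payload).hexdigest()}


class TeeRegistry:
    """The network only talks to the interface, never to a concrete chip."""

    def __init__(self) -> None:
        self._backends: Dict[str, TeeBackend] = {}

    def register(self, backend: TeeBackend) -> None:
        self._backends[backend.kind] = backend

    def attest(self, kind: str, payload: bytes) -> Dict[str, str]:
        return self._backends[kind].attest(payload)
```

With this shape, supporting a new class of hardware means registering one more backend, while the code that consumes attestations stays unchanged.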

Future Developments and Community Engagement

We are finalizing some details on the design, and we will put out a proposal for a public vote in our community on this before the end of this year. By then you can see all of the details: what the Phala 2.0 white paper looks like, what the technical infrastructure looks like, how we can be more friendly to Ethereum builders, and how GPU providers can be part of the tokenomics game. All of this information will be released before the end of the year.

Amazing. Thank you so much, Marvin. This was a great summary for the audience to also understand how Phala is positioned based on the specific hardware, TEE GPU and TEE CPU.

Looking Ahead to Collaborations

Great, thank you so much. I didn't see any hands up from anyone wanting to ask questions, so we are about to close the space for today. But maybe the last words go back to Tausif. Besides, of course, the collaboration between Phala and io.net (because we are not only working on the hardware side, we also invest time into finding the best joint use cases and into further research on GPU TEE), what are you most excited about? Maybe different use cases coming up on io.net, launches or partnerships? What's next, Tausif?

Excitement for Future Innovations

Yeah, for sure. Obviously I'm really excited about everything that we're going to build together as part of this strategic collaboration between io.net and Phala Network. You mentioned some of the benchmarking and research papers that we're working on, which I think we can talk about in the future. But in the near term, what I'm most excited about is something I touched on a little earlier when we started the space, when I talked about the different parts of the AI tech stack and how io.net is thinking about it. Now that we've been focused on this foundational compute layer for a significant portion of this year, it's really starting to crack these next few use cases: what does decentralized compute really enable, and how do we start to take on some of the other use cases that are currently the purview of centralized compute?

Benchmarking Performance in Decentralized Compute

How we enable use cases like distributed training over a decentralized compute network, and how we match the benchmark performance of centrally trained models: that is a key thing we're working on and trying to figure out, and we're helping researchers with support, like compute, to be able to do that. So that's something you'll start to see us talk more about in the future, and something I'm personally very excited about in the near term.

Amazing. This definitely sounds exciting. And as you said, we are quite advanced in the space in providing the specific hardware for decentralized AI, but there's still a lot of research involved.

Collaboration and Research Synergy

Right. To everyone joining the space today: keep your eyes open for more and more research being published, and then hopefully also very quickly executed, because we can really push the overall decentralized AI space forward by combining the expertise of all the partners. That's very exciting to me as well. Marvin, last words to you: maybe an exciting use case, or something specific on the collaboration between Phala and io.net.

Exciting Upcoming Research Releases

Yeah, I think we just say stay tuned, because we are going to publish a very interesting joint research paper between Phala and io.net, and that should be super helpful for both academia and industry pioneers. Since we've got a smaller audience today, I can share some alpha here. It will be about the very new H200 GPUs. You can barely find research papers on the H200, because people are wondering: why should I get it? What's the benefit of it? So we are co-working with io.net on this research paper, and it's already pretty much done.

Research Paper Release Timeline

So it's going to be released maybe next week or in two weeks. Stay tuned. That will be a super interesting thing, a solid partnership on this for AI. Furthermore, Phala will implement more GPU TEEs in our Phala 2.0 network, and I believe io.net will be one of the most significant providers to our ecosystem on this. As you know, if you are providing GPUs and using GPUs on io.net, you can get rewards, right? And if you use them to lend GPUs to Phala, maybe you can even get extra rewards. No one knows what's going to happen, but stay tuned.

Short Term and Long Term Expectations

In the short term we're going to publish a research paper together, and in the medium term I believe that io.net can be a strong supporter of the Phala 2.0 TEE network.

Wow, this is amazing. Thank you for sharing the alpha. You guys have heard it here first, so exciting times to come. This is a very important collaboration for Phala, and for io.net as well, because we really bring in the TEE expertise. Stay tuned for the bigger announcements, the benchmark, and the information coming out based on our research.

Conclusion and Gratitude

Thank you so much, everyone. Thank you for joining. I hope you all learned about the overall collaboration and how TEEs can really improve security to build a better decentralized AI landscape. And thank you, Marvin and Tausif, for joining and sharing your expertise this Friday. We'll see you all soon. Thank you. Take care.

Thank you. Bye bye.

Thank you, Tausif, for joining. Thank you, everyone.