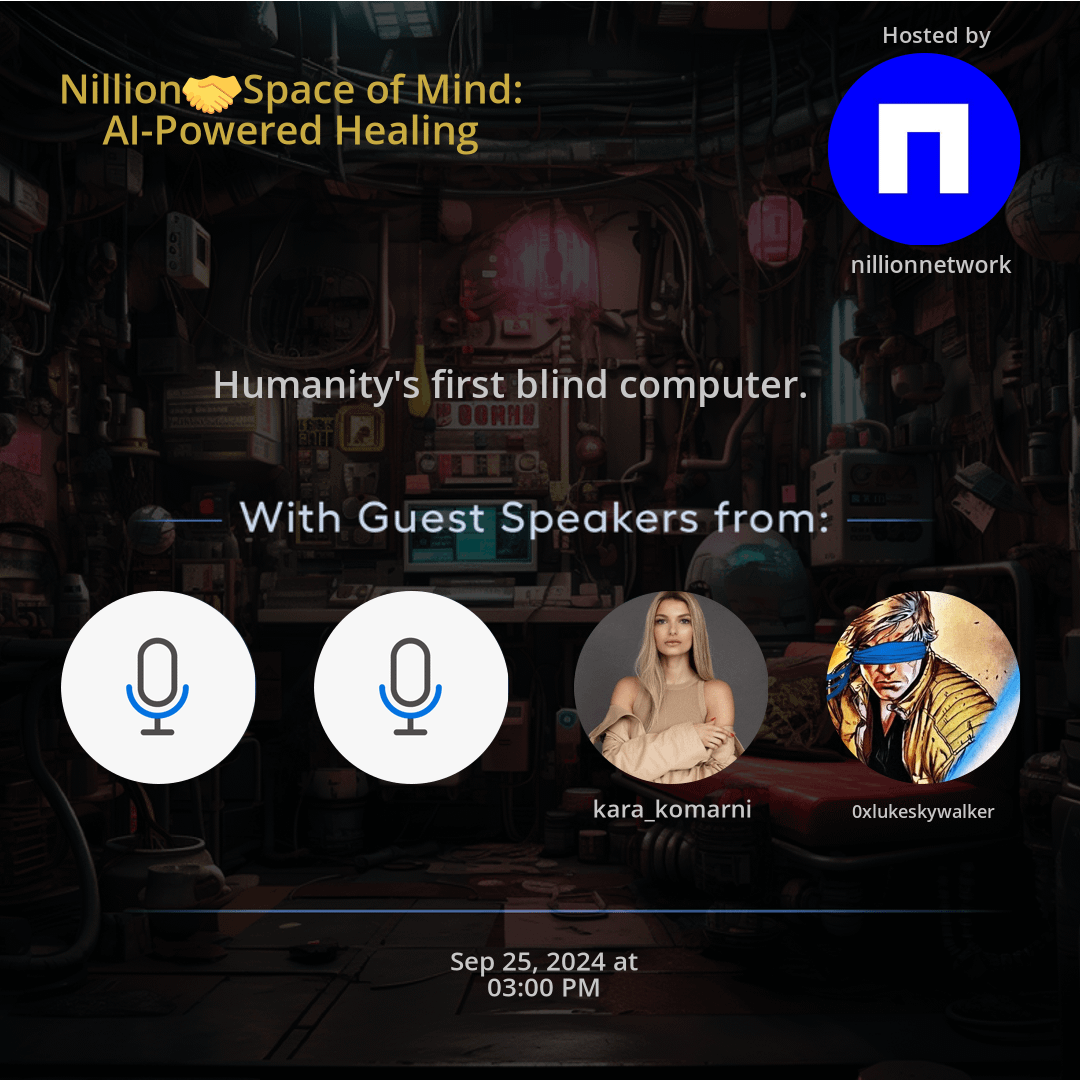

Space Summary

The Twitter Space "Nillion Space of Mind: AI-Powered Healing" was hosted by nillionnetwork. Dive into the innovative world of Nillion, where the fusion of AI and emotional intelligence revolutionizes mental health treatment and personal growth. Through the concept of AI-Powered Healing, Nillion challenges traditional therapy methods, emphasizing ethical considerations and human-machine symbiosis for profound healing experiences. The space showcases how AI contributes to personal transformation, redefines mental wellness practices, and offers innovative tools for emotional resilience. Explore the evolution of AI in mental health within Nillion, paving the way for a holistic approach to mental well-being.

Questions

Q: How does AI enhance mental health treatment in the Nillion space?

A: AI in Nillion redefines therapy by offering personalized and innovative approaches tailored to individual needs.

Q: What role does human-machine symbiosis play in mental well-being?

A: The synergy between humans and AI in Nillion fosters deeper emotional connections and facilitates profound healing experiences.

Q: Why is ethical consideration crucial in AI-driven healing processes?

A: Ethical awareness ensures that AI applications in mental health prioritize user well-being, data privacy, and informed consent.

Q: How does Nillion challenge traditional therapy methods?

A: Nillion introduces unconventional AI-powered techniques that transform how individuals engage with their emotions and mental states.

Q: What key principles guide AI-powered solutions in mental wellness?

A: Technological empathy and human-centered design principles form the foundation of AI innovations in mental health within Nillion.

Q: What makes the Nillion space a groundbreaking platform for mental wellness?

A: Nillion showcases the seamless integration of advanced technology and emotional intelligence, offering a holistic approach to mental well-being.

Q: How does AI contribute to personal transformation in Nillion?

A: By leveraging AI capabilities, individuals in Nillion experience profound self-discovery and emotional growth, transcending traditional healing methods.

Q: What opportunities does AI-Powered Healing present for mental health care?

A: AI-Powered Healing revolutionizes mental health care by providing scalable, accessible, and customized solutions that cater to diverse individual needs.

Q: How can individuals benefit from the fusion of AI and emotional intelligence in Nillion?

A: The collaboration between AI and emotional intelligence in Nillion offers users a unique toolkit for self-awareness, emotional regulation, and mental resilience.

Q: In what ways does Nillion redefine the landscape of mental wellness practices?

A: Nillion introduces a paradigm shift in mental wellness by combining technological innovations with empathetic support systems that empower individuals in their healing journeys.

Highlights

Time: 00:12:45

The Evolution of AI in Mental Health: Exploring how AI advancements redefine traditional mental health practices in Nillion.

Time: 00:25:18

Human-Machine Synergy for Healing: Witnessing the transformative power of AI-human symbiosis in enhancing emotional well-being.

Time: 00:35:59

Ethics in AI-Powered Healing: Delving into the ethical considerations surrounding AI applications in mental health care within Nillion.

Time: 00:45:32

Innovative Therapeutic Approaches: Discovering unconventional AI-driven techniques that redefine traditional therapy methods in Nillion.

Time: 00:55:21

Empathy-Driven AI Solutions: Embracing technology with a human touch, the core of AI-Powered Healing principles in Nillion.

Time: 01:05:14

Personal Growth through AI Integration: Exploring how AI contributes to personal transformation and self-discovery in the Nillion space.

Time: 01:15:29

Revolutionizing Mental Health Care: Understanding the paradigm shift brought by AI-Powered Healing in the field of mental wellness.

Time: 01:25:18

Tools for Emotional Resilience: Empowering individuals with AI-backed emotional intelligence tools to navigate their mental well-being.

Time: 01:35:42

Holistic Approach to Mental Wellness: Uniting technology and emotional support for a comprehensive approach to mental well-being in Nillion.

Time: 01:45:55

Emotional Intelligence Revolution: Witnessing the dawn of a new era where emotional intelligence and AI converge to redefine healing practices within Nillion.

Key Takeaways

- AI enables profound advancements in mental health treatment and personal growth.

- The Nillion space highlights the potential for symbiotic relationships between humans and AI.

- Embracing AI in healing opens new pathways for self-understanding and emotional support.

- Human-machine collaboration can revolutionize traditional therapy methods, offering more personalized and effective solutions.

- Exploring the depth of AI integration in mental health unveils new perspectives on consciousness and emotional well-being.

- The Nillion space showcases how AI can act as a catalyst for emotional healing and personal transformation.

- Understanding the ethical implications of AI-driven healing processes is crucial for responsible innovation in mental health care.

- AI-powered solutions in mental well-being emphasize the importance of technological empathy and human-centered design principles.

- The concept of AI-Powered Healing challenges traditional notions of therapy and self-improvement, paving the way for a new era of mental wellness practices.

- Nillion illustrates the fusion of cutting-edge technology and emotional intelligence, creating a harmonious synergy for mental wellness.

Behind the Mic

Introduction and Excitement for Real-World Challenges

Alrighty, well, let's kick things off. Thanks, everyone, for joining. This is another session with one of our builder partners on the Nillion network. It's always super exciting to have these sessions and to dive deeper into the different products and the different synergies that exist with respect to the Nillion network. But I'm always particularly excited when it's a session about real-world problems, real-world challenges. I think that we have seen a lot of exciting use cases already that were sometimes a little bit more on-chain based. And of course, that's very important. That's very exciting. But I personally get particularly excited when we are talking about product challenges that touch the real world, that go beyond what we've seen in crypto today. And today, this is definitely one of those occasions. We have a really exciting guest today with Kara, who is building Space of Mind, a really exciting company in the mental health space. We met a couple of months ago, and ever since then we have been very excited to work together because there are a lot of technical and also product overlaps here that we identified. And that's why I thought it would be good to bring Kara with us today so she can tell us a little bit more about her vision, what she's building at Space of Mind, what the product journey looks like, and also where the overlaps are with respect to the Nillion network. So thanks again, everyone, for joining. And without further ado, I will pass on to you, Kara, to start with a quick intro.

Kara's Introduction and Background

Hi, everyone. Thank you for having me and thanks for the intro, Lucas. Yeah, I'm a founder of Space of Mind. Welcome, everyone. Just a little bit about myself. So I actually am not from the mental health space. I started in a very different industry, in VR learning. We were scaling enterprise training during the pandemic. So it was pretty good timing, helping people kind of learn in VR. But about three years ago, I had my own story with mental health. I had been diagnosed with PTSD and really dug deeper into the health space of trauma care and sort of the broken treatment model that we're dealing with. So here I am building the solution for myself and many other people who are dealing with the exact same problem, which I'm sure we'll delve deeper into. But thanks for having me.

The Importance of Addressing Mental Health Challenges

Yeah, absolutely. Thanks for coming, Kara. It's really exciting to have you on, and I'm really passionate about diving deeper into this, which is arguably one of the biggest challenges that we are facing in society. I think even today, a lot of people may underestimate how many people out there are struggling. I actually took a look at it. And you, Kara, probably have better stats on this, but I took a look at it just before this call and found basically things like one out of five Americans struggling with mental health illnesses, and serious mental health problems even touching up to 6% of US adults. Not sure what the global statistics are, but it definitely is a pretty shocking number. And I would say that certainly the fast-paced lifestyle and particularly social media, the emergence of it that we have experienced over the last 10 to 15 years, has made things more difficult for a lot of individuals. And certainly then on top of that, with the COVID pandemic, even more so. This is a massive global issue, and I'm always fascinated by entrepreneurs that are trying to improve that situation, either by giving better access or more affordable access to people, so they can now have access to these treatments that otherwise they wouldn't have. So I'm really excited to have you on and dive deeper into this, maybe kicking things off with the beginnings of Space of Mind and how you sort of look at the mental health space more broadly and holistically today in the US, but also beyond that, of course, globally.

Kara's Focus on Trauma-Informed Care

Yeah, absolutely. So the particular area that I'm focusing on is trauma-informed care. And there's a big reason behind it, because as I've gone through this experience myself, I started doing the research, and it turns out that over 70% of the population actually struggles with traumatic experiences and has very limited access to help. When it comes to finding the avenues to get support, you'll have three options. You can go to a therapist, where you'll face in the US about an eight-week average wait time, and over 60% of therapists don't even accept new patients. And then only a minority of those are trauma-informed, which means really looking at how your past influences your present-day challenges and helping you overcome those. Then you have your primary care, which, as we know, is very strapped for time and has insufficient training in trauma, and then there's a big over-reliance on medication, which doesn't really address those root causes. It's more like a band-aid on top of a bigger wound. And then you also have emergency care. So mental health care is actually the biggest driver of unnecessary emergency department use. So certainly not the place where you'd want to end up if you are struggling. And for me, the bigger picture really is how do we meet people where they are and create the right solution that allows them to have this access on demand at a lower cost, which is what we're offering.

Offering Affordable and Comprehensive Mental Health Solutions

We're offering group coaching, led by therapists, at only $20 per session, which is on average 80% cheaper than any sort of therapist-led session you'd be able to access. And then there's this unique combination that we offer of peer support, therapist-led coaching, and AI-powered tools to really help individuals build what we call this psychological immune system, where you can really practice coping skills like emotional regulation and cognitive restructuring, so that they become second nature. And this is really what's missing right now. A lot of these tools might stay at a surface level, like standardized content, which doesn't necessarily help you dive deeper, or you'd have peer support groups that are free, but there isn't really any moderation there. So we're trying to combine multiple different solutions in one destination to truly create that adherence and access that I believe everyone should have.

Combining Human and AI Solutions in Mental Health

Yeah, no, that's fascinating. And what I love about this is what you just said here, right? It's really trying to take the best of all these worlds that exist, maybe as single products, and essentially combine them into one comprehensive offering that takes the best from all of these different approaches. I mean, I think one thing that we have seen people trying in the past is obviously just purely therapy with groups. We have seen people with individual therapists. We also have seen digital products that were focusing solely on AI, basically having your health chatbot that is there for you and is trying to support you on your journey. And I would say that obviously, particularly with the emergence of generative AI one and a half, two years ago, that got a lot better. But still, even then, I think what a lot of people that I know struggled with, if it was purely AI-based, is the lack of ultimately human exposure, being in groups with people that go through similar experiences, and also ultimately having a therapist with the right qualification and the social-emotional intelligence that I think is still difficult to replicate, despite the fact that AI is improving so dramatically. So I love to hear that there is really sort of a combination, as it seems, of the very strong human element of it, but at the same time still the best of AI. Do you agree with that, Kara? Did you see a similar sort of development over the last year? So why, would you say, have some of those products taken off or not taken off that much just yet in the past?

Addressing the Challenges of AI-Powered Mental Health Solutions

Yeah, absolutely. I mean, there is a bigger question around all of these AI-powered solutions. It's one thing to want to build them because we're looking at accessibility, but it's another to ask, are people actually wanting this? And a lot of research has been done to show that, yes, there's an appreciation for the ability to access the support whenever, whether it's 1:00 a.m. and, you know, there is no one around. But the problem is there is this kind of ceiling that you hit when interacting with the bots you mentioned, when it comes to emotional intelligence, when it comes to the depth of questioning and context. So this is really why we decided to still have that therapist shine through and lead the experience. But then AI steps in at two key points where therapy currently struggles. One is adherence: usually the drop-off on any average intervention would come after two or three sessions. And we've already seen research showing that self-help interventions combined with human encouragement can be as effective as traditional therapy. We have therapists guiding our sessions, but afterwards, your AI actually remembers key points and provides personalized summaries, tools, and exercises to keep you engaged in practicing.

Continued Engagement and Self-Reflection in Mental Health

So this is sort of going beyond the session. It's already been shown that people forget what they learned in therapy and struggle to implement those tools day to day. So we changed that. We really have those AI tools acting as assistants, reminding you of the skills learned and providing those practice opportunities. Think of it almost like going to the gym. It's like you need that regular, consistent effort to build muscle.

I think I lost you, Kara. Not sure if it's just me. Oh, hello. Hello. Yes, now I can hear you again. Maybe it wasn't on my end. I hope it wasn't, but I can hear you now again.

Okay, excellent. So, yeah, as I was saying, the AI tools here, the way we're implementing them in self-paced work, are informed by session transcripts and the content of what's been delivered by the therapist. The AI then reminds you of the skills learned that you can practice in your own time, to really make sure that they become second nature. And I was comparing it to going to the gym, with that kind of regular, consistent effort to build muscle. With most of those solutions, you know, you have your one session with a therapist and then you wait for the next one. There isn't any follow-through. So that's really what we're big on, making sure that happens. And early pilots we've done already showed there's over 60% uptake of those skills practices in between sessions. So there's clearly the demand for people to want to understand how to do this for themselves and how to empower themselves with the right resources.

The Role of AI in Mental Health

So AI can really hold up a mirror to these unhelpful thinking patterns and help you break free from these negative cycles. It can help you, using session transcripts, look at enduring patterns and really start understanding what it is that keeps coming up for you. So then you can bring that to the session, and it will make the session way more informed and engaged from the user perspective. So there's a lot that we're doing to co-pilot the therapist, but we're not planning to replace one anytime soon. I think another really important aspect here is avoidance. A lot of mental health care solutions right now tend to put the blame on the patient for drop-off in treatment. We're reporting on, you know, 40% drop-offs for exposure therapies. But really we should start asking ourselves, how do we make that experience more engaging, so that it's something people want to do, not something they have to do? So this is why we're putting a lot of emphasis on making this coping skill development convenient and easy. And yeah, these are probably the key highlights, I would say.

Engagement and Therapist Support

There's also the therapist's perspective, which again is less talked about, but I think it's important. AI really helps bridge that gap between sessions by recommending those relevant exercises. And I think that's where therapists usually cannot be present, based purely on the demand that we talked about. There's already a massive supply shortage. And then there's the cognitive reframing support. If we can have AI trained on your anonymized data and therapist expertise, it can help you practice those reframing techniques on deep-rooted thinking patterns, which again a therapist cannot be there holding your hand for, you know, in between those sessions. And for them, it means that they have a lot more time for that kind of rapport building in the session, with less admin work, 'cause they don't have to spend 20 to 30 minutes writing up the session transcripts and doing any follow-ups. This is all done for them. So yeah, I would say there are quite a few unique things to what we're trying to achieve.

User Journey and Personalized Support

Yeah, that's awesome. And it's fascinating for me to hear what you just said there. I mean, I guess you touched on a lot of really good areas of the product offering. What I would love to hear from you is maybe some more light on the actual user journey. So let's say you have a certain individual who is struggling with a certain thing. They come to your website. What are the different product offerings that you have? And how exactly does the balance work between AI and, at the same time, the group element of it and the therapy element of it? I know you have different product offerings there, from the veteran program to also the chat one, but I would love to hear from you, from a user experience perspective, exactly how that would work.

So we first started by having a full-on assessment, which is the PCL-C-based assessment that therapists would use to help people identify if this is the right solution for them.

The Healing Process and Group Support

But we have found that we don't want to be making people feel like there's something wrong with them, that we're assessing their impairments. We really changed that to helping people choose how to heal. And this is why we have dedicated spaces for different life transitions that people might struggle with, whether it's grief, whether it's veteran support, as you mentioned, whether it's a founder who's dealing with burnout, or whether it's mothers struggling with juggling career and motherhood. So really it's trying to put people together on similar growth paths and give them that access to support on demand. So you essentially self-select a space you'd like to join. And we have a weekly session, either as a drop-in, or you can sign up for an eight-week program, which is a bit more intensive and introduces you to those key coping skills every week.

Psychoeducation and Skills Practice

And we provide a combination of what we call psychoeducation, which is really helping you understand why what you're learning is important and how it's incorporated into the larger scheme of, you know, well-being, and then skills practice. So you really get to understand how this works day to day, for example, if it's regulating your nervous system or if it's reframing a negative thought. We have practices like Socratic questioning, for example, that really have the ability to help you identify evidence for and against the negative thought, to go through that deep questioning to start reframing the way you think, and to shed that old conditioning, which is very difficult to do otherwise. We have a one-on-one peer matching option. So after the session is finished and after you sort of completed a short assessment, which we do, to track your quality of life improvements.

The User's Personalized Toolkit

You have an option to stay accountable with another peer in that same group. Usually it's about ten folks in one session. And following on from that, it's all about self-paced work, as I mentioned. So you have your own personalized coping skills toolkit, which we will use to help you practice what's been learned in the session. And what we started doing in the Bay Area here is these deeply immersive in-person coaching circles as well. We saw a big need for connection in person. So we're doing these activation events now to expose people to some of this information and content in real life and then transition them to these online spaces. Our main go-to-market right now is B2B.

Organization Partnerships and Accessibility

So we would work with different organizations, with prepaid programs, to offer this to their teams. We work with a lot of professional organizations in healthcare, physicians' associations, to really extend this access also to people who help. So really helping the helpers. And the focus here has been on these high-stress professions, really those folks who usually would be strapped for time but really need the support the most.

Awesome. And the initial step in figuring out exactly which group is the right one for me, which treatment is the right one for me, and which plan I should follow: is that all determined by an AI sort of questionnaire that I fill out, and then AI is pointing me in the right direction, or is that for me to choose when I go for that plan?

Self-Selection and Coping Skills Menu

So this is still self-select in terms of the space you'd like to join. But our programs have this curated menu of evidence-based coping skills, because there are actually over 636,000 ways to meet the DSM-5 criteria for PTSD or trauma. So that experience really varies from person to person. So we offer this menu of coping skills where people are exposed to different modalities and can choose for themselves what really resonates with their personal needs. And we really emphasize that there's no one way that fits all, and we give them all these different tools and ways that they can then dive deeper into once they know what resonates.

Affordability and Access to Support

And in terms of the affordability aspect, I recognize that one of the key propositions that you guys are offering, obviously, is not just the blended dual world of AI and the human element of it, but also the affordability aspect of it, right? The ability to reach many more people who may in the past not have had the ability to access it. Can you maybe describe a little bit more what the common barriers usually are, and also how you achieve the more economic model that you have put in place to enable a wider range of people to access it? Is that purely because of some of the aspects being AI-based, or do you have any other, you know, product concepts in place that enable you to just be more affordable?

Barriers to Entry and AI Solutions

Yeah, absolutely. I mean, so the barriers to entry are really quite a few. There's obviously the high cost that we mentioned. An average therapy session in the Bay will be like $200 an hour. There is limited availability of therapists, which we discussed. I think the most recent statistics said that if we were to have one-on-one weekly sessions with a therapist, only 7% of the population in the US would be able to have access to that. So there really is quite some way to go before all of us are able to access this form of support. And there's also a lack of personalized support, which can hinder this healing process. I talked about those different ways you can meet the criteria.

Custom LLMs and Personalized Recommendations

And, you know, a therapist certainly would not have the capacity to spend more time and dive deeper into those intricacies and your own context, which is where AI can really step in and learn more about you as you go through the sessions and it reads the transcripts. And really our goal is to create those more custom LLMs as we go, so that we can be more proactive around recommending those different kinds of resilience advice across your personal life, your relationships, your career, and even your health. You know, eventually we can add to that by plugging in wearable data to actually show you the ways that you could be improving.

Cost Reduction and Group Dynamics

So on the cost aspect here, of course there's group support, which helps reduce the cost for us. So with $20 times ten, we're able to meet that therapist rate. But actually, I wouldn't say it's a compromise in any way, because the number one benefit people cite in joining our sessions is that they no longer feel alone in what they go through. So it's as much about combating loneliness as anything else. And I think group work is sometimes adding much more value than any one-on-one session ever would. And of course, as AI gets more mature, we can rely less and less, maybe, on having a therapist for a full hour. Maybe we can start decreasing the time of being exposed to a therapist and really do more of that self-paced work I mentioned.
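The pricing arithmetic quoted in the conversation can be checked with a few lines of Python. This is only a sketch using the figures mentioned by the speakers ($20 per person, about ten people per group, roughly $200 per hour for a one-on-one session in the Bay Area, and "on average 80% cheaper"). The function names are illustrative, and the implied average session price is derived here from the 80% figure; it was not stated in the Space.

```python
# Figures quoted in the conversation (USD).
GROUP_PRICE_PER_PERSON = 20     # price of one group coaching session
GROUP_SIZE = 10                 # "about ten folks in one session"
ONE_ON_ONE_HOURLY_RATE = 200    # quoted Bay Area therapy rate


def group_revenue(price_per_person, participants):
    """Total paid by a full group for one session."""
    return price_per_person * participants


def discount_percent(reference_price, price):
    """How much cheaper `price` is than `reference_price`, in percent."""
    return (reference_price - price) * 100 / reference_price


def implied_reference_price(price, discount_pct):
    """Reference price implied by a quoted percentage discount (derived, not quoted)."""
    return price * 100 / (100 - discount_pct)


# A full group covers the quoted one-on-one hourly rate.
print(group_revenue(GROUP_PRICE_PER_PERSON, GROUP_SIZE))

# Discount per participant relative to the quoted Bay Area rate.
print(discount_percent(ONE_ON_ONE_HOURLY_RATE, GROUP_PRICE_PER_PERSON))

# Average session price implied by the "80% cheaper" claim.
print(implied_reference_price(GROUP_PRICE_PER_PERSON, 80))
```

Against the quoted Bay Area rate the per-person saving comes out higher than 80%, which is consistent with the 80% figure being described as an average across therapist-led sessions in general.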

AI and Initial Stages of Support

This is something we'd like to work towards to really increase scale. And there's a big component of what AI can do at those initial stages to really increase access, with that initial series of questioning and really triaging folks to sessions. So, you know, with time we want to introduce an even lower-priced subscription which can have just those self-paced tools available, if someone is not high risk and perhaps doesn't need that kind of regular access to a therapist. So that's really going to be the next stage of making sure that even those with very minimal resources can have help.

Collective Experience and Benchmarking

And another really cool thing that we're doing here is looking at quantifying collective experience. None of those platforms are looking at benchmarking what types of modalities and tools can be most beneficial for different experiences. We ask people to track and assess those at every step of their user journey, post-session and after the self-paced work, to start creating this kind of data set. And for people who have absolutely no resources to be in those sessions and find out for themselves, we can at least give them access to this information, so that maybe we can accelerate access to the most effective care that way.

AI Component in the Mental Health Journey

Awesome. Yeah. So basically the AI component, as far as I hear it from what you described, is basically a combination of different things, right?

Creating Summaries and Personalized Support

But one of them is potentially creating summaries of conversations that happen in groups. So let's say you have your regular therapy session: being able to actually get a concise summary of what the conversation was about. That's obviously one part of it. But then an even bigger part of it is actually having your personalized support in your pocket that you can chat with. As you eloquently explained before, it's really fascinating to me to see the impact here, because usually, as you pointed out, you have a session and then maybe you see each other a week later, in more frequent cases, probably a month later or whatever, and there is nothing in between. But now you have your personalized support in your pocket. You can chat with someone, ideally in sync with the sessions that have happened before. So I guess it's that combination of using AI for some nice-to-haves, such as summaries, but then really must-haves to bring it to the next stage in terms of the effectiveness of all of it, by having essentially your personalized version in your pocket that you can chat with whenever you want at any point in time. Is that accurate for where you utilize AI, or are there other things as well?

The Importance of Remembering Therapy Insights

Exactly. This is the main big deal: we really do not remember what we learned in those sessions, so adherence and effectiveness suffer because there isn't any follow-through. So number one is the ability to practice in between sessions, but also identifying patterns of thinking. This is something that is very difficult to do on your own and maybe sometimes can take years of therapy to identify. So really, what I mentioned about AI being sort of this way of holding up a mirror to unhelpful thinking, for us, and this is where working with you really comes in, is to be able to integrate this cognitive distortion analysis and do that in a privacy-preserving way. I know this could be a great use case to really reduce this avoidance about integrating AI, because we know that in mental health privacy is a big concern. But yeah, also actually being able to analyze those session transcripts and, with time, become more and more aware of your own kind of traps that you fall into, so that you can essentially get out of your own way by practicing the self-awareness and creating those new habits of thinking.

Engagement and Interaction with AI on the Platform

Yeah, awesome. Definitely excited to also talk about the technical intersections in a second, because I think they're super exciting and a fascinating exemplification of how important privacy is in particular. But before we get there, I would love to hear, and maybe there are no stats yet, how often do people actually use AI on the platform? Is this something they are interacting with every day? Does that very much depend on the person, or is there some pattern that you identify? What are your thoughts there in terms of the usage of, let's say, the chatbot product in your overall product offering?

Mm. So it really varies based on whether it's a prepaid pilot program, which of course, you know, encourages more utilization because this is a cost that's already covered for our users, versus drop-in session engagement, which is more of a B2C offering.

Utilization Rates and Education on AI Tools

So you can kind of try it on and not necessarily commit to ongoing support for those eight to twelve weeks. At that prepaid B2B engagement level, we are seeing people come back to the platform about twice a week to do the self-paced work. And interestingly, we also compared the utilization of AI-powered tools versus just the regular audio practices you would see on other platforms, and there was a 60% increase in uptake, purely because of the ease of use. I think what made a difference is being able to explain to people how this informs their learning progress as they are in the session. And I think this is also a big aspect of educating users on how this can be helpful. Where we're seeing less engagement is when that education doesn't happen, so when it's just made available to people but they don't necessarily understand how to do this or why they're doing it.

Feedback and Improvement of AI Capability

So I'd say that's been incredibly encouraging. We, of course, get feedback that sometimes users wish the AI could go into a bit more depth and that the series of questions could be more sophisticated. But obviously, the more mature this AI gets, with good domain expertise, the more we can achieve that. Right now we're in a kind of testing mode, and there's of course a ceiling we're hitting on how in-depth we can be in our interaction with users. But we've definitely seen an increased uptake of those tools when they're informed by the session content and there's a clear continuity there.

Yeah, it's fascinating. I mean, I can see this growing into something really exciting, right? And it's a perfect example of how useful AI can be for solving real-world problems.

AI and Future Personalization in Therapy

Oftentimes we obviously spend a lot of time thinking about the dangers of it, and that's a good thing to do as well, because we need to be aware of what could go wrong and we need to be ready for that. But this is a perfect example of utilizing AI in a space where we definitely need help and where it can make a dramatic impact. And I can see the growth journey here. I think you mentioned that at the moment it's probably a general chatbot. Maybe I'm wrong here, but it sounds like it's a general chatbot that's available for anyone. The next stage there obviously could be personalization: being able to fine-tune a chatbot that knows you really well, that has access to all of the data, that has access to basically everything that makes you you.

Further Development of Personalized AI Support

And then the whole experience obviously gets to an even higher level than what it might be with a more generic chatbot. And then, of course, you can also have agentic capabilities: agents that can take actions for you and recommend products for you. I think this is something you're also looking into, to actually come up with action calls for what an individual user can and should do on the basis of some of the sessions, on the basis of how they might have changed their tone or how they are writing. All of this information is extremely high value and, if processed correctly and personalized correctly, I think it can be incredibly impactful for people. So that's really fascinating to hear. Is there some sort of personalization element already in there, or is that what you refer to as a next iteration in the upcoming months?

Current Personalization and Future Goals

Yeah, so we already have a fine-tuned model that integrates some of this domain expertise, since we are putting emphasis on the trauma-informed aspect. So that's something we've already done as a customization, but we're still keeping a therapist in the loop to approve or reject the detection of those cognitive distortions. So this is very much test mode, and we want to do this responsibly. We work with a lot of really amazing therapists who work at the National Center for PTSD. They're doing a lot of research on usability and acceptability, so we're definitely integrating the learnings there and doing this in a way that doesn't open up a window for any hallucinations or incorrect analysis.

Pilot Studies and Effectiveness

And I think this is why it might take us a bit longer, but we just want to do this right. And then the next stage, over the next six months, is going to be a much more personalized approach on a one-on-one basis, as opposed to just the group note summary and the practices informed by your progress in the session.

Absolutely. Yeah. Awesome. I mean, it's super exciting to hear that. And the impact of it, I think, will be absolutely massive. In terms of the story so far, which stage are you guys in? You mentioned before that you did some pilots and validated the impact of it, which is super exciting to hear.

Results from Pilot Programs

Yeah, so to date we've had about 270 sessions run on the platform, and we've done two official studies that tracked the effectiveness of those tools, with some really encouraging statistics there. We saw a 40% improvement in quality of life, particularly in self-care, within eight weeks. For context, for trauma interventions it usually takes about 40 sessions to see any sort of meaningful change. For CBT it's slightly less, about 20 sessions. So this is really proving you don't need years of therapy to move the needle towards well-being.

Community Impact and Future Collaborations

And I think this is certainly important, and our goal here really is land and expand. So we're using this pilot-first strategy to identify whether this is effective for the population we're working with, and then scaling from there. We did quite some work with nonprofits as well, with terrorist attack victims struggling with trauma three-plus years post-incident, and got incredible feedback on the community aspect: people being able to look forward to these sessions because they struggle with loneliness around that experience, and the tools they're exposed to being so actionable.

Addressing Mental Health Needs in Various Settings

I think we saw a lot of ability to implement, which is what I think the numbers are showing us. The increase in quality of life across the board, in romantic relationships, career, and education, is really a testament to that. We really want people to be able to implement those tools and be their own best source of support without having to rely on a third party. We're also starting to do some work with the government. I'm not able to announce that yet, but I'm certainly passionate about helping fill the gap in the therapist shortage there. And hopefully we'd like to also work with schools and universities.

Identifying Student Mental Health Needs

There's an increase in PTSD diagnoses among students, and we would love to be able to increase access to support given that campuses have something like two or three counselors at a time, so certainly not enough time and not enough resources to tap into there. So there's so much need, and this pilot-first strategy is allowing us to showcase in different contexts how beneficial our approach can be: a high-touch approach, but with scalability also enabled by the AI integration that we're doing.

Blending Human and AI Support in Therapy

Love it. And I think, as you said, universities are a really exciting place to focus on. It's not that long ago that I was there, and I think it's definitely a place where this would be incredibly valuable and impactful, for sure. Awesome. One thing we touched on a lot today, obviously, is the blending of the human element and the AI element. I think we're fully aligned that AI is amazing.

The Role of AI in Human Wellness

And it's a tool that has dramatically improved over the last years and will continue to dramatically improve. Today, it's less about replacement and more about how it can be used effectively to strengthen insights, in your case in a really important health context, rather than replacing individuals. And this is a great example of an implementation that focuses on exactly that. But out of curiosity, let's say AI continues to advance, and the accuracy with which it diagnoses things and also acts agentically on your behalf gets better and better over time: do you ever see a potential future where it is purely AI?

The Future of AI and Emotional Intelligence

I think today we're fully aligned that it shouldn't be, of course, and I personally am a big believer in emotional and social intelligence. I just wanted to hear your thoughts on whether you think AI can also become that emotionally and socially intelligent at some point, and potentially be the one and only tool. Is this something you're thinking about, or are you a big believer that it will always be a tool that strengthens us, but not a tool that will at any point replace actual real-world therapy?

Preventative Measures in Mental Health Care

Yeah, well, to me it's actually about the level of support based on the need. So certainly where it can come in is in that kind of preventative aspect, which is so important. So really gathering data sources from kind of mobile phone usage, your social media usage, to really start detecting maybe the early signs of needing support. So I certainly am passionate about looking into that and even collaborating with platforms that already exist, which we have, for example, one that kind of tracks your mobile phone usage to track kind of your daily wellbeing score.

The Role of AI in Mental Wellness

And for me, of course, right now it's about leveraging AI to augment those human capabilities. But I think as we're able to increase access to general wellness support without needing the more involved therapist support, this could certainly be the way. And this is where we're looking to go with a lower-tier subscription. If someone is just looking to explore and is not ready to commit to having access to support in real time in a live session, it could definitely be a way of increasing resourcefulness around resilience: how do you build that psychological immune system, as I mentioned, in a self-paced way? There are a few platforms doing this. Where I think it's still going to be important to have someone, whether it's peer support and not necessarily only therapist support, is accountability. All of us struggle with implementing new habits, and it's easy to fall back into the pattern of old conditioning.

Accountability in Building Resilience

And this is where I think we really do still need that touch point with someone who is going to keep us accountable, to help us create these new neural pathways for responding to adversity and to be able to practice and stay on it. For those who are already highly motivated, I think this could be an excellent way to access help without needing access to the live session. But I think the majority would still need that support system beyond just having those AI tools.

Yeah, absolutely. Awesome. Cool. Well, all exciting stuff. One thing that you started touching on before, Kara, that we haven't gotten into in depth yet, but we'll have to open that page now, is of course privacy. I think it's very obvious to anyone that this is incredibly impactful.

Concerns About Data Privacy

It's incredibly valuable to use AI in combination with human-led therapy, but of course, whenever we touch AI, we have to think about what data we feed into the AI, where that data ultimately goes, where it is stored, and how it is potentially used. I think this is probably one of the best examples of an area where the data is incredibly sensitive and incredibly personal. It couldn't be more high value, I would say, because you're ultimately sharing things with technology that you might not want to share with individuals, or with a company that can then take that information and potentially share it, exploit it, or sell it, whatever it could be. And I think there is also massive product differentiation potential here, which you are obviously going after, in ensuring that a lot of these AI aspects in the platform are privacy preserving.

The Importance of Data Control and Trust

They are credibly neutral. There is nobody who has any direct insight into the underlying information when it's processed or when it's stored. And this is obviously where I think Nillion can play a really exciting role. That's why we build what we build. We build it because we believe there are a lot of real-world use cases out there where data should belong to you and you only, and it should not be exposed to a range of individuals who may take advantage of that information and who may get access to your secrets and, ultimately, your weaknesses or the areas that you want to develop in. And I think this is so important to emphasize. So what I'm getting really excited about, and we'll have to hear your take on this as well, Kara, is the potential to be able to use those bot tools and get action calls, get recommendations of what to do.

Private Interaction with AI Tools

But while chatting with that bot, with that tool, I know that a lot of this information, a lot of the diary entries that I provide, are ultimately private, ultimately secret, and not shared with a wide range of third parties that I may or may not know. I'm also excited about the potential to see transcript analysis happening from these sessions, with that analysis happening in a privacy-preserving manner as summaries are generated. Again, you don't have to trust a single server. You don't have to trust a single company or individual to take care of the information. I think those are two immediate overlaps that I am really excited about, of course with the potential to develop into smart private agents where agentic capabilities could flourish, all of it in a fully privacy-preserving and decentralized manner.

Addressing Privacy Concerns in Mental Health Tools

But, yeah, this is what got me excited when I heard about it. I strongly believe it's one of the biggest problems that needs to be solved, and at the same time it is one of the areas where data is incredibly sensitive and personal, and that's a perfect overlap not just with what Nillion is building but, more broadly, with what privacy-enhancing technologies are being built for. Right? That's exactly the use case. It's exactly the vertical that I think we are building this for, next to many others, but that's a great one to highlight. What got you excited about this? Or when did you decide that privacy shouldn't just be a nice-to-have, but needs to be a core focus of your product implementation?

Yeah, so as I mentioned, I work with a few folks at the National Center for PTSD who do the research on understanding what the users actually want.

Understanding User Concerns About Privacy

And a presentation I attended a few months back really showed that the number one concern and barrier to adoption is privacy. And it's, of course, completely understandable. These are very sensitive topics being discussed, and that data should be encrypted in some way to make sure there's truly a sense of peace of mind around the fact that when you do share, it's not going to be leaked in any way. And then there's the user-controlled aspect. I think we've heard about a lot of leaks of data that contained sensitive information, 23andMe being just one example. And certainly this is not something that we want to be exposed to even as a possibility. So I always knew the level of sensitivity we're working with in our data set.

Ensuring Data Protection and Privacy

So this has to be protected. And particularly, of course, when working with governmental organizations, it's even the next stage of security, which is completely understandable. They have their own processes that have to be followed, and that security has to be there. And any sort of enterprise rollouts that we're going to be doing will require that of us. So I looked at this space, and of course, as you mentioned, a lot of it is open-source work, a lot of it is readily available. And the inputs that you put into those models could end up being leaked publicly in one way or another. So we have to be responsible in the way we're going to be rolling this out, going beyond these pilot studies: how do we scale this and still have those privacy concerns addressed, and how is this data encrypted and user controlled, really to foster that trust and encouragement?

Future Directions for Privacy in Data Management

And participation, sorry. I think that's really where I started exploring solutions. I'd obviously heard of a few different options, and I think blockchain tech certainly has a space to fill there.

Awesome. Cool. Well, before we wrap it up, what are the next steps for you guys? You touched on some really exciting progress. You also touched on some of the conversations that are currently happening to potentially roll out new pilots and direct product implementations. Do you want to share anything for the next three months, the next six months, anything that you're working towards?

Yeah, so I think we already touched upon this, but the personalized and scalable approach combined is pretty unique, and it's hard to do when it comes to this kind of trauma-informed care model.

Progress in Trauma-Informed Care

So we're really looking at this more custom LLM work and at being able to be more informed from a user perspective going into these live sessions, so that progress can be accelerated. This is something I'm really passionate about developing more. And then there's also being able to track progress more objectively. We want to start looking into voice analytics to provide more data-driven insights into any symptom improvement, as opposed to self-reported measures. So looking at tonality of speech: how do we help you gauge improvements without any bias in your answers? So that's another really important aspect. And in the future, as I think we talked about, how do you integrate those diverse data sources to really help with proactive intervention?

Integrating Various Data Sources for Support

And this is where we could look at different partnerships and collaborations to feed those in, so that you can become more aware of the times that you might need support. And we could become that kind of notification in your day where it's like, you know, it seems like you've been experiencing high levels of anxiety for X number of weeks, maybe it's time for a session. If we can become that nudge and a source of support where people might not even be aware they need it, before things get too hard, I think that would be wonderful. So that's something we're also looking forward to doing. And, yeah, scaling the work that we do with different companies and organizations and really seeing the impact we can have in different types of communities is definitely something we're excited to see.

Concluding Remarks and Future Collaboration

Awesome. Well, super exciting, and I can't wait to see the progress. Thanks for joining, Kara. We'll wrap it up here, but I'm very excited to see what you are building. I strongly believe that there is tremendous potential in this dual approach of using AI while at the same time not forgetting about the real-world therapy sessions that are so critical, and leveraging the strengths of AI to improve performance and enable people, particularly in between sessions, to have essentially a friend in their pocket who knows them well and who can help them on a 24-hour basis. I think this is all really fascinating. What I'm even more fascinated by is your focus on privacy.

The Critical Role of Privacy in Technology

I think this is something that one company or another might think about last, and oftentimes too late, I would emphasize, because usually it becomes important only once everything has leaked. But thinking about that before information gets leaked, before all that sensitive information is spread around, and before companies potentially use the data, sell it, exploit it, or share it, that's really phenomenal and really unique, to be honest. And it's really fascinating to see that you're that early and that focused on that critical aspect of your product as well. So I'm really excited to work closely with you and really excited to see your product go from strength to strength. The first numbers that you shared with us here are super exciting as well.

Closing Statements and Future Engagement

And yeah, I can't wait to work closely and see the progress. And everybody here today who joined, and everybody who will listen to the recording, please check out the Space of Mind product, and also share it with your friends and your colleagues, because I think it's really a platform that can make a big impact. And from that perspective, everybody, thank you very much for joining. As always, a pleasure. I'm always very excited to talk with prospective builders and partners on the Nillion network. We are building privacy for a reason. We're building it because we really want to make a big impact on the way that sensitive, high-value data is dealt with. And I think this is a perfect example of that.

Final Thoughts and Gratitude

So thanks, everyone, for joining again. The next session will come up soon. And Kara, thanks again for your time, and thanks for sharing your vision and your current incredible results. Thank you so much. Thank you. Great to connect. Awesome. Thanks, everyone.