Space Summary

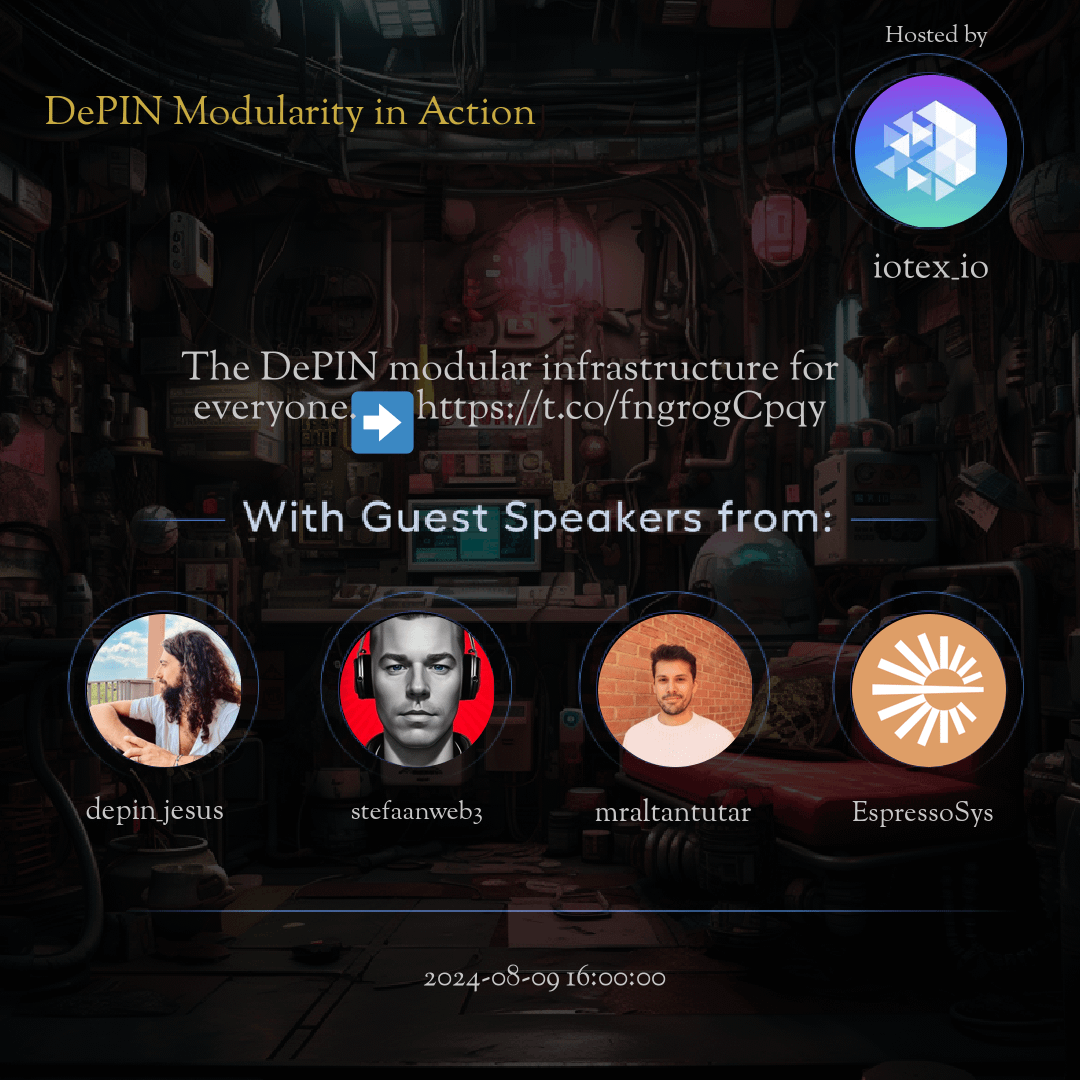

The Twitter Space "DePIN Modularity in Action" was hosted by iotex_io. DePIN presents a cutting-edge modular infrastructure solution catering to diverse industries with its flexibility, scalability, and commitment to user-friendly experiences. Through innovative architecture and robust security features, DePIN ensures efficiency and adaptability in a rapidly evolving market. Community engagement and sustainability practices further enrich the ecosystem, while strategic partnerships enhance the value proposition. Continuous development efforts solidify DePIN's position as a technological leader, providing users with a dynamic and secure environment for growth and success.


Questions

Q: How does DePIN cater to different industries' needs?

A: DePIN's modular infrastructure offers flexibility to adapt to the specific requirements of various industries.

Q: What advantages do businesses gain from DePIN's scalability?

A: Scalability in DePIN allows businesses to grow without compromising on performance or efficiency.

Q: Why is inclusivity crucial in DePIN's infrastructure?

A: Inclusivity in DePIN ensures that users with diverse needs can easily utilize the infrastructure.

Q: How does DePIN ensure security for its users?

A: DePIN implements robust security measures to protect data and maintain user trust.

Q: What drives the continuous innovation in DePIN architecture?

A: Innovation is key in DePIN to enhance efficiency, cost-effectiveness, and user experience.

Q: What role does community engagement play in DePIN's growth?

A: Community engagement fosters collaboration, feedback, and improvements within the DePIN ecosystem.

Q: How does DePIN contribute to sustainability?

A: DePIN's eco-friendly design and practices showcase the commitment to sustainability.

Q: Why are partnerships important for DePIN?

A: Partnerships and collaborations boost the value of DePIN's infrastructure through shared expertise and resources.

Q: How does DePIN stay ahead in technological developments?

A: Continuous development and updates ensure that DePIN remains innovative and aligned with industry advancements.

Highlights

Time: 00:15:29

Flexibility and Adaptability of DePIN: Exploring how DePIN caters to diverse industry needs with its adaptable infrastructure.

Time: 00:25:17

Scalability for Businesses with DePIN: Understanding how businesses benefit from DePIN's scalable solutions for growth and performance.

Time: 00:35:40

Inclusivity in DePIN's User Experience: Examining how DePIN promotes inclusivity with its accessible and user-friendly infrastructure.

Time: 00:45:11

Security Features of DePIN Infrastructure: Delving into the security measures implemented by DePIN to protect user data and privacy.

Time: 00:55:28

Innovations Driving Efficiency in DePIN: Discovering the innovative advancements that enhance efficiency and cost-effectiveness in DePIN.

Time: 01:05:17

Community Collaboration in DePIN Ecosystem: Exploring how community engagement fuels growth and development within the DePIN ecosystem.

Time: 01:15:50

Sustainability Practices of DePIN: Highlighting DePIN's eco-friendly initiatives and sustainable practices within its infrastructure.

Time: 01:25:33

Partnerships Boosting DePIN Value: Illustrating the significance of partnerships in enhancing the value proposition of DePIN.

Time: 01:35:19

Continuous Development in DePIN: Understanding how ongoing updates and innovation keep DePIN at the forefront of technological advancements.

Key Takeaways

- The flexibility of DePIN's modular infrastructure caters to diverse needs in various industries.

- Scalability and customization options make DePIN a versatile solution for businesses of all sizes.

- The accessibility of DePIN's infrastructure promotes inclusivity and user-friendly experiences.

- Innovations in DePIN architecture drive efficiency and cost-effectiveness for users.

- Seamless integration capabilities of DePIN empower businesses to adapt to evolving market demands.

- DePIN prioritizes security features to safeguard data and enhance trust in the infrastructure.

- The community engagement surrounding DePIN fosters a collaborative environment for growth and improvement.

- DePIN's commitment to sustainability is reflected in its eco-friendly design and practices.

- Partnerships and collaborations enhance the value proposition of DePIN's modular infrastructure.

- Continuous development and updates ensure that DePIN remains at the forefront of technological advancements.

Behind the Mic

Introduction to the Event

Hello, hello, welcome, everyone, to our Modularity for DePIN X Space. We're getting everyone joined and settled, so we'll start in just a few minutes. Cool to see so many friends around. Nothing better than talking modularity on a Friday. I know a lot of people are out in New York this week for SBC and the DePIN Summit, but we're going to have our own powwow here, virtually. So excited to have everyone. Just a couple more minutes while we have the last speakers join and we'll get started, everyone.

All right, I think we got our awesome crew of speakers all on board. Let's get started, everyone. Thank you so much for joining our X Space today on DePIN Modularity in Action.

Purpose of Today's Space

The goal of today's Space is to bring together some of the best infrastructure builders across the Web3 sector who are working on different pieces of the modular infrastructure stack, and not just for DePIN projects. As DePIN reaches the masses and commands more and more traffic on blockchains, the demand for battle-tested, sound, best-in-class infrastructure is growing. So we wanted to bring some of our friends and some of the top projects in this category together to talk about what the opportunities are, what they're working on, and what the current pulse of the modular infrastructure sector is. So, without further ado, I want to start with some introductions.

Introducing the Speakers

So today we have Giuseppe from IoTeX, Stefan from Protocol Labs and Akave, Mash from RISC Zero, Jill from Espresso Systems, and Altan from Nuffle. I'm going to have each of them give a quick one-to-two-minute intro about themselves and a little high-level overview of their project to kick things off. So why don't we start with Jill from Espresso?

Hey, yeah, thanks so much. Good morning or good evening, wherever you are. My name is Jill. I'm one of the co-founders of Espresso Systems. I am also the head of strategy there, meaning I oversee all things go-to-market. So great to be on here with a bunch of fellow infra players who we love and work with.

Jill's Introduction to Espresso Systems

So, at Espresso Systems, we are working on a sequencing layer. What that means is that within rollups, within any blockchain, really, there's a specialized role of a transaction sequencer who, as the name suggests, orders transactions and puts them into the order in which they will be ratified and ultimately finalized on the chain. We create software that enables rollups to coordinate that sequencing, or that block building, with each other so that they can achieve interoperability and synchronous composability, effectively offering a user experience as if they're back on the L1. There are a bunch of other traits that come along with this that rollups can benefit from, such as decentralization, optimizing the sequencer revenue that they're capturing, and other things like this. But the name of the game is really rollup interoperability.
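As a rough illustration of what a shared sequencer does, the sketch below merges pending transactions from two rollups into one globally ordered block, and each rollup then reads its own slice while keeping the shared positions. The `Tx` type, the ordering rule (arrival time, then rollup name), and the function names are assumptions made for this example; they are not Espresso's protocol or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tx:
    rollup: str    # which rollup submitted the transaction
    arrival: int   # hypothetical arrival time at the shared sequencer
    payload: str


def sequence(pending: list[Tx]) -> list[tuple[int, Tx]]:
    """Assign one global position to every pending transaction."""
    ordered = sorted(pending, key=lambda tx: (tx.arrival, tx.rollup))
    return list(enumerate(ordered))


def view_for(rollup: str, block: list[tuple[int, Tx]]) -> list[tuple[int, Tx]]:
    """Filter the shared block to one rollup, keeping the global positions
    so that every rollup agrees on the relative order of all transactions."""
    return [(pos, tx) for pos, tx in block if tx.rollup == rollup]


pending = [
    Tx("rollup-b", 2, "swap tokens on DEX"),
    Tx("rollup-a", 1, "claim device rewards"),
    Tx("rollup-a", 3, "bridge rewards"),
]
block = sequence(pending)
for pos, tx in block:
    print(pos, tx.rollup, tx.payload)
```

Because both rollups derive their view from the same ordered block, a transaction on one can rely on the position of a transaction on the other, which is the property the interoperability argument depends on.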

Overview of Project Developments

So, yeah. Excited to dive into that topic alongside you all and explore the ways in which this is applicable to the DePIN ecosystem.

Awesome. Thank you, Jill. Let's have Stefan go next.

Hey, good morning. Thanks for having me. Or good afternoon. I'm Stefan. I worked at Protocol Labs for a little bit over three years on building out the Filecoin network and helped grow one of the largest decentralized storage stacks with Filecoin. And obviously, I'm hoping that everyone knows what Filecoin is, but we've grown a ton. And so as part of the customer requirements, we have actually built an L2 to support customers with on-chain data lakes.

Stefan's Introduction to Protocol Labs

This L2 really helps users bring their off-chain datasets onto a decentralized storage network. What we have seen, and we can talk about it today as well, is that a lot of customers, even in the DePIN space, are still using Web2 infrastructure to host their data assets. And so to make that transition easy and to let them connect their existing applications to a decentralized infrastructure, we have built Akave as a way to provide a seamless user experience for connecting Web2 applications to a Web3 backend. And today we are seeing a ton of use cases popping up that not only take advantage of decentralized storage to reduce cost, but also completely change the way they charge back their costs to users and, you know, essentially take advantage of the composability that decentralized stacks unlock to change business models in ways that lead to better monetization of their datasets.

Mash's Introduction to RISC Zero

Thank you, Stefan. Let's have Mash go next.

Hi. Hi. I'm Mashiat, or Mash. I work at RISC Zero. I am part of the partnerships and growth team. And, yeah, so I can talk a little bit about what we're doing at RISC Zero. We are essentially building the universal ZK infrastructure for the future, and our bread and butter is our ZKVM. You know, we were one of the first teams to launch a general-purpose ZKVM, the world's first that uses a RISC-V architecture. Our goal was essentially to make deploying ZK applications super easy and to help developers get to market in days rather than weeks or months.

The ZKVM Development

The way that we've gone about designing our ZKVM is making sure that developers only have to write guest programs, whether in Rust, Go, or C, rather than having to do cryptography or build their own circuits. And our goal is really to use this innovation to help ZK technology go through rapid development and rapid advancement. So we've been fine-tuning our ZKVM for the past two years. We've also been working with a lot of layer twos and rollups, specifically Optimism, to build out Zeth, which is our first open-source ZK Ethereum block prover. And quite recently, in June, we released the latest version, ZKVM 1.0. This is the first production-ready, general-purpose ZKVM, and when it's paired with our universal verifier, it really enables dapp developers, chains, and infrastructure providers to leverage ZK universally.

Altan's Introduction to Nuffle Labs

We're continuing to optimize performance across our stack. Our goal is really, again, to help a lot of application developers deploy ZK, or access ZK, as easily and as seamlessly as possible. And yeah, we've obviously been collaborating with IoTeX for a while, and I'm really excited to explore how our kind of decentralized vision fits in with DePIN and its journey.

Thank you so much, Mash. And let's go with Altan next.

I'm Altan. I'm one of the founders of Nuffle Labs. Nuffle Labs actually spun out of NEAR two months ago. So we are the team that built NEAR DA, which is the data availability layer solution that's built on NEAR.

Nuffle's Data Availability Layer

So it exposes NEAR's data availability for rollups. But Nuffle isn't just about DA. The next frontier for us is building a fast finality layer. That's an EigenLayer AVS that allows any network, pretty much any L2, to access information from other networks with three-to-four-second finality. So that allows you to build any multi-chain application, fast bridge, or fast liquidity movement between different L2s. And yeah, so those are the two products that Nuffle Labs is known for.

Awesome. Thanks, Altan. And let's go with Giuseppe.

Giuseppe's Introduction to IoTeX

Sure. Well, first, I'm quite excited to be here with everybody. My name is Giuseppe. I'm part of the DevRel team at IoTeX. And IoTeX is the infrastructure that puts all the friends that are up here on stage with us today all together. Why? To basically streamline the process of building DePIN applications. And so we have various modules that we present to DePIN builders that go all the way from where the data is at its inception, at the hardware level, so from the hardware abstraction layer, all the way to the blockchain layer for token economy incentivization.

Details of IoTeX Modules

And throughout all that, there are many different modules that run on decentralized networks. Some of them we provide in house, and for others we leverage existing technologies, working with our friends up here on stage today to provide them to DePIN builders. So I'm excited to go into the details of this and why this is important. And yeah, to hear from all of you guys as well.

Awesome. Thanks to our speakers for these amazing introductions. I don't think I've introduced myself yet. My name is Larry, head of business development at IoTeX, and I'll be moderating our awesome X Space today.

Understanding Modularity

So, for those of you just joining, the topic of today is modularity in action for the DePIN sector. For those that are kind of new to this modular concept, modularity is basically the use of individual and distinct functioning layers of technology that can be aggregated and recombined to achieve a larger purpose. Long story short, it's a way of putting best-in-class components together in order to achieve a larger goal. And this goal is to power DePIN, which we're going to focus on today.

DePIN Application Structure

So a lot of different modules are needed to make DePIN work. As Giuseppe mentioned, IoTeX is doing a lot of things on the device side. Devices are kind of the crux of the DePIN sector. So we have products like ioID and ioConnect that help give on-chain identities to devices. We have other partners that help to transmit data and services from those devices into different types of databases. And a lot of our partners today, once they have the DePIN data, act upon that data in different ways: storing it, in the case of Filecoin; sequencing it, in the case of Espresso; running zero-knowledge proofs on it, in the case of RISC Zero; and making it available in the data availability layer, in the case of Nuffle.
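The flow described here can be pictured as independent modules that share one interface and are chained together. The sketch below is only a toy model: the module names, the dictionary message format, and the placeholder values are all assumptions for illustration and do not correspond to any project's real API.

```python
from typing import Callable

# Every module takes a message and returns an enriched copy (assumed interface).
Module = Callable[[dict], dict]


def identify(msg: dict) -> dict:
    return {**msg, "device_id": "device-42"}   # placeholder identity


def sequence(msg: dict) -> dict:
    return {**msg, "position": 0}              # placeholder ordering slot


def store(msg: dict) -> dict:
    return {**msg, "stored": True}             # placeholder storage receipt


def prove(msg: dict) -> dict:
    return {**msg, "proof": "placeholder"}     # placeholder proof


def compose(*modules: Module) -> Module:
    """Chain modules so the output of one becomes the input of the next."""
    def pipeline(msg: dict) -> dict:
        for module in modules:
            msg = module(msg)
        return msg
    return pipeline


pipeline = compose(identify, sequence, store, prove)
result = pipeline({"reading": 21.5})
print(result)
```

Since each module only depends on the shared interface, any one of them can be swapped for a different provider without touching the others, which is the practical meaning of modularity in this discussion.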

Exploring the Layers

So I want to go one by one through each of the layers, kind of in that top-down flow that I just mentioned, and give each of the projects a little bit more time to explain what is unique about their solutions in tackling the module that they focus on. So why don't we start first with the sequencer layer, going back to Jill. What makes Espresso different, and why is sequencing transactions, as kind of a first step before handing them off to some of the other modules, important for Web3 and for DePIN?

Yeah, absolutely.

Jill on Sequencing Transactions

So as I mentioned earlier, it all really comes down to interoperability for us. If you think about the way that blockchains work today, blockchains and rollups end up being pretty much in silos, in large part because they each have their own way of sequencing transactions. They all have their own, you know, software that's doing that. In some cases, as with many rollups today, they have their own centralized entities that are doing the transaction sequencing. In the case of Ethereum, of course, today you have this huge kind of supply chain of proposers and block builders and even solvers who are working in tandem and have economic arrangements with each other to sequence transactions.

Interoperability as a Key Focus

Our aim is to create a layer that can sit across multiple chains and coordinate sequencing between them. And so think about that in the context of the DePIN space. You know, if you are running a DePIN network and you've earned some tokens by, you know, running Wi-Fi software, collecting images for use in AI training, or whatever it is from your device, whatever action has allowed you to earn some tokens on that network, and you then want to get liquidity for those tokens from a DEX, say, that sits on a different chain, in a different network, you're going to need to bridge to that DEX, or even more ideally, just be able to interact with that DEX on the other chain as if it were on the same chain as you.

Wrapping Up with Espresso's Mission

And that's what we're aiming for, that's really what we're about: by coordinating sequencing across multiple chains, we can provide developers and users with something like that experience. There's a lot going on under the hood. We have a sequencing marketplace, which is somewhat akin to a block building auction, if you're familiar with how those work in Ethereum. We have a fast finality layer and a data availability layer that are not really meant to be standalone products. Again, it's all in service of making the sequencing layer function in a way that's very fast and efficient and that, again, gives users that L1-like experience without sacrificing the modularity of being able to build your own chain as a developer.

Importance of Efficient Data Management

Awesome. So it's really important in the DePIN sector to take this unstructured, raw data and sequence it in a way that makes it more compatible and interoperable. Now that we have the sequenced DePIN data, the next step is really to find a place to store it, whether it's for long-term storage or immediate use in the DA layer. So why don't we go to Altan, who can maybe share a little bit more about what Nuffle is doing on the DA side?

Introduction to Data Availability

Yeah, sure. Yeah. I'll start with a little bit about our story. The way we got into DA was that I used to work at the NEAR Foundation, and our team used to work at the labs team, which is Pagoda, and obviously the foundation. When this started to take off and we started seeing a lot of L2s, like Optimism and the OP Stack launching and Base being a very successful launch, we looked back at the NEAR tech stack and said, hey, what are the things that we can build here that are useful for rollups? And the basic thing that NEAR actually solved in 2020 by building a sharded blockchain was data availability. The reason is that, because NEAR is a sharded blockchain, if a shard goes down, the other shards should be able to come in and reconstruct that shard. And with that, there's a very robust data availability layer built into NEAR. And because NEAR has a bunch of users, almost 2 million users a day, it needed to be built to be cheap and scalable.

The Importance of Data Availability for DePIN Applications

And why is that important for DePIN? A lot of DePIN applications actually need a lot of transactions, so they need to be storing tons of transactions somewhere. So for that reason, Nuffle, with NEAR DA, is a very compelling solution for a lot of folks. And the other thing is efficiency: block finality is almost 2 seconds on NEAR, so you can actually finalize your sequence pretty fast. So for those reasons, as we always say, it's a very useful solution for any DePIN rollup.

Amazing. So yeah, the DA layer is essential to make the data available and usable by different parties, right? I think there are a lot of different things that DePIN data can be used for. But finding a place to store it for the long term and running analytics on top of it is one important use case.

Akave and Long-Term Data Storage

Another is to generate proofs of real-world work off of it. So that's really what Akave and RISC Zero are working on. So maybe we can start with Akave. Tell us a little bit more about your former work with Filecoin to store data for the long term, and some of the new things you're doing with Akave to run analytics and AI over that data. Please go ahead.

Yeah, thank you. So, obviously, Filecoin is the largest decentralized storage network today, and it is also the largest zk-SNARK-enabled network that's out there. What that means is that in Filecoin we're using zero-knowledge proofs to validate that your data's integrity is maintained over time. That is super important, because the problem when you're managing large datasets, like video, audio, anything that becomes too large to actually store on chain, is that you have to find a way to easily validate these off-chain datasets in an on-chain manner. And Filecoin has successfully accomplished that and has been running for the last four years at really large scale.

Filecoin's Methodology for Data Integrity

And the way Filecoin does it is this. First of all, the data gets converted into CAR files, and cryptographic hashes that represent the actual off-chain dataset get stored on chain. And it's these cryptographic hashes that get validated on a regular basis, namely on a daily basis, to ensure that data integrity is maintained. That platform is used today for storing large media files, and it is also a great place to store backups or archives of chain states, transaction states, you name it. So if you're looking at long-term data availability, Filecoin is the right place to store it. Now, the way to store it into Filecoin is where Akave comes in, because what we have seen in the last three years is that users would come to us and say, hey, I would like to take advantage of Filecoin instead of Amazon.
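The general idea of keeping only a hash on chain and checking the off-chain data against it later can be sketched in a few lines. This is a deliberate simplification: it uses a plain SHA-256 digest over the whole dataset and an in-memory `Ledger` class as a stand-in for chain state, both of which are assumptions for illustration. Filecoin's actual mechanism relies on content identifiers and zero-knowledge storage proofs, which this sketch does not attempt to reproduce.

```python
import hashlib


def commitment(data: bytes) -> str:
    """Short fingerprint of an arbitrarily large off-chain dataset."""
    return hashlib.sha256(data).hexdigest()


class Ledger:
    """Hypothetical stand-in for on-chain state: dataset id -> committed hash."""

    def __init__(self) -> None:
        self._commitments: dict[str, str] = {}

    def commit(self, dataset_id: str, data: bytes) -> None:
        # Only the hash is recorded; the data itself stays off chain.
        self._commitments[dataset_id] = commitment(data)

    def audit(self, dataset_id: str, stored_data: bytes) -> bool:
        # A periodic check: does the data held by the storage provider
        # still match what was committed?
        return self._commitments.get(dataset_id) == commitment(stored_data)


ledger = Ledger()
ledger.commit("video-001", b"frame data")
print(ledger.audit("video-001", b"frame data"))   # intact copy
print(ledger.audit("video-001", b"frame dat4"))   # corrupted copy
```

The on-chain footprint stays constant no matter how large the dataset is, which is why this pattern suits video, audio, and other data too large to store on chain.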

User Experience and Integration with Akave

Can you please give me an S3 interface? So, I would like to get all the benefits and advantages of a decentralized network, which include, obviously, the verifiability, the lower cost, the scale, the locality, et cetera. But for now I still want an experience similar to Amazon's, so that I can easily transition my off-chain datasets in a very transparent, very user-friendly way. And so this is where Akave came in. By the way, Akave is a spinoff of Protocol Labs, and I'm its CEO. We took a couple of engineers and started building this, because what we're seeing is that you can have the best technology in the world, but if users don't have an easy way to adopt it, it doesn't get adopted. The easy way to adopt it today is by providing these interfaces.

Long-Term Goals and Demand for New Data

And that is what we're building with Akave. Now, the end goal, in a lot of cases, wasn't just to preserve data. Like you said, it was to analyze it, to run ELT workloads against it, or to share these datasets with a community in a verifiable way, so that they could track who is actually consuming them and then charge back to those consumers in a way that creates new revenue streams. And so today, with this new AI wave that started last year, we're seeing a ton more demand for datasets that are created from LLMs, like synthetic or generated datasets, and also for more curated datasets collected by DePIN devices, which now have a much higher value than the data that was originally available.

Creating On-Chain Data Lakes with Akave

And so to enable these users and these new workloads, we've created Akave, which essentially gives you the ability to create an on-chain data lake. You can easily ingest your datasets through your Web2 applications, because you can just point to an S3 endpoint, or an S3-compatible endpoint, and at the same time you can still take advantage of smart contracts, act on those datasets, and build, you know, new business models, et cetera. So, yeah, that's where we're coming from. And we can dive deeper into some of the use cases if you want to, but there's a lot of momentum now. I would say, like, last year we didn't have DePIN as a real class or subclass. Now I'm really happy to see that this is a new trend that is bringing a lot more ecosystems together, because that's what it takes to move some of these workloads from the cloud into a decentralized infrastructure.

The Future of DePIN Applications and Zero-Knowledge Proofs

Absolutely. We're all really excited to see DePIN flourish. And having the ability to store and analyze DePIN data is a huge use case and is going to bring a lot of opportunities. Another huge use case is taking this data that's sequenced and available and stored, and generating proofs of real-world work. And these days that means zero-knowledge proofs. Everyone's migrating over to this philosophy of using ZK to verify everything that's happening off chain. And for DePIN, of course, a lot of the real-world activities are done off chain. So I want Mash to share a little bit more about what RISC Zero is doing on the ZK proving side, both for Web3 and for DePIN.

RISC Zero's Contributions to Decentralized Proving

Yeah, of course. I think I'll first maybe touch on how we're partnering with IoTeX. IoTeX has been an amazing ecosystem partner and friend of RISC Zero, taking us and hand-holding us through the DePIN journey. We've been collaborating more on IoTeX's W3bstream layer two. And this is really where we get to shine, helping IoTeX and the DePIN builders on top of IoTeX generate ZK proofs for any of the raw data messages that are supported on that layer and then make them verifiable. The way that RISC Zero works is that we're really focused on making ZK as accessible and as performant as possible.

Enhancing Performance and Applicability of ZK Proofs

And a big part of how we do that is through two ways. First, of course, we use, as I mentioned, the RISC-V architecture, which really opens the door for developers using Rust, and folks from a more mature developer ecosystem, to come into ZK. And so now DePIN builders who know Rust don't need to learn Solidity. They can essentially write their guest programs and run them through our ZKVM. As for the way we've been doing it with W3bstream, as I understand it, they essentially fetch the raw data messages from the supported infrastructures and then pass them to our ZKVM to generate the ZK proofs.

Optimization Techniques within ZKVM

How we really make sure that these proofs are performant is through a very specific innovation within the ZKVM known as continuations. Anytime a guest program comes in, we essentially break it down into equal-size segments and then prove them independently, in parallel. We've been optimizing this performance a lot in ZKVM 1.0, and we're able to get our SNARK proving down to around 15 seconds. And compared with other general-purpose ZKVMs, we are proving RISC-V cycles around two times faster as well.
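The split-then-prove-in-parallel idea can be illustrated with a toy model. In the sketch below, an execution trace is a list of small integers, and the "proof" of a segment is just a SHA-256 hash, which is a placeholder and has none of the properties of a real ZK proof. The function names and the trace format are assumptions for this example and are not RISC Zero's API.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor


def split_into_segments(trace: list[int], segment_size: int) -> list[list[int]]:
    """Cut the trace into fixed-size segments (the last one may be shorter)."""
    return [trace[i:i + segment_size] for i in range(0, len(trace), segment_size)]


def prove_segment(segment: list[int]) -> str:
    """Placeholder 'proof': a hash of the segment.
    Values must be in 0..255 because they are packed into bytes."""
    return hashlib.sha256(bytes(segment)).hexdigest()


def prove_in_parallel(trace: list[int], segment_size: int) -> list[str]:
    """Prove every segment independently; map() keeps the segment order."""
    segments = split_into_segments(trace, segment_size)
    with ThreadPoolExecutor() as pool:
        return list(pool.map(prove_segment, segments))


trace = list(range(10))
receipts = prove_in_parallel(trace, segment_size=4)
print(len(receipts), "segment proofs")
```

Because no segment depends on another segment's result, total proving time is bounded by the slowest segment rather than by the length of the whole program, given enough workers.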

Universal Verifier and Its Significance

In addition to ZKVM 1.0, a big piece of what enables us to offer highly performant proofs on top of IoTeX's layer two, and other layer twos, is our universal Groth16 verifier. And, you know, IoTeX has deployed a version of this verifier on their layer two. And this is really enabling folks to access cheap on-chain verification as well. We really want to make these proofs useful. We want folks to be able to use these proofs, and proofs of the derived data insights, to build more scalable applications.

Core Components and the Future of Decentralized Applications

And we want them to have very seamless integration into our ZKVM and our universal ZK stack as well. And that's why we're building out all of these core components, not just our ZKVM, but other pieces such as the verifier libraries and support for multiple languages. All of these things are super important for us so that we can get more DePIN builders to build on top of IoTeX and other DePIN layers and scale their applications as fast as possible. I will say, a really unique feature that we have is our Steel library. This is a new feature that we added with ZKVM 1.0, and it's available to all DePIN builders that are accessing proofs on top of W3bstream.

The Role of the Steel Library in ZK

This enables our ZKVM to actually act as a ZK coprocessor, because you can essentially access a lot of historical on-chain data and then offload a lot of complex calculations to our ZKVM. You can use different functions within that library to add, subtract, multiply. You can generate multiple proofs over multiple historical on-chain data points, compose them together, aggregate them into one single proof, and then post that single proof on chain. And that enables you to access a lot more scalability benefits and really helps not only, you know, L2s scale infinitely, but also a lot of dapps and DePIN dapps that are building on top of IoTeX.

Wrapping Up Insights on Decentralized Data Integration

Amazing. Thanks so much for sharing a little bit about what's going on in the ZK space and how it applies to DePIN. So we covered a lot of the independent modules, from DA to long-term storage, to analytics, to sequencing, and now to ZK. But I want to invite Giuseppe to kind of wrap it all together. How does IoTeX put together all these modules and make them applicable for DePINs to build on?

Yeah, Larry, that's a great point. And, you know, if you've been following so far, each of the other speakers highlighted basically what they bring to the table.

The Infrastructure and Ecosystem of DePIN Applications

And if you think about it, I mean, each component is a project on its own. So for a DePIN builder, building a decentralized application that leverages all of these components, and more, obviously, because we're not covering the entire data pipeline, becomes an enormous undertaking that requires many different skills. So it requires a fairly large dev team. On top of that, it has a pretty steep learning curve and a pretty long go-to-market. So the whole point of putting all this together as IoTeX, and the whole point of this modular infrastructure, was to make the go-to-market much faster, to allow all these components to talk to each other, on top of the ones that IoTeX provides, which I'll talk about in just a second, and to make it much faster, much easier, and much more streamlined for DePIN projects to exist.

Decentralization and Eliminating Single Points of Failure

And again, why? The reason is that when you look at the industry right now, I think almost all, if not all, DePIN projects have, let's say, three main components, right? They have the decentralized network of physical devices. That's one component. Then there is the whole on-chain aspect of the project, where the token usually lives, and maybe there is some sort of token economy or business logic attached to it. And then there is this third huge component, which is normally a centralized infrastructure.

Destined for a Decentralized Future

So we want to move away from that for obvious reasons. And they're the same reasons, you know, why the whole of Web3 exists, right? We want to eliminate single points of failure. We want more transparency. We want more composability, so nobody has to reinvent the wheel every time they want to create a new application. And we want more interoperability, which then opens up a whole set of new use cases for people to build on top of the data coming from all these various DePINs.

Identity and Hardware Abstraction in IoTeX

And so on top of the modules that each speaker was highlighting, and besides the layer-one blockchain, which is the module that I started with back in 2019, I think two components that are majorly important are the identity layer and the hardware abstraction layer. The hardware abstraction layer is the first entry point for data coming from these devices, so it's more horizontal. The identity layer is more vertical.

Decentralized Protocols and Peer-to-Peer Communication

What I mean is that it's a component that touches all of these other modules, because it's essentially a decentralized protocol to manage the identity of devices, the identity of a DePIN project within all these infrastructures, and the identity of the owners of these devices, who get rewarded based on what the device is doing, the changes in the physical state, be it a sensor network or any other sort of DePIN network. And it's quite complex, because it's a decentralized protocol.

Enabling Communication and Functionality through Hardware SDK

So it allows for peer-to-peer communication between all of these players without a centralized entity to orchestrate all of this. And on the hardware side, our hardware SDK is actually the first of its kind that is abstracted enough that it can work with various types of platforms. And that is because it has two layers.

General and Specific Aspects of Hardware Abstraction

One layer is more of a general layer, and it has general aspects that are valid for any platform, be it a Raspberry Pi, an Arduino, ARM, et cetera, and then there are some specific layers.

Introduction to the PAL Layer

This specific layer is called PAL, and it's specific to whatever devices you have in your network. So without getting too much into the weeds, the whole point, just like with the identity layer or the off-chain computing layer, is to create an infrastructure that is specific yet abstracted enough that anybody can participate in it, like Filecoin, Espresso, RISC Zero, Nuffle, et cetera, and to make it easy to orchestrate all of this in a decentralized way.
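The two-layer split described here can be illustrated with a minimal sketch. It is not the IoTeX hardware SDK: the class names (`PlatformLayer`, `FakeRaspberryPi`, `FakeArduino`, `DeviceClient`), the hard-coded readings, and the message format are all hypothetical, chosen only to show a general layer that stays the same while a per-device layer is swapped underneath it.

```python
import hashlib
import json
from abc import ABC, abstractmethod


class PlatformLayer(ABC):
    """Device-specific layer (hypothetical): one implementation per platform."""

    @abstractmethod
    def device_id(self) -> str:
        """Return an identifier for this physical device."""

    @abstractmethod
    def read_sensor(self) -> float:
        """Return one reading using platform-specific I/O."""


class FakeRaspberryPi(PlatformLayer):
    # Stand-in for real Raspberry Pi I/O; values are hard-coded for the sketch.
    def device_id(self) -> str:
        return "rpi-0001"

    def read_sensor(self) -> float:
        return 21.5


class FakeArduino(PlatformLayer):
    # Stand-in for real Arduino I/O; values are hard-coded for the sketch.
    def device_id(self) -> str:
        return "arduino-0001"

    def read_sensor(self) -> float:
        return 19.0


class DeviceClient:
    """General layer (hypothetical): identical logic for every platform."""

    def __init__(self, platform: PlatformLayer) -> None:
        self.platform = platform

    def build_message(self) -> dict:
        # Package a reading with a SHA-256 digest of its canonical JSON form.
        payload = {
            "device": self.platform.device_id(),
            "reading": self.platform.read_sensor(),
        }
        body = json.dumps(payload, sort_keys=True)
        digest = hashlib.sha256(body.encode("utf-8")).hexdigest()
        return {"payload": payload, "digest": digest}


# The same general-layer code runs on top of either platform.
rpi_message = DeviceClient(FakeRaspberryPi()).build_message()
arduino_message = DeviceClient(FakeArduino()).build_message()
```

Supporting a new board in this sketch means adding one more `PlatformLayer` subclass; `DeviceClient` does not change.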

Modular Security Pool (MSP)

And I think one of the coolest aspects is how this is done. It's not done with just magic. It's done through the MSP that we launched when we launched IoTeX 2.0 a few weeks ago. The MSP is the Modular Security Pool. And how does it work? It's basically a pool of assets that secures what we call DIMs, DePIN infrastructure modules. A DIM could be W3bstream, it could be a sequencer network, it could be a DA layer, et cetera. Each of these networks runs on its own consensus.

Security Inheritance through Restaking

In a way, they can inherit the security of IoTeX, and maybe of other major layer ones, through restaking. We are all pretty accustomed to restaking and how it works, but the point is that it becomes a win for validators, for infrastructure providers, and, on the other side of the equation, for DePIN projects. All of a sudden, validators can be rewarded by infrastructure providers. Infrastructure providers have these secured, decentralized networks that they can run infrastructure on. And at the end of the day, these infrastructure providers have a set of, quote unquote, clients, which are the DePIN projects.
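As a rough illustration of the incentive loop described above, here is a minimal sketch of a shared pool in which validators restake toward infrastructure modules and each module's reward is split pro rata. It is not the actual MSP design: the `SecurityPool` class, its method names, the integer amounts, and the pro-rata rule are all assumptions made for the example.

```python
from collections import defaultdict


class SecurityPool:
    """Hypothetical pool of restaked assets securing several modules."""

    def __init__(self) -> None:
        # stake[module][validator] = amount restaked toward that module
        self.stake: dict[str, dict[str, int]] = defaultdict(dict)

    def restake(self, validator: str, module: str, amount: int) -> None:
        if amount <= 0:
            raise ValueError("amount must be positive")
        current = self.stake[module].get(validator, 0)
        self.stake[module][validator] = current + amount

    def security_of(self, module: str) -> int:
        # Total stake backing a module; zero if nobody has restaked to it.
        return sum(self.stake.get(module, {}).values())

    def distribute_reward(self, module: str, reward: int) -> dict[str, int]:
        # Split a reward paid by the module's operator in proportion to stake.
        # Integer division is used, so any remainder stays undistributed.
        total = self.security_of(module)
        if total == 0:
            return {}
        return {
            validator: reward * amount // total
            for validator, amount in self.stake[module].items()
        }


pool = SecurityPool()
pool.restake("validator-a", "sequencer", 300)
pool.restake("validator-b", "sequencer", 100)
pool.restake("validator-a", "da-layer", 50)
payouts = pool.distribute_reward("sequencer", 1000)
```

In this toy model the sequencer module is backed by 400 units of stake, and a reward of 1000 is split 750 to 250 between the two validators.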

Decentralized Service Execution

And the DePIN projects finally have somebody that can run whatever service they need in a decentralized way. I will stop here because I think I covered a lot, but the whole point of this is then to open up. Larry, you mention it often: all of this is not really the goal. It's a means to an end. The next step, I think, is interoperability. And the step after that is when anybody can build on top of these DePIN networks, on top of the data from all these DePIN networks, and all of a sudden we start seeing applications that aggregate all this data. That can only be done in a DePIN sense, where you can get a lot more granular, a lot more specific, and anybody that participates in these networks gets rewarded.

Future of DePIN Technology

And I see that as a huge benefit of DePIN, and that's where I see it going and how I see it becoming larger and larger.

Absolutely. Yeah, I agree, Giuseppe, that a modular system of decentralized components is a kind of system design. Verification across all layers of the DePIN devices and the DePIN data is the goal, or the output, of that modular system design. And the opportunities that stem from a verified set of data and a verified set of devices are truly endless.

Analytics and Interoperability

We're talking about running analytics on top of the data, interoperating the devices, and aggregating resources across different DePINs. It really requires us to follow this decentralized and verifiable approach. I heard a lot of people mention decentralization and verification, so I want to double-click into that and hear from each of the speakers: what is the real need for decentralization and verification within your own niche, and how do you think that applies to DePIN in the future? Maybe we'll go in the same order as last time, starting with Jill.

Decentralization and Censorship Resistance

Yeah, sure. Well, I think the need for decentralization always comes down to two things. One is censorship resistance. To some degree, that is what this whole industry is founded upon, right? If you can trust a centralized intermediary, then you're probably going to be better off doing so, from a performance perspective at least. And then the corollary to censorship resistance, or credible neutrality, I would say, is just how open your system is for others to work with.

Walled Gardens and Centralized Control

There's this idea of walled gardens that exists in Web2. The Apple App Store is a great example of a walled garden: not very interoperable with other systems. There's, again, a centralized intermediary, okay, maybe not censoring what's going on in the App Store, but at a minimum, with that kind of centralized control, curating it. And that makes the App Store, again, not very interoperable, really not playing nice with a lot of other systems.

Challenges in Crypto Applications

A lot of crypto apps have experienced this as they've tried to get listed on the App Store. It can be a huge struggle. And so that, to me, is what the value prop of decentralization comes down to, whether we're talking about it in the context of a DePIN system or specifically in the context of the sequencing component of DePIN chains and rollups in general: again, credible neutrality or censorship resistance, and then the openness of the system. That really is our goal at Espresso Systems: to create more open systems through a credibly neutral layer.

Importance of Decentralization

That's why it's important to us to have our system be decentralized, and that's why it's important to us to bring decentralization of the sequencing layer to rollups and chains in Ethereum and beyond.

Incredibly well said. Thank you for that. Alton, what do you think from the DA side about decentralization and verification? How do you guys approach that, and why is it important?

Yeah, it's a good question.

Centralized Data Storage Risks

So, as you all know, you can actually store your sequence in a very centralized manner. You can store your rollup sequence on an AWS node. But the downside is that when you ask that AWS node for the data, it might just not serve it to you. That's the most important thing about data availability being decentralized: when you ask the nodes, in our case NEAR nodes, to provide that data, you get something back.
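The availability argument above can be sketched as follows: ask several independent nodes for the same blob and accept the first response that matches a known commitment, so that one withholding or tampering node cannot block the read. This is an illustration only, not NEAR DA's API. The `fetch_available` function, the in-memory "nodes", and the use of a plain SHA-256 hash as the commitment are assumptions made for the example.

```python
import hashlib
from typing import Callable, Iterable, Optional

# A node is modelled as a function: commitment -> blob bytes, or None if it
# refuses or fails to serve the data (hypothetical interface).
Node = Callable[[str], Optional[bytes]]


def fetch_available(commitment: str, nodes: Iterable[Node]) -> Optional[bytes]:
    """Return the first blob whose SHA-256 hex digest equals the commitment."""
    for node in nodes:
        try:
            data = node(commitment)
        except Exception:
            # Treat a crashing or unreachable node like a withholding one.
            continue
        if data is not None and hashlib.sha256(data).hexdigest() == commitment:
            return data
    return None


blob = b"rollup batch #42"
blob_commitment = hashlib.sha256(blob).hexdigest()


def withholding_node(commitment: str) -> Optional[bytes]:
    return None  # has the data but does not serve it


def tampering_node(commitment: str) -> Optional[bytes]:
    return b"something else"  # serves data that fails the hash check


def honest_node(commitment: str) -> Optional[bytes]:
    return blob if commitment == blob_commitment else None


recovered = fetch_available(
    blob_commitment, [withholding_node, tampering_node, honest_node]
)
centralized_only = fetch_available(blob_commitment, [withholding_node])
```

With a single withholding node the read fails, while adding independent nodes lets the same request succeed as long as one of them is honest.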

Importance of Data Availability

So that's the reason why we use NEAR for this. NEAR is a network that's been running for more than four years. It has strong, quote unquote, crypto-economic security, meaning there are a lot of stakers that commit to running validators, and we literally tap into NEAR's validators to do so. So that's mainly what we care about in terms of decentralization. And we believe that DA on its own could be decentralized not only with NEAR DA, but also with other DA providers as well.

Maximizing Decentralization

So a rollup would not rely on only one service, but eventually on a bunch of different DAs at the same time, just to make sure that you max out your decentralization.

Absolutely. Permissionless access to the data is definitely a prerequisite for the data being available at all times, so it makes total sense. Stefan, from Akave's perspective, what do you think are the main drivers of your designs around decentralization and verification for data storage and for data analytics?

User Control and Transparency

Yeah, in general, what is really important, outside of the other topics that were mentioned already, is the ability to control your data. For us, we're very much centered around data integrity. Filecoin obviously has all the primitives built in to ensure that data integrity is maintained over time, but there is also the ability to control, from a user's perspective, who has access to that data.

Control and Transparency in Data Management

So it's not just guaranteeing that the data was stored in the format it was intended to be, but also that you can control who has access to it and how you share it. So there's this notion of control, and then there's this notion of transparency. Again, it was mentioned earlier, but for data in particular, the goal is to have end-to-end transparency. As data gets moved across multiple providers in a decentralized network, you as a user not only have control over where that data lands, how it's managed, and how it's protected, but you also have full on-chain proof and guarantees that it's stored in an immutable and consistent way.

Decentralization and Control of Data

And so you have control across the stack, meaning across the different providers in the network, and you're not stuck with one single vendor. Typically, what we're seeing when users come to us is that their pain points with Amazon, Google, Microsoft, or any of the more centralized cloud providers are that they're stuck with high egress costs when they want to use the data or pull it back, or that they're stuck with the offerings those providers have for all their data sets.

Monetizing Data Assets

While in fact no two data sets are the same and no two have the same value. Data is the core IP of an organization in the end, or that's where we're seeing the market going: data is a much more valuable asset than we used to think, and now we can actually monetize it. Decentralization comes in handy because we can demonstrate integrity and give control back to the user. That seems to be one of the biggest asks when users come to us, specifically when they're looking at building new workloads and new proofs of concept that support the new LLMs being deployed to find insights in their data sets.

Emerging Urgency of Data Control

And then the customers are starting to realize that hosting those data sets with central entities is not a safe bet. It is a bet, but in the long run there are better ways now to protect and monetize the data. And we're now actually seeing users come to that insight, even in the enterprise space, though more in the emerging verticals. In general, it's a big change from where we were last year, when that was still a question.

Urgent Need for Decentralization

Now it's actually more urgent, because if you don't do it as a business, if you're not trying to monetize your data sets, your competitors will. So it's really just a race. This is where we see the need for decentralization: to ensure that data integrity and control are maintained across these new workloads.

Absolutely. It reminds me of the mantra that you can't control what you don't own, right?

Benefits of Decentralization

So that's another benefit of decentralization, as Stefan put it: control over your assets in the form of data. Let's go to Mosh to talk a little bit about what RISC Zero prioritizes as far as decentralization and verification. I know verification is baked into the ethos of ZK, but why is decentralization important for RISC Zero?

Yeah, absolutely.

Guiding Pillar for RISC Zero

Decentralization has been our guiding pillar for this year, I would even say. In the beginning, I think a lot of the sentiment was: yeah, it's nice to have, but we first need to get these proofs working and accessible. Now it's really become a must-have. And it's particularly important because when we started out as RISC Zero, our first production-ready version of the zkVM was centralized.

The Need for Decentralized Proving

And right now we still have this offering up, which is known as Bonsai. It's our centralized proving-as-a-service. It's available to a lot of folks on the commercial side that really want a trusted entity generating the proofs they need. And it really is helpful in many ways, because you are able to request production-ready, highly performant proofs without running any of the software on your machine; you can essentially just give that to us.

Trustless and Censorship-Resistant Proofs

But what we've really seen in the ZK space is that, in addition to the need for generating and verifying ZK proofs to be trustless and censorship resistant, there's also a big importance on liveness. As we speak to a lot of folks and a lot of our partners, making sure that our proving service has very strong liveness guarantees, and that the network is going to stay operational and reliable regardless of who is requesting proofs, is super important to them.

Ownership of Data in ZK

I'd also like to echo what Stefan said: having ownership of your own data is super important. And I think that's a big pillar of ZK as well. It's really a pathway to having more self-sovereign data. And that's also the reason why I got into this space.

Client-Side Proving and Decentralization

As we're speaking to a lot of partners, it's also becoming abundantly clear that enabling client-side proving is going to be absolutely key. We are working very hard to make that happen around Q4 and Q1 of next year. And we're working with a lot of partners who themselves are pushing the agenda forward in allowing folks to generate proofs on their phones, on their machines, so that we can uphold that pillar of folks being able to own their own data and reduce any other forms of centralization risk.

Building a Decentralized Prover Network

A big piece of ZK is that this year we've really seen an inflection point with general-purpose zkVMs. As we've seen the rise of new zkVMs coming into the market, and new zkVMs that use the RISC-V architecture, our hypothesis, and why we bet on developing a RISC-V general-purpose zkVM, has been supremely validated. But I still think there is a huge hurdle when it comes to implementation.

Access to ZK and Modular Proofs

We're really doing our best to make access to ZK feasible and seamless for developers, but there are still a lot of barriers. A lot of developers have to rewrite their programs in Rust to be able to access the benefits of our zkVM. There are, of course, latency improvements that we are continuing to make. We want to keep costs as low as possible, both the cost of generating proofs and the cost of verifying proofs.

Democratizing Proof Generation

And we really want to be the purveyors and the pioneers of opening up what the proving marketplace should look like, keeping it modular and not bound to any specific chain or rollup. And so this is a pretty exciting announcement: we're actually looking into building out a decentralized prover network. The way we're thinking about it is that it's going to be a very modular design, a set of smart contracts that could be deployed to any rollup or any chain.

Future Directions for RISC Zero

And we really want to democratize access to proof generation and be a place for other provers to come in, where they can have access to novel bidding strategies and also generate proofs and meet the demand for highly performant proofs when there are heavy workloads. All of that combined is really why going down the decentralized route, and making sure that this next iteration of RISC Zero is decentralized from the get-go, is super important.
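The talk does not say how bidding in such a proving marketplace would work, so the following is only one possible shape, not RISC Zero's design: a simple reverse auction in which provers bid a price and an estimated completion time, and the cheapest bid that meets the requester's limits wins. The `Bid` type, the `select_bid` function, and the tie-breaking rule are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Bid:
    prover: str       # hypothetical prover identifier
    price: int        # asking price, in the smallest unit of some token
    eta_seconds: int  # promised time to deliver the proof


def select_bid(
    bids: Iterable[Bid], max_price: int, deadline_seconds: int
) -> Optional[Bid]:
    """Pick the cheapest bid that respects the price cap and the deadline."""
    eligible = [
        bid
        for bid in bids
        if bid.price <= max_price and bid.eta_seconds <= deadline_seconds
    ]
    if not eligible:
        return None
    # Ties on price go to the faster prover, then to the name for determinism.
    return min(eligible, key=lambda b: (b.price, b.eta_seconds, b.prover))


bids = [
    Bid(prover="prover-a", price=120, eta_seconds=30),
    Bid(prover="prover-b", price=90, eta_seconds=400),  # cheap but too slow
    Bid(prover="prover-c", price=100, eta_seconds=45),
    Bid(prover="prover-d", price=100, eta_seconds=20),
]
winner = select_bid(bids, max_price=150, deadline_seconds=60)
```

Here `prover-d` wins: `prover-b` is cheaper but misses the 60-second deadline, and `prover-d` beats `prover-c` on delivery time at the same price.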

Decentralization and Its Benefits

So yeah, I hope that explains how we're going down the decentralization route. And of course, all of the benefits of our prover network will have a ripple effect on a lot of our partners, such as IoTeX and other partners in the DePIN space.

Awesome.

Insights on DePIN Decentralization

Thanks for giving a peek into the world of ZK and how it's decentralizing over time. I want to go to Giuseppe. We talked a lot about how the different decentralized layers are creating technologies that accomplish specific goals, but all of these things contribute to the larger goal of powering DePIN. From your perspective, how do you think DePIN is going to decentralize and become more verifiable over time, starting now?

Yeah, 100%.

The Importance of Verifiability in DePIN

You guys all talked about this on the infrastructure level, and I agree with all these points. But if we switch to DePIN: I believe, first of all, that DePIN applications are a special type. There is a financial incentive tied to whatever physical action, whatever physical change of state, it is that you're trying to prove. So that has to be verifiable to a degree that lets you orchestrate rewards accordingly.

Transparency and Trust in the DePIN Network

Now, from the end user's perspective, I think if we don't get to a point where there is a trustless system to orchestrate this, and a trustless way of proving the business logic that goes behind the rewards in a DePIN network, then we haven't gone anywhere as an industry. I want transparency when DePIN application X is deciding what the reward should be for my contribution to the network. Otherwise it's the same as a Web2 scenario. And in the same way, I don't want a centralized orchestrator, like Jill was saying earlier, to sequence the data or package the data in a way that can favor one player over another.

The Essential Nature of Decentralization

And so I don't see decentralization as a nice-to-have in the DePIN industry anymore. It was, quote unquote, okay for the first wave. But right now I think it's totally a must-have. And there's going to be more and more pressure from the industry and from communities for applications to start decentralizing. And the largest benefit in DePIN, I think, is that once you open up to decentralization, that brings DePIN interoperability.

The Importance of Building in an Interoperable Manner

As we said earlier, Larry. And why is that important? For a number of reasons. One, like I was hinting at earlier, I don't have to build in silos, and that is really important both for builders and for the space as a whole. Number two, all the use cases that open up thanks to decentralization are all of a sudden possible. And number three, which is very specific to DePIN, and which I find less technical and more socioeconomic: we are seeing, for example, that DePINs are thriving where general centralized infrastructure is starting to fail its, quote unquote, clients or customers. So what does that mean?

Value Proposition for Under-Served Areas

It means that we have some projects in the IoTeX ecosystem that are based in Africa, in areas that are generally less served in terms of infrastructure, where services are expensive, maybe because they're fossil-fuel based, and there is not really an incentive for any centralized company to go there and run, say, a cellular network. DePIN, on the other hand, can, because first of all it boosts the local economy, and second of all, local people earn. So where a central infrastructure provider doesn't see any value in going into a less-served area, there is a ton of value for the local people. And we're seeing the same thing with a ton of our projects that are based in Latin America, Southeast Asia, et cetera.

Excitement for Future DePIN Developments

Yeah, I tried to cover a little bit of tech, a little bit of the socioeconomic side, and a little bit of the ethos of DePIN as a whole. But I think those, for me, are the main reasons.

Amazing. Thanks for that, Giuseppe. I really agree that decentralization and verification for DePIN are what's going to bring it to the next level.

Next Steps in Decentralization

DePIN has the word decentralization in it, but I think we're just on step one towards it. And all the amazing speakers that were part of our space today are definitely helping DePIN become more decentralized, more verifiable, and therefore attract more utility to these networks. I know we're a little bit over time, but I want to give all of our speakers one chance to share any announcements, what's coming up, and maybe something they're excited about in the DePIN space for the rest of the year.

Looking Forward: DePIN Space Announcements

So I think that Jill from Espresso had to drop. If you're listening and you want to jump back on stage, shoot me a request here. But let's start with Alton. What's going on with Nuffle for the rest of the year? And what are you excited about in DePIN?

Yeah. The one thing I'm excited about, maybe I'll give you the example of Witness Chain and verifiable compute.

Innovations in DePIN's Ecosystem

I think the next thing we'll see is this: we've built a lot of blockchains, but I believe a world that's restaked can do a lot of the cool things that DePIN can do. So I believe in that world, and we've already started building another primitive that essentially aggregates all restaking. There's EigenLayer, obviously, there's now Babylon, and there's a bunch of stuff launching on Sol. So internally we're thinking about pretty much a thin layer on top of restaking platforms across different blockchains.

Future Developments with Witness Chain

So that's where we see Nuffle going. And different applications can use that to verify pretty much anything using restaked assets.

Very cool. Yeah, more security at lower levels of the stack.

Excitement for Decentralized Compute

Definitely going to propel the industry forward. Stefan, you want to go next? What's going on with Akave, and what are you excited about in DePIN for the rest of the year?

Yeah, well, we're looking to release our testnet later this year, so we're excited for that, as we have a ton of decentralized compute partnerships.

Adoption of Decentralized Compute

So what we're seeing is definitely an adoption of decentralized compute, which is driving the need for data verifiability. We're very excited to enable these new workloads, because last year we saw some early interest in decentralized compute, and this year there's a massive number of stacks or networks popping up. And if you look at the AI workloads, we're definitely seeing a transition from just doing inference to now fine-tuning.

Future of Decentralization in AI

And next will be training LLMs in a decentralized way. We're already seeing some solutions popping up, and all of them are very focused on verifiability. So we're excited to see some of these come to life, obviously with Akave being the data layer for that and Filecoin the final repository. So yeah, we're seeing a lot of great movement in the decentralized AI ecosystem, let's call it, because I think that is driving all of our DePIN architectures together now.

Ready for Future Events

So we're excited to see that come to fruition. And then we'll be at Devcon in Thailand, so I'm personally excited to see a lot of partners again.

Awesome, thanks for that. Yeah, decentralized compute is definitely taking over, and we're excited to see what Akave is going to put out for the rest of the year.

Upcoming Innovations from RISC Zero

Mosh, you shared a lot about the product suite of RISC Zero, but share a little bit more about what we can look forward to this coming year and what you're excited about in DePIN.

Yeah, I don't want to give away too much, otherwise my marketing manager will kill me. But what I can say is that we are going full steam down the decentralized route, and we're building out the initial designs of our prover network.

Development and Community Engagement

We will be doing a permissioned devnet with just a handful of partners, but at the same time we're still supporting a lot of partners with integrations with our zkVM 1.0. One of the things I'm super excited about is folks building more dapps, in DeFi, in gaming, in the identity space, that want to leverage the interoperability benefits of ZK. If that's you, please DM me.

Invitation to Engage

Please reach out to me. I'd love to talk to you. We really want to build out an ecosystem of dapps that leverage our ZK coprocessor, our Steel library, and our universal verifier too, so that they can deploy their application to any chain and reap the benefits of cross-chain compatibility. I really want to see that part of the ecosystem thrive. If you're building in that arena, please reach out to me.

Future Goals for the Prover Network

If you are interested in learning more about our prover network, about our permissioned devnet, and how we're thinking about it, please reach out to me. My DMs are usually open, and if they're not, I will open them. But yeah, that's our goal for the next few quarters: doing different iterations of our prover network, seeing what the market thinks, and seeing how we can improve our developer experience. Seamless integration and ease of deployment are top of mind. Then we'll take that feedback in so that we can build out our testnet and eventually our mainnet.

Community Meetups and Events

We are also planning on hosting a few local meetups in New York and SF, so please keep a lookout for that. I'd love to meet everyone in person. I believe a big part of the team is going to Token2049, and I'll also be at Devcon. So we will keep the community posted on our new developments and optimizations, so that we can make ZK proving accessible, affordable, and fast.

Excitement for Industry Progress

Awesome. Thank you, Mosh. And finally, Giuseppe, close us out today with some thoughts about what IoTeX is going to be up to for the rest of the year and what you're most excited about in DePIN.

Sure, let's see. What I'm most excited about, first of all, is to have been working, and to continue to work, with all of you guys to make this infrastructure reach so many more exciting DePIN projects.

Collaboration and Ecosystem Growth

I'm excited for the projects that are building in our ecosystem, but at the same time I'm also excited for DePIN convergence: I want to see more ecosystems coming together, regardless of whether you're on Solana, on Ethereum, or on IoTeX. I just want to see applications starting to work multi-chain, and I want to see more and more DePIN action.

Future DePIN Applications

Maybe, Larry, a counter-question to you, since I think as head of BD you would be the best person to say: what are you excited about?

Thanks for that opportunity, Giuseppe. Yeah, I think DePIN is at a really interesting crossroads right now, where we're evolving out of the baby steps of just standing up the supply side of a network, and we're seeing several DePINs show what the right way to build sustainable demand is going to look like.

Verifiability and Demand for DePIN

I think the things that are going to unlock the next levels of demand are really around verifiability of what's going on in these DePINs, right? Unlike other industries within crypto, such as DeFi, where everything happens on chain and is therefore pretty much verifiable if the L1 they launch on is trustworthy, so much in the DePIN sector happens off chain and therefore needs to be verified before large enterprise customers or consumers pay for the services, the data, and the resources from these DePINs.

Challenges in the DePIN Sector

Unfortunately, we've also had a couple of hiccups along the way, where unverified DePINs were putting out very large numbers and damaged the brand, and the demand for these DePINs, a bit. But IoTeX's big goal for the next year is to really lean in and work on influential use cases around the verification of DePINs: not only issuing verifiable on-chain identities to devices, but verifying the data coming from these devices, verifying the proofs that stem from that data, and ultimately providing a trusted form of utility that I think will propel DePINs to the next level.

Vision for a Multi-Blockchain Approach

So this is not just for DePINs that are launching on IoTeX. We really want to be a service provider to DePINs across all blockchains, L1s and L2s, to verify DePIN and help bring the industry to the next level. So you're going to hear a lot more from the IoTeX team about our new initiatives and new partners around verifiability, which include, of course, our amazing speakers today.

Continuing Research and Definition of DePIN

But for those who want to get more in touch with today's topic, which was the modularity of the DePIN sector, I really encourage everyone to check out our IoTeX 2.0 white paper and some of our other research papers, where we define what each of these layers is meant to do in relation to DePIN and how these layers and modules are going to evolve over time. So with that, hopefully that was enough of a teaser for what's going on through the end of the year.

Gratitude and Future Engagement

I really want to thank all of our speakers for joining this incredible space, and we really look forward to building the future of DePIN with you guys. Hope everyone has a great weekend, and we'll be back next week with more spaces. Thanks, everybody.

Thank you, guys.