Space Summary

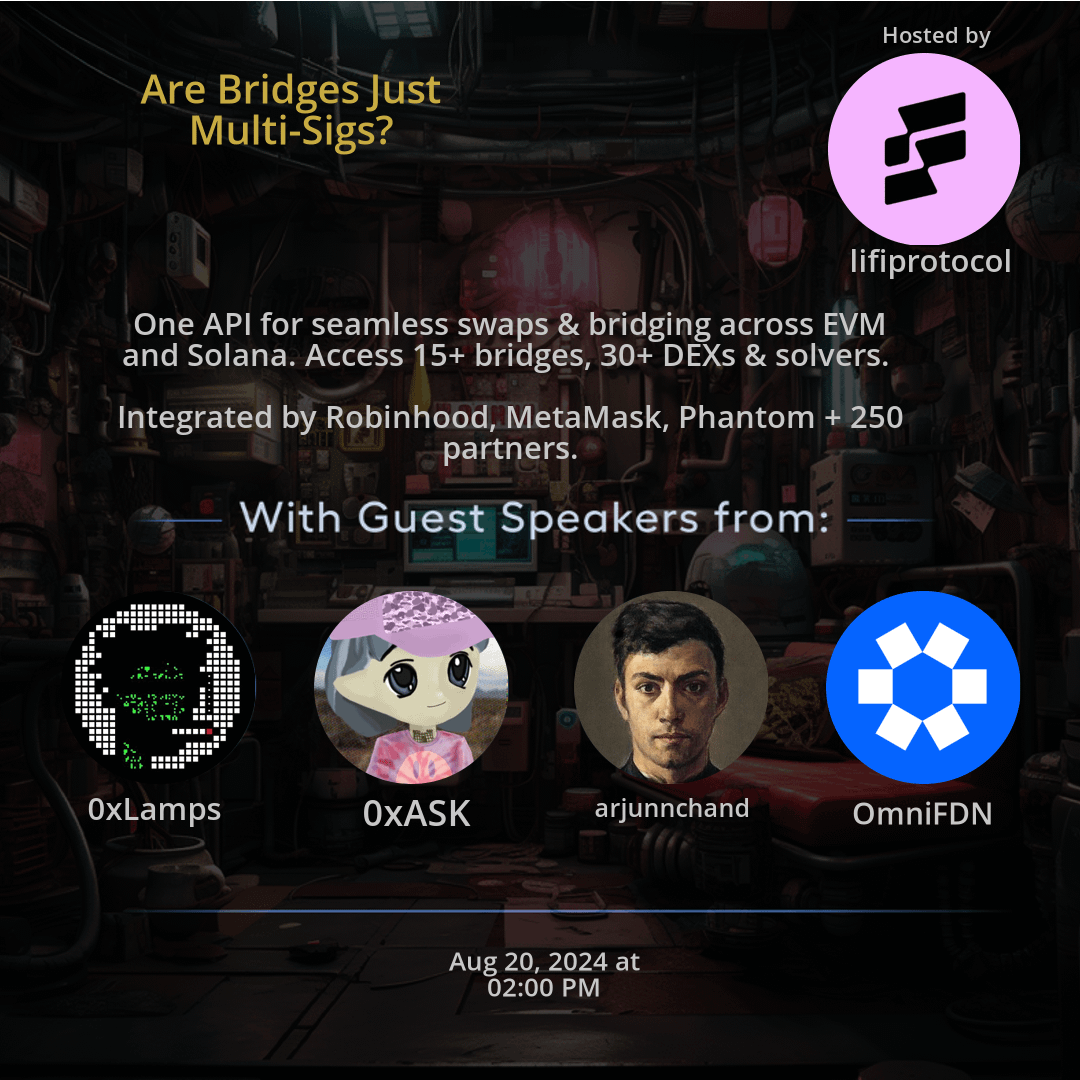

The Twitter Space 'Are Bridges Just Multi-Sigs?' was hosted by lifiprotocol. The space delves into the world of bridges and multi-signatures, focusing on their roles in enabling swaps and bridging activities between the EVM and Solana ecosystems. With a vast array of bridges, DEXs, and solvers available, users benefit from a comprehensive ecosystem that emphasizes efficiency, security, and accessibility. Through integrations with prominent platforms like Robinhood and MetaMask, the space showcases a commitment to broad adoption and usability, while partnerships with Phantom and over 250 collaborators contribute to a thriving trading environment. Overall, the space underscores the significance of bridging technologies and multi-signature security in facilitating smooth interactions within the DEX niche.


Questions

Q: How do bridges enhance blockchain interoperability?

A: Bridges facilitate seamless transfers of assets across different blockchain networks, promoting interoperability.

Q: What security benefits do multi-signature wallets offer?

A: Multi-signature wallets provide enhanced security by requiring multiple approvals for transactions, reducing the risk of unauthorized access.

Q: Why is integration with platforms like Robinhood and MetaMask significant?

A: Integration with major platforms signals broader adoption and usability, expanding the reach of the space.

Q: What is the primary focus of the API discussed in the space?

A: The API prioritizes efficiency and accessibility, offering user-friendly solutions for swaps and bridging activities.

Q: How does the partnership with Phantom and 250+ partners contribute to the ecosystem?

A: The partnership underscores a robust ecosystem supporting the space, providing users with a diverse and comprehensive trading environment.

Highlights

Time: 00:15:45

Bridging EVM and Solana: Exploring the seamless asset transfers between EVM and Solana facilitated by bridges.

Time: 00:25:22

Multi-Signature Security: Discussing the enhanced security measures of multi-signature wallets in the context of transactions.

Time: 00:35:10

Integration with Major Platforms: Highlighting the importance of collaborations with major platforms like Robinhood and MetaMask.

Time: 00:45:55

Diverse Offerings and Partnerships: Exploring the extensive range of bridges, DEXs, and partners within the space.

Time: 00:55:30

User-Friendly Solutions: Emphasizing the user-centric approach of the space by providing accessible solutions for swaps and trading activities.

Key Takeaways

- Bridges play a vital role in connecting different blockchain ecosystems for seamless asset transfers.

- Multi-signature technology enhances security by requiring multiple approvals for transactions.

- The space provides access to a diverse range of bridges, DEXs, and solvers for users' convenience.

- Integration with renowned platforms like Robinhood and MetaMask indicates widespread adoption and usability.

- Interoperability is important for enabling fluid interactions between EVM and Solana networks.

- Multi-signature wallets offer enhanced protection and risk management.

- Efficiency and accessibility are the focus of the API, offering user-friendly solutions for swaps and bridging activities.

- The partnership with Phantom and 250+ partners signifies a robust ecosystem supporting the space.

- Diverse offerings with over 15 bridges and 30+ DEXs present users with a wide array of options for trading and asset management.

- The space emphasizes ease of use and convenience by providing a centralized solution for interacting with various platforms.

Behind the Mic

Initial Greetings

Hey, I can hear you loud and clear. Okay, perfect. Hi, Philip. Hi, Philip. I'll just message the Omni team. Awesome. So we'll just wait for Austin to join. In the meantime, Matisse, you can probably post it on Discord. Okay, cool.

Waiting for Guest Speaker

For everyone who is here, we're just waiting for Austin to join. He's the guest speaker and also the co-founder of Omni. And then we'll start in a few minutes. Okay, I see him in the crowd now. Hey, Austin, can you check your mic?

Checking In

Yes, sir. Yes. Perfect, perfect. How are you doing? Doing well. How are you doing? All good. It's nice to finally actually talk to you. This is the first time we're talking. Yeah, it's funny, I feel like this happens to me a lot where I chat with people a ton over Twitter and Telegram, and then it's a while before I actually, like, hear their voice.

Setting Up the Conversation

So, yeah, good to be chatting with you live for the first time, man. Yeah, likewise. So we'll just get started with this space. I think we have almost 20 people in the crowd. So, yeah, just to set it up, last week there was a Twitter post that went viral, and it effectively called out the security of messaging protocols.

Discussing Messaging Protocols

The post basically argued that some of the messaging protocols are kind of putting billions of dollars of user funds at risk. So I thought that I would chat with you, since you're building a messaging protocol yourself, and just get your thoughts on the current standard of security in bridges, and, if you saw the post, any thoughts you have around it. Yeah, yeah. This is definitely a spicy conversation point, because objectively, the reality is that a vast majority of the value being passed around through these bridge and messaging systems is being passed around with very brittle security properties.

Risks of Current Messaging Systems

So if anybody in the crowd is a big advocate or community member of other protocols that are focused on interoperability, I'm probably going to have some spicy things to say here, but I don't think anything I'm going to say is inaccurate. I think it's just kind of this odd reality that we've walked into. At least in my personal experience, I've been working on interop specifically since, like, 2017, and this used to be a huge point of focus for people: the actual security properties of interop systems.

Changing Focus on Security

Back in kind of the 2017 to 2021 era, I think people had, like, a huge fixation and focus on actually doing this in a secure way. I honestly think it's kind of changed, though. I think user preferences have shifted; users don't really care as much these days. I think you can zoom out and say, well, okay, that's probably because we haven't had a massive, like, multi-hundred-million-dollar hack in the last couple of years, so people who are using crypto now haven't really seen how bad it gets. But I think we have seen a very massive proliferation of insecure messaging protocols come about.

Emergence of Insecure Protocols

And this is largely, in my opinion, downstream of the proliferation of execution platforms, so, like, rollups and stuff. And it is hard to securely connect all these things with just, like, very high security properties. So the landscape has changed. But I also think we have a new set of users who just haven't seen how you can just have your money evaporate because of this, like, behind-the-scenes messaging protocol that you didn't necessarily know was even in play. Like, you don't even necessarily need to have used a specific bridge to get rugged by it, just because a lot of these assets are kind of secured implicitly by them.

High Level Perspective on Risks

So, I mean, that's kind of my high-level perspective. Happy to dive into specific things here, but yeah, I do think it is a pretty risky path that we are on today. I mean, so just to, like, summarize, are you saying that bridges are actually multisigs, and that's the reality and the current state of bridging?

Bridges and Multisigs

I would, you know, I'm not going to say bridges at large, but I would say a vast majority of the largest bridges are definitively multisigs. Like, from just an empirical perspective, Wormhole, for example, is a multisig. That is simply the case. There are, I believe, 19 nodes. Maybe they've increased the nodes a bit. But what we saw was that originally people were using multisig bridges, and Axelar, I believe, was the first to at least add crypto-economic security on top of this kind of multisig arrangement.

Security Measures in Bridges

Wormhole never shipped that, even to this day. And they have had a multi-hundred-million-dollar hack, which was actually at the smart contract level. So they as a project have faced what it looks like when you have a nine-figure hack. But they're still a multisig today. And so I'm not going to say bridges at large are multisigs, but if you ask me about a specific bridge, Wormhole in this example, for sure it is a multisig.
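To make the "multisig" label concrete, here is a minimal sketch of the quorum check such a bridge relies on: a message is accepted once enough distinct nodes from a fixed set have attested to it. The names (`Attestation`, `quorum_reached`, the `node-N` identifiers) are hypothetical, signatures are stood in for by plain identifiers rather than real cryptography, and the 13-of-19 quorum is an assumed value used only for illustration; the conversation only mentions roughly 19 nodes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Attestation:
    signer: str        # identifier of the node that signed
    message_hash: str  # hash of the cross-chain message being attested


def quorum_reached(attestations, signer_set, threshold, message_hash):
    """True if at least `threshold` distinct known signers attested to this message."""
    distinct_signers = {
        a.signer
        for a in attestations
        if a.signer in signer_set and a.message_hash == message_hash
    }
    return len(distinct_signers) >= threshold


# Hypothetical 19-node signer set; the 13-of-19 quorum is an assumed value.
SIGNERS = frozenset(f"node-{i}" for i in range(19))
THRESHOLD = 13

signed = [Attestation(f"node-{i}", "0xabc") for i in range(13)]
print(quorum_reached(signed, SIGNERS, THRESHOLD, "0xabc"))       # True
print(quorum_reached(signed[:12], SIGNERS, THRESHOLD, "0xabc"))  # False
```

Nothing in this check penalizes a signer for attesting to a false message, which is the gap the staking discussion is about.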

Defining Multisigs

Okay. And also, just so that we get the semantics, how would you describe a multisig? Because, I mean, at the end of the day, anything that has validators that sign on things, that's technically a multisig. Like how you explained Axelar: in a way that's also a multisig, but they have 75 validators, they have crypto-economic security, they have quadratic voting. So maybe just define multisigs for the listeners.

Understanding Multisig Definitions

Yeah, yeah. So, like, with that definition, you could technically say Ethereum is a multisig. Like, at the end of the day, we just have nodes that are all coming to consensus with one another. One of the most basic and important things you can do on top of that is make it so you aren't just trusting what people say. You actually make it in their rational interest to tell the truth. And one of the best ways to do this is make them stake some capital, and if they lie, they lose all their money.

Incentives and Security

And so Wormhole does not do this today. But, for example, Axelar introduced this model, where in order to participate in this node arrangement or in the network, you do have to stake capital. And if you attempt to misrepresent reality, there's a high risk that your capital is going to get taken from you. And so this kind of brings us back to the incentive design of the space. Like, all these crypto systems are built so that you don't have to trust the other people in the network.

Driving Rational Interests

We design these mechanisms so that it is in people's rational self-interest to drive things towards an outcome that we want. In the case of bridges and interop, ideally, that would be telling the truth about what things look like on other chains. So Axelar created this system where the nodes have to stake capital, and if they lie, they're going to lose that capital. So it's in their rational self-interest to tell the truth. Wormhole, for example, does not have that.
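The incentive argument here can be written as a one-line inequality: lying is only rational when the value that can be extracted exceeds the stake the liar expects to lose. The sketch below is an illustration of that reasoning only; the function names, the `detection_probability` parameter, and all dollar figures are assumptions, not numbers from any protocol.

```python
def attack_profit(extractable_value, slashable_stake, detection_probability=1.0):
    """Expected profit from misreporting: value taken minus expected stake lost."""
    return extractable_value - detection_probability * slashable_stake


def is_attack_rational(extractable_value, slashable_stake, detection_probability=1.0):
    """A purely profit-driven signer set lies only if the expected profit is positive."""
    return attack_profit(extractable_value, slashable_stake, detection_probability) > 0


# Hypothetical figures, in dollars.
print(is_attack_rational(100_000_000, 0))            # True: nothing at stake to lose
print(is_attack_rational(100_000_000, 150_000_000))  # False: stake outweighs the prize
```

With zero slashable stake the inequality always favors the attack, which is the point being made about signer sets that put up no capital.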

Empirical Perspectives on Security

And to be clear, I don't have specific beef with Wormhole. I actually, like, know a number of people on the team, and I like all of them. But I'm just talking from, like, an empirical engineering perspective here: Wormhole definitely has far more brittle security properties than a number of these other networks like Axelar. Got it.

Reputation and Economic Security

So I think, I don't know. So whenever we've discussed, like, Wormhole security. So, like you said, there are 19 guardians, right? And the argument is that these are specialized validators, like public entities that kind of specialize in running validators, and they have their reputation at stake, so there's actually no incentive for them to rug users, and they're very good at their job. So do you think that's actually enough disincentive for these validators, or is this just, like, another meme?

Integrity and Incentives

Like, I don't know, some people say economic security is a meme. Is reputation among proof-of-authority validator sets also a meme? I mean, I just think that's simply dishonest. The claim, like you just said, is that because there is a reputation system here, there is no incentive to not tell the truth. There is, and it's hundreds of millions of dollars: they can misrepresent the reality of different chains and they can extract hundreds of millions of dollars from the system.

Motivations Behind Actions

So, you know, no matter how much you care about your system or your reputation, $100 million is $100 million. So there's actually massive interest or incentive to misrepresent reality if you are a participant in that network.

Introduction to LayerZero and Security Models

I see that we also have Lamps from Stargate here. We haven't talked about LayerZero yet. They kind of have this interesting model where, I mean, effectively it was just an oracle system, but they've kind of made it pluggable, with dynamic security properties. So different corridors, like from chain A to chain B, will have different security properties. I think you can actually have multiple routes from chain A to chain B, because people can choose the different verifier networks. So LayerZero is another protocol that has designed things in a kind of spectrum-type way. And the lowest end of that spectrum, I think, is just, like, a Google Cloud server. And the highest end of that security spectrum, actually, I'm not sure exactly, Lamps, what the highest-security verifier in the LayerZero network is. But, yeah, LayerZero has taken an interesting perspective where they kind of allow both of these paradigms. I don't know.

Evolving Security Configurations in Blockchain Ecosystems

Yeah, I don't know what the most secure level that they achieve is, but I think this has let them be very nimble with adjusting to the reality of many chains. Lamps, if you want to join, please feel free to send in a request. But I think, like you said, this is another trend in the bridge ecosystem. Like, all of these teams have started offering something called a configurable security stack where, like you said, security is a spectrum and it's a choice for all the teams building on top. And what we've seen is that the default setup is, I don't know, the lowest security, or not the lowest security, but usually run by the teams and some combination of some other security. So, for example, for LayerZero, it's Polyhedra plus LayerZero, and/or Google Cloud and LayerZero. But then at the opposite end of the spectrum, there are also combinations that involve Lagrange's ZK tech stack, or they also have a restaking DVN.

Security Needs Varying by Application

So do you think that this trend is here to stay? And are you guys also offering something at Omni? Hey, Lamps. Good to see you, sir. Yeah, we can talk about what we're doing at Omni, but I want to give Lamps a second to hop in, just given that we discussed LayerZero a bit. GM, I was just going to quickly say, but I think Arjun actually responded about LayerZero just as I was hopping in. But I think the idea of security when building on top of the LayerZero protocol is that each application needs a different level of security. And I think, I mean, what people will hear Brian say a lot is that if you're a $1 NFT, you need a certain level of security. You don't need the same amount of security that a multi-billion-dollar bridging transaction needs. Or if you're, like, a large OFT, you may need more security.

Flexibility in Security Choices and Infrastructure

And I think the thing about the LayerZero general messaging architecture is that you can actually choose the level of security that you want. So I think, Austin, you mentioned that different pathways will have different levels of security, but actually even different applications on different pathways can choose different levels of security. I think there are 58, maybe 60 DVNs live today. If you want to use all 60 DVNs from Ethereum to Base when you're moving your OFT, you can do so, or you can choose a required 30 and ten of the other 30, or whatever you want. It really depends on what the developer is looking to achieve. And then additionally, on top of that, I think what it means is that the security and the DVN ecosystem can scale with new forms of security.
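The "required 30 and ten of the other 30" arrangement can be sketched as a two-part check: every required verifier must attest, and at least a threshold number of the optional ones must as well. This is a simplified model with hypothetical names (`SecurityConfig`, `is_verified`, `dvn-N`), not LayerZero's actual configuration API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SecurityConfig:
    required: frozenset      # verifiers that must all attest
    optional: frozenset      # verifiers of which only some must attest
    optional_threshold: int  # how many optional verifiers are needed


def is_verified(config, attested_by):
    """True if all required verifiers and enough optional verifiers attested."""
    attested = set(attested_by)
    if not config.required <= attested:
        return False
    return len(config.optional & attested) >= config.optional_threshold


# Hypothetical setup: 30 required verifiers plus any 10 of another 30.
required = frozenset(f"dvn-{i}" for i in range(30))
optional = frozenset(f"dvn-{i}" for i in range(30, 60))
config = SecurityConfig(required, optional, optional_threshold=10)

attested = set(required) | {f"dvn-{i}" for i in range(30, 40)}
print(is_verified(config, attested))              # True
print(is_verified(config, attested - {"dvn-0"}))  # False: a required verifier is missing
```

Setting `optional_threshold=0` with a single required verifier models the lowest end of the spectrum, while putting all 60 in `required` models the strictest option mentioned.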

Dashboard Insights on DVN Usage

So, you know, Arjun, I think you mentioned Polyhedra's ZK light client, which was part of LayerZero V1 and is now a DVN. And as some of that ZK tech scales and improves, you can now include those as new DVNs. I think that's what's super interesting. Lamps, one thing that I haven't been able to find, but I honestly would be super curious to dig into: do you know if there's any dashboard out there that shows the utilization of different DVNs along these different paths? I'd be curious to see kind of, like, what people are opting for today in the spectrum that is available to them. Yeah, I don't think LayerZero themselves have released one yet. I think they're working on it, at least from what I've heard.

Current Trends in DVNs and Future Outlook

There were a couple of Dune dashboards that were built or released last week. I know at least on, like, the most popular pathways, or what you might expect to be the most popular pathways, Stargate's DVN, and kind of Stargate's DVN architecture, dominates a lot of those pathways, just because Stargate does a ton of messages on LayerZero. But, yeah, it's a little interesting to see. I would say on each pathway, again, on the more popular pathways, there are probably up to about 10 to 15 DVNs that are used. But again, Stargate's DVN and LayerZero's default DVN are some of the more popular ones. But I do think that dashboard is coming. Cool.

Interest in Verification Methods and Security Constraints

Yeah, I definitely would be curious to check that out, just because I know, you know, I think it's, like, around 50 or 60 at this point, of different verification methods that you can use. But I'm curious to see what people are actually using out there in prod, just because there is definitely a balance of these different things. Lamps, one thing, and I know you're focused on Stargate, but maybe this is one thing that you think about: as people are onboarding these different verifier networks, given that, I know they're called decentralized verifier networks, but they can just be, like, me with a node.

The Balance of Permissionless Operations and Security

Like, it's kind of arbitrary the way that you can verify it. Have you guys talked about putting, like, different constraints on the lower bound of this? Because I imagine it would kind of suck if, like, I just set up a node and I get some protocol to use my verifier network, and then I just, like, lie and rug them. Is this something that you guys have discussed, like, kind of putting a lower bound on security constraints at all? I mean, on the building of a DVN, and again, I'm not really sure exactly how LayerZero thinks about it, but from what my conversations with them have been, building a DVN is intentionally very permissionless.

Marketplace for DVNs and Developer Flexibility

And so, as you said, it could just be you, and you run a node and you verify some of these messages. And the idea behind the dashboard then would be, how often do you lie, or how often do you not verify a message intentionally? Or how often is your DVN down? And so an application that is now deciding to build on LayerZero goes to this marketplace of 60, or maybe at that point it's 100, different DVNs and is able to select the DVNs that have the most uptime, have the quickest response time, things like that. So I don't know whether there's a goal of setting the lower bound of how good or how trustworthy a certain DVN has to be. Because ultimately, if me as a developer, on a Sunday afternoon, I want to deploy an OFT and I want to use my own DVN just because I'm playing around, then that should also be possible.
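The marketplace idea described here, choosing verifiers by how often they lie, how often they are down, and how quickly they respond, could look roughly like the following selection routine. Everything in it is hypothetical: the `VerifierStats` fields, the `shortlist` function, the 99% uptime cutoff, and the sample data are assumptions for illustration, since no such dashboard or API is confirmed in the conversation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VerifierStats:
    name: str
    uptime: float             # fraction of time online, 0.0 to 1.0
    median_response_s: float  # typical time to attest, in seconds
    false_attestations: int   # times the verifier attested to something untrue


def shortlist(stats, min_uptime=0.99, max_count=3):
    """Drop verifiers that have lied or are often down, then rank the rest."""
    eligible = [
        s for s in stats
        if s.false_attestations == 0 and s.uptime >= min_uptime
    ]
    # Highest uptime first; ties broken by the fastest response.
    eligible.sort(key=lambda s: (-s.uptime, s.median_response_s))
    return [s.name for s in eligible[:max_count]]


# Hypothetical marketplace data.
market = [
    VerifierStats("alpha", 0.999, 4.0, 0),
    VerifierStats("beta", 0.999, 2.5, 0),
    VerifierStats("gamma", 0.95, 1.0, 0),   # too much downtime
    VerifierStats("delta", 0.9995, 9.0, 1), # has lied before
]
print(shortlist(market))  # ['beta', 'alpha']
```

A developer experimenting on a Sunday afternoon could bypass the shortlist entirely and pick their own verifier, which is the permissionless property being defended.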

Thoughts on Security Ecosystem Development

Yeah, that makes sense. I'm totally with you on the permissionless side of things. Arjun, I'm curious to hear your perspective on all this as well. I know that many people in the space have been thinking about this for a number of years, but I know you specifically have spent a significant amount of time thinking about this. I know you've been around for a while. So what's your take on kind of where we're at in the ecosystem in regards to the current security structure? You know, like I said before, I kind of feel like we're experiencing, like, freshman syndrome, where people who are here now weren't around for prior massive-scale hacks, and so they care less about the security implications.

Protocols Responsibility and User Awareness

That's kind of my perspective. I'm curious to hear how you are thinking about it, Arjun. I mean, so I think there's a responsibility on the protocol teams to kind of, you know, battle-harden the defaults, because if defaults exist, they will be used. Right. And that's what the data shows as well. Like, you asked Lamps about a dashboard, and the dashboard also shows that naturally LayerZero and, like, some of the default setups are being used more often. And that's okay, right? Like, these are built for convenience. I just feel like there needs to be a bigger push towards educating some of these apps about the other options.

Encouraging Security Best Practices

Like, there is the ZK light client option, there is the restaking option, and it's on them to actually use these resources. As you rightly said, you can technically just set up a verifier over the weekend, and that's also okay. And it's on the app to actually use this setup for their application. So how I would use this verifier is probably in combination with a default setup. Let's say I use a LayerZero default and then I add my own verifier on top, saying that, okay, LayerZero should definitely verify the message, but it only goes through if my own validator also verifies it. So there are levels to the security that we can stack on top of each other.

Final Thoughts on Security Configurations

And I feel like this optionality, the flexibility and the different options, should be available. But the fact is that if defaults are there, they will be used. So we definitely need to do a better job at, I don't know, just having something better than multisigs as defaults. Yeah, I totally agree with that. Lamps, do you have any thoughts there? Given that LayerZero is more configurable, is there kind of an in-protocol default that is established today with LayerZero? Lamps, do you know that?

Introduction to Interoperability Protocols

So Lamps is back in the crowd. But there's no in-protocol default right now for LayerZero or for any other messaging bridge. I think Hyperlane has a similar design: they have ISMs instead of DVNs, and there's no in-protocol default. It's all available for teams to use. And naturally, more teams just gravitate towards using defaults. Yeah, yeah. And, I mean, we can talk about our perspective on, you know, how we're doing this at Omni, but I'm not going to make it sound like people are just being reckless by implementing lower security properties, because the reality is a bunch of chains are springing up and it is hard to go establish connections to all of them and maintain the same level of security. Again, not talking ill of any specific team. Just with that in mind, the Hyperlane team as well, with their ISMs, I know that they have these defaults.

Security Considerations and Challenges

Yeah, like, objectively, there are lower security thresholds for some of these ISMs out there. What this enables them to do, though, is have this permissionless interoperability type of product that they're offering to people. The way that I hope this goes is, I've seen a number of really large-scale bridge hacks at this point, and I've personally lost, like, a decent amount of money in these things. I do see technologies on the horizon here that can make them substantially more secure with pretty high performance properties, both in terms of speed and cost. Really, that comes down to restaking and ZK, in my opinion. I feel like it's an arms race right now. Let's really try to advance these technologies so that we can do this in a secure enough way. But, like, in the meantime, there is a pretty clear business incentive to expand across all these different platforms where it is pretty hard to do it in a secure way. So that's kind of where my head is at with this.

Discussion on Defaults and Security

Lamps, do you want to add anything? No, I think I kind of hopped in and out there, but I think, Arjun, you covered pretty much what my thoughts on the defaults are. I think the defaults are important for the developer that wants the defaults there. And if your protocol needs, or if your application, your omnichain application, needs more security, then you should be able to add more security to it as well. Got it. So, Austin, on your point, what are these other options? Like you mentioned, there's ZK, there's restaking. But with restaking, like, we know there's no slashing right now. And with ZK, where do we currently stand? Yeah, yeah, that's a great point on restaking.

Omni's Model and Future Plans

So to give everybody a bit of context, if you're unfamiliar with Omni, the model that we use is similar to Axelar in the sense that we have a decentralized network of people who are staking capital. What we have on top of this, though, or will have on top of it once there is slashing, is restaking. And so kind of the idea here is, originally we built a DeFi protocol on L1 and scaled pretty quickly. We pulled in, like, 50 mil of TVL in, like, two days. And so we wanted to expand this across different rollups, because even though they were quite young and, like, immature at the point that we were building this, we knew the future of Ethereum was going to be rollup-based. And so we wanted to make sure that our protocol could thrive as we kind of made this transition as an ecosystem, instead of being narrowed into one place, and through a journey of exploring different designs.

Collaboration with EigenLayer

We ended up meeting the EigenLayer team about two years ago at this point. And a lot of it just clicked for us, because we're like, all right, we're Ethereum developers. We want to build with the confidence that Ethereum gives us today. And so what if we designed a system that allowed us to build applications across multiple rollups, but didn't deviate too far from these properties that Ethereum can offer? And so that's kind of where the restaking idea came in. It's like, can we secure how these different rollups are communicating with one another with restaking? So all of that is dependent upon slashing, which we still don't have. Symbiotic has shipped this to devnet. I personally still have not seen these interfaces from the EigenLayer team.

Expectations and Delays in Development

It's been kind of, honestly, a bit surprising to me to see Symbiotic ship it, or ship it in devnet at least, before EigenLayer has. But I believe that, you know, these teams are focused on it, and I think this will be a very powerful mechanism to increase security properties. But, like, I think the space has gotten a little carried away with restaking at large, just because there's no slashing. And if there is no slashing, there is simply no utility in restaking as an idea, because the whole idea is that you can slash a bunch of ETH if people misbehave. So that's one of these kind of more exciting applications where I see that we can improve the security properties, and the other one is ZK. But a lot of that comes down to making it quick enough and cheap enough to generate the proofs that they can compete with some of these other verification methodologies.

Challenges and Future Developments

Got it. By the way, did restaking and, like, the deprioritization of slashing kind of concern you guys or delay your plans in any way? Yeah, I mean, speaking transparently, like, we expected this to be shipped much sooner. That's, like, simply the case. You know, I think the teams working on shipping slashing for their restaking protocols have just faced delays that they weren't anticipating. But originally we thought slashing was going to be available to us, you know, earlier this year. Got it. And are you guys open to exploring other options, or is it just going to be EigenLayer? I'm not sure how it works, so I don't know what to expect as the answer.

Exploring Options and Projects

Yeah, I mean, I can tell you, from, like, a pretty pragmatic business perspective, what matters to me is creating a system that can actually scale the on-chain economy in a secure way. And so I think the EigenLayer team has done a very good job kind of introducing this idea of restaking, which originally came from Cosmos and Polkadot, but they did a good job bringing it into the Ethereum ecosystem. But having said that, it's not there. There are contracts that people can deposit ETH into, but there is no slashing. And without slashing, there is no restaking. And so seeing that teams like Symbiotic are shipping slashing is very exciting for me to see, because I think it's just healthy for the ecosystem at large, in a way similar to Solana applying pressure to the Ethereum ecosystem.

Open to Collaboration and Development

I think it's healthy to just see that in markets at large. And so, yeah, we're looking at these different options. We're chatting with a number of the teams, and really what we care about is just, like, ship the thing. The teams that get us a usable product, we are very happy to explore with them. Makes sense. Lamps, any thoughts on that? No thoughts. Okay, so maybe, like, just to change topics a little bit. So I see your posts around intents and chain abstraction, and you're kind of one of the few messaging bridge builders that's a proponent of chain abstraction and intents.

Intents and Chain Abstraction

Right. So I kind of want to know, what are your thoughts on these two technologies or, like, the different concepts that are emerging? And do you see intents as this competitive technology or as a collaborative tech that can be used with messaging? And I know Lamps has thoughts on intents, so maybe he can share them as well. Yeah, I've seen Lamps get spicy on intents on my timeline. So I'm actually happy that you're here, man, to discuss this. I mean, from my perspective, I mostly see it more from, like, a layers-of-abstraction perspective. I don't think that these are necessarily competitive.

Comparing Intent-based Systems

You know, at the end of the day, for any intent-based system to work... Okay, for anybody who's not super familiar with, like, what intents are versus messaging, probably the best way to view it is that there are two ways to view the world. One is through the lens of a chain, and one is through the lens of a person who can just go look at these different chains. And so historically, we have done all this messaging stuff through the lens of a chain. So it's, you know, like, if I want to move $10 from Ethereum to Solana, I have to put that in the messaging protocol, wait for the messaging protocol to verify that I did that, and then give me my money on Solana. But with this intents-type paradigm, what we can do is view the world through the lens of a person before we look through the lens of a chain.

Enhancing User Experience through Intents

And so that means that I can go make a transaction on Ethereum saying, like, hey, I'm going to go lock $10 in a box. Whoever gives me $10 on Solana and sends proof that they did that back to Ethereum, you can take the $10 out of this box. What this enables is effectively like short term loans, where for the end user, the experience can be much faster. And so the people who get blocked on having to wait for these messaging networks to communicate really are the people who front the capital. So in this case, the person who gave me $10 on Solana. So I really see this as like a natural, just like simply a superior design paradigm.
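The "$10 in a box" flow can be modeled as a small escrow: the user locks funds on the origin chain, a filler pays the user on the destination chain right away, and the box opens for the filler only once proof of that payment arrives. The sketch below is a toy model with hypothetical names (`FillProof`, `OriginEscrow`); it assumes the proof has already been verified by whatever messaging layer delivers it, and it ignores fees, expiry, and refunds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FillProof:
    filler: str     # who paid the user on the destination chain
    recipient: str  # who received the payment
    amount: int     # how much was paid


class OriginEscrow:
    """Funds locked by the user on the origin chain until a fill is proven."""

    def __init__(self, user, amount):
        self.user = user
        self.amount = amount
        self.released = False

    def release(self, proof):
        """Pay out to the filler; assumes `proof` was verified by the messaging layer."""
        if self.released:
            raise ValueError("escrow already released")
        if proof.recipient != self.user or proof.amount < self.amount:
            raise ValueError("proof does not match the intent")
        self.released = True
        return (proof.filler, self.amount)


# The user locks 10 on the origin chain; a filler pays them on the destination chain.
escrow = OriginEscrow(user="alice", amount=10)
proof = FillProof(filler="filler-1", recipient="alice", amount=10)
print(escrow.release(proof))  # ('filler-1', 10)
```

The user is paid at fill time, while `release` runs only after the proof crosses chains, so the waiting falls on the filler who fronted the capital.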

Discussion on the Feasibility of Intents

Maybe that's like a spicy way to state it. Lamps, I'm curious to hear your take, but in order for any intent-like system and chain abstraction system to work, at the end of the day, you do need to have the ability for chains to see what is going on on other chains. But the really cool part is that you don't have to block the user experience on it. That's how I think about it. Lamps, I know you have covered. I've seen you discussing with a few people in threads. I'm curious to hear if you disagree with any of that, or if you think there's nuance that's left out there. I mean, I think the first point that you made is undeniably true, that there are trade-offs to both systems and there are some places in which intents play an important role.

Clarifying Misconceptions about Intents

But I guess one of the things that kind of frustrates me is the idea that intents are necessarily faster than messaging. I think the idea that every intent transaction is faster than every messaging transaction is just verifiably false. There's a reason that a lot of intent protocols like to use median over average time. Like, the average fill time on some messaging, sorry, intent-based bridges is above two minutes for a lot of chains.
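To see why the choice between median and average matters here, consider a small sketch. The fill times below are made-up numbers chosen purely for illustration (they are not measurements from any bridge): most fills are quick, and a couple of large ones take minutes.

```python
from statistics import mean, median

# Illustrative fill times in seconds. These values are invented for the
# example and are NOT data from any real protocol.
fill_times = [2, 3, 3, 4, 5, 6, 300, 600]

median_fill = median(fill_times)  # barely moved by the two slow fills
mean_fill = mean(fill_times)      # pulled up heavily by the two slow fills

print(f"median: {median_fill} s, mean: {mean_fill} s")
```

With a long tail like this, the median stays at a few seconds while the mean lands close to two minutes, so the two statistics tell very different stories about the same set of transactions.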

Risk Management in Transactions

And this is because, for one, what they're ultimately doing is moving risk to the fillers, or whatever you want to call them. And so a filler is able to fill a $1 transaction in, call it two to three seconds, because they're not actually taking any risk. Like, every filler is able to fill a two dollar or, sorry, repay a $2 transaction. But this actually, for example, this just got announced. Galxe just launched their Gravity Alpha Mainnet and are using Stargate's Hydra. We were looking at a lot of the transactions that are coming through. A lot of the first transactions, users are bridging out of Gravity, or bridging into Gravity, in like 9.8 seconds from Gravity to Arbitrum. And that happens whether you're bridging $1 or whether you're bridging $55 million. There's no difference in size here. The risk is always the same.

Speed of Transaction Mechanisms

I think the idea that intent bridges are necessarily faster than messaging bridges is just incorrect. And as chains move towards single-slot finality or faster finality, messaging bridges will just be able to come down. Sorry, the constraint on messaging bridges is not the messaging protocol itself. It's just the number of block confirmations that's set. Like, when you're bridging from Arbitrum to Optimism, Stargate waits for ten blocks before sending that message over to the destination. But as that finality comes down to one block, single-slot finality, then that message will be sent over in 2 seconds or 1 second or whatever it is, as every single chain is trying to get to faster finality. And as that happens, messaging bridges will be able to compete on time. I don't see where.
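The argument that the bottleneck is the confirmation count rather than the messaging protocol can be written as simple arithmetic. The function below is a rough sketch: `messaging_latency_seconds` is a hypothetical helper, the 2-second block time is an assumed value for illustration, and real latency also depends on relaying and destination execution, which are lumped into an optional overhead term.

```python
def messaging_latency_seconds(
    confirmations: int,
    block_time_s: float,
    relay_overhead_s: float = 0.0,
) -> float:
    """Rough wait before a message can be sent to the destination chain.

    Assumes the wait is dominated by source-chain block confirmations,
    which is the claim made in the discussion above.
    """
    if confirmations < 0 or block_time_s < 0 or relay_overhead_s < 0:
        raise ValueError("inputs must be non-negative")
    return confirmations * block_time_s + relay_overhead_s


# Ten confirmations at an assumed 2-second block time.
today = messaging_latency_seconds(confirmations=10, block_time_s=2.0)

# One confirmation, as with single-slot finality, at the same block time.
single_slot = messaging_latency_seconds(confirmations=1, block_time_s=2.0)

print(today, single_slot)
```

Under these assumptions the wait drops by the same factor as the confirmation count, with no change to the messaging protocol itself.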

Intent-Based and Hybrid Systems

I don't necessarily think that intents will always be faster than messaging. And so I don't think that argument holds for very much longer. One thing that I'm curious to ask you is, so, basically, what I'm hearing is that, like, yeah, there are times that intent-based systems today, okay, but often there are times where, especially when the capital that is trying to be moved around is scaled up, intent-based systems are slower. And, you know, this probably comes from immaturity in the solver marketplace today. But thinking about just where we're at currently, have you guys ever considered building a hybrid system where, you know, for these smaller order or smaller fill sizes, it does use an intent-like system, and then for larger, if the intent is not filled in, like, 5 seconds, it defaults to something else? Have you guys considered anything like that?
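The hybrid idea raised in this question could look something like the sketch below. It is a guess at one possible shape, not a description of any existing product: `choose_route`, the $1,000 small-order cutoff, and the route labels are assumptions, and only the 5-second timeout comes from the question itself.

```python
from typing import Optional


def choose_route(
    amount_usd: float,
    seconds_to_fill: Optional[float],
    small_order_usd: float = 1_000.0,  # assumed cutoff for "smaller" orders
    fill_timeout_s: float = 5.0,       # timeout mentioned in the question
) -> str:
    """Pick a route for a transfer under a hypothetical hybrid policy.

    seconds_to_fill is how long a filler took to fill the intent,
    or None if no filler picked it up at all.
    """
    if amount_usd <= small_order_usd:
        # Small orders always go through the intent-like path.
        return "intent"
    if seconds_to_fill is not None and seconds_to_fill <= fill_timeout_s:
        # A filler took the larger order in time.
        return "intent"
    # Otherwise default to "something else", here a messaging bridge.
    return "messaging"
```

For example, `choose_route(50_000, None)` falls back to `"messaging"`, while `choose_route(50_000, 3.2)` stays on the intent path because a filler responded within the timeout.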

Designing Hybrid Protocols

Yeah, I mean, we obviously speak about it and think about it a lot. Like, anybody could build or design a protocol that, for example, for all transactions below $1,000, waits for zero block confirmations and immediately fills the transaction on destination and just has some reserve fund in case of any reorg. In fact, Stargate could do this today. It could set all block confirmations to zero across all chains, and then just use the treasury that it has to refill any reorged transactions at any point in time. There's x amount of million dollars in flight on Stargate, and Stargate has enough treasury to plug those gaps. So it could be done today. I just think the question is, what is the acceptable latency when doing a cross-chain transaction? And I think sub-10 seconds is absolutely acceptable.
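The zero-confirmation design described here can be sketched as a policy plus a reserve. Everything in this block is hypothetical (the `ZeroConfBridge` class, the default of ten confirmations for larger transfers, and the reserve size); it only illustrates the trade described in the conversation, where small transfers skip confirmations and a treasury absorbs any reorg losses.

```python
from dataclasses import dataclass


@dataclass
class ZeroConfBridge:
    """Hypothetical bridge policy: instant small fills backed by a reserve."""
    reserve_usd: float
    instant_limit_usd: float = 1_000.0   # threshold mentioned in the discussion
    default_confirmations: int = 10      # assumed setting for larger transfers

    def confirmations_for(self, amount_usd: float) -> int:
        # Transfers below the limit are filled without waiting for any blocks.
        if amount_usd < self.instant_limit_usd:
            return 0
        return self.default_confirmations

    def cover_reorg(self, amount_usd: float) -> None:
        # If a source transaction is reorged out after the destination fill,
        # the reserve fund absorbs the loss.
        if amount_usd > self.reserve_usd:
            raise ValueError("reserve cannot cover this reorg loss")
        self.reserve_usd -= amount_usd


bridge = ZeroConfBridge(reserve_usd=5_000.0)
print(bridge.confirmations_for(250.0))    # small transfer, no waiting
print(bridge.confirmations_for(25_000.0)) # large transfer, waits for blocks
```

The design choice is that the protocol, not the user, carries the reorg risk for small transfers, so the reserve has to be sized against the total value in flight below the limit.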

Latency and Transaction Speed

You can't even get an Ethereum transaction submitted in that time. I think there's kind of this rush to improve the experience before the technology is actually built to handle that latency and handle that cross chain experience. I think, as I said, Stargate can get to the point where it does incredibly fast bridging in the next three to six months. With a lot of the single slot finality stuff coming up, I don't think there necessarily needs to be a rush. We see most Stargate transactions go through in sub 40 seconds. From my perspective as a user of Stargate, that's pretty acceptable. And this again happens at like $1 or a million dollars or $3 million.

Liquidity Concepts in Bridging

Just to add a little bit on top of it, I think just-in-time liquidity is not a foreign concept in bridges. This was also explored, I think, one year or two years ago. But at that time, because people were trying to pull liquidity from on-chain sources, the yield would never make sense. So you would technically be paying Aave for the loan, and the yield that you would have to pay back to Aave would just be so high that the capital loan would never make sense. But now with intents, because we have off-chain liquidity and you can have, say, market maker inventories being pulled up for capital, this just works a little bit better.

Considerations on Multisig Use

This is not the right. I have a lot of things to say about that specific topic. But the topic of "are bridges just multisigs", it's kind of not the right title. Maybe you and I, Arjun, can have a conversation about that another day. Yeah. Yeah, that's fair. So, yeah, just getting back on topic then, any last thoughts on what you guys would like to see built in terms of security for bridges and, I don't know, last thoughts as builders in this space of what you wish to see in this space?

Incentives and Security in Bridges

I mean, I'll throw out a funny comment. I want to see restaking, specifically, I want to see slashing shipped. But speaking more seriously, I think a lot of this is about balancing incentives. Sorry, can you guys hear me? My phone just told me that we... We can hear you. In a cheap way with high security. And so, the more we can improve the technologies at large to make it easier to provide comprehensive coverage to these different smart contract platforms while providing high security benefits, that I think is the path. And so that's why I like, even though I'm not super, I don't know, I'm a bit seeing these massive hacks happen previously.

Looking Ahead to Future Developments

I'm definitely concerned with the amount of capital being moved around that has fragile security properties. But I don't know, I prefer to be positive, especially on Twitter, and just only focus on exciting things that are happening and progress that we can make. So what I care most about is just contributing to dimensions that can balance out these incentives more, so that it is easier and cheaper to provide high-security corridors across all these different platforms, as opposed to kind of critiquing where we're at today. And in the meantime, I really just hope there's not another large-scale hack, because, let's be real, it'll probably be North Korea who did it again, and that'll just pull in more negative attention to the space.

The Role of Multisigs in Bridges

And so, yeah, that's kind of where I'm at with things. It's like, are bridges just multisigs? I think the answer to this is, well, a lot of bridges are multisigs, and even some bridges are kind of multisigs and kind of not. Like LayerZero, for example, maybe classifying it as an interop network, not specifically a bridge, but some of LayerZero is multisig, some of it is not. It's a slightly nuanced answer to the topic of the space. Makes sense.

LayerZero Perspective

Lamps, any last thoughts as someone building on top of LayerZero? No. I think in terms of what I want to see built in the space, I think the answer is always cheaper, faster bridging. That's what we focus on, at least. In terms of the topic of conversation, are bridges just multisigs, as I think you two have very accurately discussed throughout the space, there's so much nuance to it, and a lot of DeFi is just multisigs.

Understanding the Nature of Bridges

Like, ultimately, some contracts have to be controlled by multisigs, and that is the nature of things. I think it's difficult to broadly say, are bridges just multisigs? I think if you need them to be, they can be. But if you need them to be more, they can be more, at least when built on top of LayerZero, speaking as a builder on LayerZero. Makes sense. I think calling bridges multisigs is just unfair at this point.

Progress and Community Input

You can argue that Ethereum is a multisig, and that's just also very unfair. Right. Like, we've definitely come a long way since three years ago, and like Austin said, I just hope we keep moving in the right direction, and it's. It's nice to be here with you guys, and I'm glad to see that you guys are also thinking about these things. Yeah. And appreciate all the work. Awesome. So we'll just close this out. Sorry, I got a call in the middle of that.

Closing Remarks

Thanks for having me on. I appreciate it. Awesome. Thanks for joining, guys. All right, thank you all. Hope you all have a good day.