Space Summary

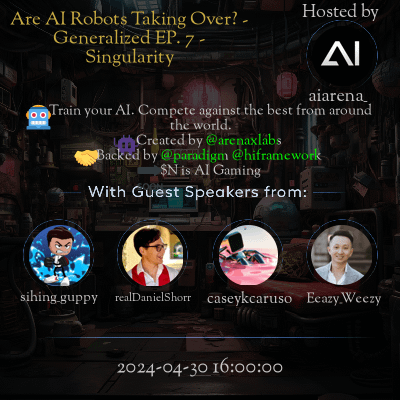

This space “Are AI Robots Taking Over? – Generalized EP. 7 – Singularity” is hosted by aiarena_. The Twitter space explored a diverse range of topics, including global conflicts, personal experiences, free speech, and content moderation. Participants shared challenges and viewpoints on world wars, online expression, and algorithms, diving into philosophical questions about AI, AGI, labor displacement, and ethical considerations. Real-life examples were used to illustrate the complexities of content moderation and the struggle to preserve free speech in digital spaces. The space served as a platform for engaging with these complex issues and sharing diverse perspectives within a lifestyle context.


Questions

How diverse were the discussions within the Twitter space?

The discussions were diverse, covering topics from global conflicts to personal experiences and product mentions.

What notable company and product were mentioned during the space?

An international film company was mentioned, along with products available for purchase.

What personal experiences were shared regarding living situations and legal issues?

Participants shared personal challenges and experiences related to their living situations and legal matters.

How was a ‘world war’ being defined and discussed?

The participants reflected on the definition and nature of ‘world war’ and global conflicts.

What questions were raised about free speech on platforms like Twitter?

The discussions raised questions about the feasibility of free speech on social media platforms like Twitter.

What challenges were highlighted regarding expressing opinions online?

Participants discussed the limitations and repercussions of expressing opinions online.

Why does context play a crucial role in online content moderation?

Context is essential in online content moderation to understand the meaning and intent behind the content shared.

What issues arise with algorithms and AI in content understanding?

Challenges with algorithms and AI include difficulties in interpreting nuanced content and context.

Do participants believe true free speech is achievable without censorship?

Participants shared varied viewpoints on whether achieving true free speech without censorship is feasible.

How was the complexity of content moderation illustrated through real-life examples?

Real-life examples were used to demonstrate the complexities and challenges faced in content moderation.

Highlights

Worldcoin’s Global Identity System

Time: 00:19:18 Discussion on Worldcoin aiming to create a global identity system using iris scanning technology.

Economic Disruption by Generative AI

Time: 00:34:25 Debate on the economic impacts of generative AI and its potential to disrupt various professions.

Humanoid Robots Debate

Time: 00:56:25 Exploring whether humanoid robots will benefit society or cause significant disruption.

Closing Remarks and Feedback

Time: 01:22:47 Encouragement for audience feedback to enhance the show and improve future sessions.

Reflection on Net Benefits of Technology

Time: 01:23:59 Discussing the balance between the advantages and disadvantages of technological advancements.

Key Takeaways

- Diverse discussions spanning global conflicts, personal experiences, and product mentions.

- Sharing of personal challenges and legal experiences related to living situations.

- Reflections on defining ‘world war’ and examining the nature of global conflicts.

- Consideration of free speech on social media platforms like Twitter.

- Discussions on expressing opinions online and the limitations thereof.

- Emphasis on the importance of context in online content moderation.

- Challenges with AI algorithms in understanding nuanced content.

- Varied opinions on achieving free speech without censorship.

- Real-life examples illustrating the complexity of content moderation.

- Engagement with complex issues and diverse viewpoints within a lifestyle context.

Behind the Mic

Debate on AI and Humanoid Robots

“Is debate is that like it’s scary versus it’s inevitable. And I want to call that out is like we keep going back and forth like every week. It’s like, this is really unfortunate and we don’t know the truth of this or we don’t know the future of this. And then the other side is like, yeah, but technology is going to happen and so we need to lean into it. And I don’t, I think that’s like a lazy argument here. So let me just jump in on the against side on the disruptive forces. Is there an argument to be made that this is actually kind of really scary? Just on theme of like Hollywood’s portrayal of humanoid robots, that is, things like Terminator, et cetera. Is there like a, isn’t there like a potential human safety concern here as well? Or do you think that’s overblown? No, I think that is real. I think that’s totally real. And I think to our conversation two weeks ago of if we can codify empathy, is like, if that ends up happening, right, we pair these AI’s with physical force as well. It’s an extremely frightening picture of what could be accomplished then, like that. That’s like the always, like the comforting thing about computing is it’s software. And so, like, it’s not, it’s not represented in the physical world or there’s like a limitation. But then the problem with these humanoids is like, you’re really giving them all capabilities that human uniqueness has from, like, evolution has led us to. And so it opens a door that’s, yeah, very frightening.”

Disruptive and Responsible Use of AI

“Toi’s point, and I completely agree. Would you mind if I do something a little different and almost switch sides for a second to argue against myself? I mean, that wasn’t a question, but go ahead. So I’m also gonna say that it’s potentially very disruptive, and irresponsible to deploy humanoid robots to do a lot of tasks. Specifically, I spent a lot of time doing uncertainty modeling for a number of years. And when you do that, you realize how most of the machine learning models actually work is that they’re optimizing for the expected value, which means that what they’re basically doing is they’re just taking the mean. And when you’re taking the mean in a very complex nonlinear space, that means that you have to, whatever your loss function is or whatever it is that you’re out of optimizing for, whether it be like, you know, policy or actions that you’re taking. You, it’s random almost. A lot of what we’re seeing is the technology advance and sort of unmeasured improvement of models. It’s, it’s focusing on things like time series data or user specific models or language models. These things are like, you know, domain of compute. It’s sort of AI explosion and nobody in this space has thought about the broader sweeping implications on other areas where it’s like there’s, there’s none of that seemingly from that field that are looking at like how every decision actually feeds back into the model. And so when you’re in this realm, it’s like the traditional AI sense, yes, but it’s not. It’s, you take an amount of input data and through the objective function, you try to figure out what’s like the latent space and then can, you know, make the right decision. But as soon as the dynamics of that change, right? Everybody’s looking at every single Bitcoin transaction for the last, you know, minute or every single thing happening on chain. And you see it, like it’s, it’s not possible to then start predicting it in the same way and feedback the same way and model it the same way, right? Sorry for going on that tangent. But then when you’re really talking about this humanoid stuff, I think that is the scariest part because negative case is starting to replicate itself.”

Future Challenges and Ethical Considerations

“And it’s more about, if you’re getting ridiculous amounts of variance inside the environment as a humanoid does certain tasks, if it’s just purely learning off of a neural net that was trained on, on a dataset, it’s like, its ability to actually make decisions starts to become unbounded or rather too bounded by past actions which were not happen. And then that structurally starts to really strike at the argument, can there be useful stuff happening there, right? Or is it just very disruptive and irresponsible to deploy them in. And I’m worried about how little foresight people have when they, when they train humanoid robots to stay. I’m curious if you have a reaction to this argument. Oh yeah, absolutely. I mean, that was a really long question. But I’m gonna answer it with three words. And I agree with that 100%. But I’m going to go back to my uncertainty modeling thing and assume you can model uncertainty. Well, let’s say you can. What you can do is you can bake into the policy. You can add in a kind of safety heuristic to not take actions that exceed a certain variance threshold. Right. For what you think the expected return will be for this thing. And so, there are. I don’t know if I want to get into this, but there are two types of uncertainty. One of them has to do with, like, uncertainty in your actual model, and the other one has to do with uncertainty in the environment. Right. And so if you detect that there’s high uncertainty in the environment, it usually means that you’ve never seen anything like this before. And so you shouldn’t know how to react in this certain situation. And so what you can do is you can actually bake that into the policy to say, if you have this level of uncertainty, this type of uncertainty, then I’m just not going to take this action and I’m going to go to what I perceive as the safe route.”
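The safety heuristic described in the excerpt above (skip any action whose expected return is too uncertain, and fall back to a safe route when the situation looks unfamiliar) might be sketched roughly as follows. This is only an illustration under stated assumptions, not anything the speakers presented: the function name `choose_action`, the per-action return samples (imagined here as coming from an ensemble of models), the `novelty` score standing in for environment uncertainty, and both thresholds are all hypothetical.

```python
from statistics import mean, pvariance


def choose_action(return_samples, safe_action, variance_threshold,
                  novelty=0.0, novelty_threshold=1.0):
    """Pick the best action whose return estimate is not too uncertain.

    return_samples: dict mapping each action to a list of estimated returns
        (hypothetical: e.g. one estimate per model in an ensemble).
    safe_action: the fallback the speaker calls "the safe route".
    variance_threshold: actions whose estimates vary more than this are skipped.
    novelty: hypothetical score for how unfamiliar the environment looks.
    """
    # Unfamiliar environment: do not trust any estimate, take the safe route.
    if novelty > novelty_threshold:
        return safe_action

    # Keep only actions whose estimated return has acceptably low variance.
    candidates = {}
    for action, samples in return_samples.items():
        if pvariance(samples) <= variance_threshold:
            candidates[action] = mean(samples)

    # Every action was too uncertain: take the safe route.
    if not candidates:
        return safe_action

    # Among the trusted actions, pick the highest expected return.
    return max(candidates, key=candidates.get)


# Illustrative inputs: "grab" has a high mean but wildly varying estimates.
samples = {
    "grab": [10.0, -8.0, 12.0],
    "wait": [1.0, 1.2, 0.8],
    "step": [2.0, 2.1, 1.9],
}
print(choose_action(samples, "stop", variance_threshold=0.5))               # step
print(choose_action(samples, "stop", variance_threshold=0.5, novelty=2.0))  # stop
```

Note the trade-off raised later in the conversation: a stricter threshold makes the fallback fire more often, so the policy is pulled toward the safe route at the cost of ever trying uncertain but potentially useful actions.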

Ethical Implications of AI Development

“I both love and hate that because, what always fascinates me about this stuff is it’s like, what you’re describing there, it’s like feedback loops and how they influence decision making. And it always makes me a bit more scared by it because if you look at like we talked about the other day, right? If you look at governments and how they tend to approach it, it’s almost like the center or centralization becomes a key focus. If you have too much of that uncertainty modeling capability, we will actually get to know how to react. It will basically drag you in one direction, right? That’s like that history will have all, is what we called, will have just played out. And so you get dragged into safety. And it worries me a little bit more when I think about how much centralization is happening, and that’s like the only brake on progress. Well I’m struggling to, the thing that I’m struggling with is, I’m trying to come to a conclusion. Here in this conversation about like, I don’t have, I haven’t sat down and thought through the best way to actually construct something that I can both sides feel that there’s something to be said about embracing humanoid robots to solve for future problems. And then equally, you see that there’s a lot of challenges that you would, you would dislike about it. So I get both sides whenever we talk. It’s, it’s tough, it’s a tough one. I think it’s actually so new at the level of implementation, you know, that it’s, it’s going back to your point about inertia earlier. That’s what worries me, I don’t think it’s happening anytime soon. No, but I like the contra example of like governments and centralization of approaches to handling things too. I like that a lot, but yeah, I feel, I guess I feel more minute by minute in the week that it’s like, isn’t the most convenient answer always the argument for inevitability? It comes a lot back to like those classic old arguments that technology has always started to invade.”

Future of AI and Ethical Considerations

“And I feel more and more there’s gonna be this trade off. Scaling the double-sided in the near future. Interesting, yeah. I just struggle with us in our own thoughts of, of, of leaning into, I’m gonna say one sentence and we should wrap up this call. Like for a lot of people who will in the beginning of this space they’re saying, let’s lean into inevitability. And the thing that we will deal with later, it will be the fallout of how centralized, how consumer driven we want it to be. Like, that’s, it’s a pattern. I’ve realized throughout. This is like, it’s like a cop out answer. It’s like, oh, but it’s scary. And then the other one is like, oh, but you know, technology prevails. And I think, yeah, what you just said is exactly how I feel. So maybe this is a question for us to explore in a future debate. Then maybe the framing of a debate should be, should we outright stop progress in some of this stuff? That’s like, not, I don’t know if that’s possible. Like, can you give me any example in history where we’ve ceased progress? It’s like the myth of progress has become so overwhelming in the West that’s not even, I don’t even know if we’re capable of that anymore. No, I understand. Not from a practical sense of can we? Is the kind of the philosophical sense of should we. So anyway, something that we would have to explore in future conversation. I love to take the time to thank everyone for joining us on this episode, the 7th episode of Generalized. Time is certainly flying when you’re having fun talking about intellectually stimulating topics and these debate structures and covering all of the things happening within AI and web three.”

Closing Remarks and Audience Engagement

“We’ll be back next week on Tuesday at the exact same time with a new episode and new content. To the extent that you guys have suggestions on how we can improve the show, definitely let us know. Happy to take that suggestion on board. Maybe even mini formats that we can integrate into the show, things that we should potentially retire and other things that we should add. We’re open to all those suggestions. As always, thank you very much for taking the valuable time out of your day to spend that with us. We’re very appreciative of that. Thank you to Casey, Guppy, and Daniel for joining us every single week and imparting your thoughts, ideas and wisdom on the audience. And with that, we’ll wrap this up as episode seven, where we will see you next week. Take care everyone, and have a great rest of your week. Thank you. Thanks everyone. Thank you.”