Space Summary

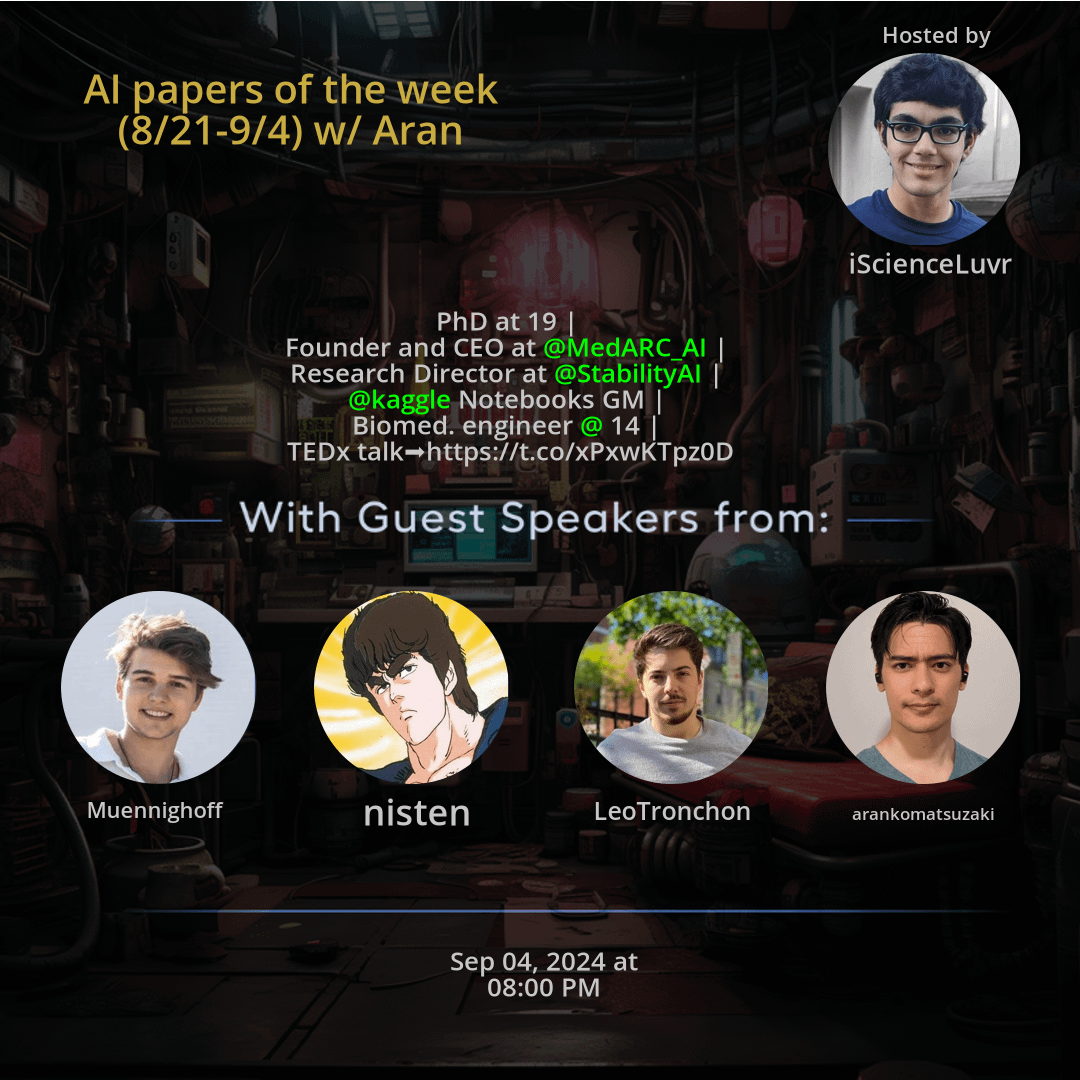

The Twitter Space AI papers of the week (8/21-9/4) w/ Aran hosted by iScienceLuvr. Delve into the dynamic world of AI research with Aran as he navigates through the latest papers of the week, offering insights and fresh perspectives. Explore the journey of a young prodigy to a CEO and research director, highlighting the significance of age diversity and early achievements in the AI landscape. Gain valuable knowledge on the intersection of biomedical engineering and AI expertise, along with the essential role of platforms like Kaggle Notebooks in driving collaboration and innovation. Discover how TEDx talks influence knowledge sharing and the impact of networking with experienced AI professionals on industry growth and learning.


Questions

Q: How does Aran contribute to the AI community as a CEO and research director?

A: Aran shares insights into impactful AI papers, fostering knowledge sharing and discussions in the AI space.

Q: What role does Aran's biomedical engineering background play in his AI expertise?

A: Aran's background highlights the intersection of biomedical engineering and AI, bringing a unique perspective to his AI work.

Q: Why is it important for industry professionals to stay updated on the latest AI research?

A: Staying informed on recent AI trends is crucial for professionals to remain competitive and innovative in the field.

Q: How do platforms like Kaggle Notebooks contribute to AI innovation?

A: Kaggle Notebooks provide a platform for AI enthusiasts to collaborate, share ideas, and drive innovation in the field.

Q: What can the AI community learn from the journey of a prodigious talent like Aran?

A: Aran's journey exemplifies the impact of young talents and diverse backgrounds in shaping the future of AI.

Q: How do TEDx talks influence knowledge sharing in the AI domain?

A: TEDx talks, like the one delivered by Aran, play a significant role in sharing expertise and insights within the AI community.

Q: What is the significance of age diversity in the AI field?

A: Age diversity brings varied perspectives and early achievements, contributing to innovation and progress in AI research.

Q: What can individuals gain from networking with experienced AI professionals?

A: Networking with experts like Aran provides valuable insights, mentorship, and learning opportunities in the AI domain.

Q: How do young talents impact the future of AI and research?

A: Young talents, through their contributions and innovative ideas, play a crucial role in shaping the future of AI and research.

Q: How does early success in the AI field influence future achievements?

A: Early accomplishments in AI, as seen in Aran's journey, can set a strong foundation for future success and innovation in the field.

Highlights

Time: 00:15:42

Aran's AI Journey and Achievements Exploring Aran's path from a young prodigy to CEO and research director in the AI realm.

Time: 00:25:18

Insights on Significant AI Papers of the Week Delving into the most impactful research papers and trends in AI for the highlighted period.

Time: 00:35:51

Biomedical Engineering Influence on AI Expertise Understanding how Aran's background in biomedical engineering contributes to his AI proficiency.

Time: 00:45:27

The Role of Kaggle Notebooks in AI Collaboration Examining how platforms like Kaggle Notebooks foster innovation and collaboration in the AI community.

Time: 00:55:14

TEDx Talk Impact on AI Community Analyzing the influence of TEDx talks, like Aran's, in sharing knowledge and insights within the AI domain.

Time: 01:05:39

Networking and Learning in the AI Domain Emphasizing the importance of networking with experienced professionals for growth and learning in AI.

Time: 01:15:22

Youth Impact and Innovation in AI Highlighting the significance of young talents in driving innovation and advancements in the AI field.

Time: 01:25:17

Importance of Staying Updated in AI Research Discussing the crucial need for industry professionals to stay informed on the latest AI developments.

Time: 01:35:50

Diverse Perspectives and Achievements in AI Recognizing the value of age diversity and varied backgrounds in shaping AI research.

Time: 01:45:28

Mentorship and Knowledge Sharing in AI Exploring the benefits of mentorship and learning from experienced individuals in the AI community.

Key Takeaways

- Insights into the most impactful AI papers of the week.

- The journey of a prodigious AI talent who became a CEO and research director at a young age.

- The intersection of biomedical engineering and AI expertise exemplified by Aran's background.

- Valuable knowledge sharing and discussions on the latest AI research trends.

- The significance of age diversity and early achievements in the AI field.

- The role of platforms like Kaggle Notebooks in fostering AI innovation and collaboration.

- The influence of TEDx talks in sharing expertise and insights in the AI community.

- The importance of staying updated with the latest AI research for industry professionals.

- The impact of young talents in shaping the future of AI and research.

- The power of networking and learning from experienced individuals like Aran in the AI domain.

Behind the Mic

Introduction and Overview

Hello. We will start soon. Waiting right now for Aran to join. Hello. Yeah, I guess we will get started now that Aran is here. Yeah, we didn't have a session last week, so we are actually covering two weeks of papers. I'll put up the list of papers on the space, and for the first one we actually have an author who will be presenting their paper. Aran, maybe you want to introduce it.

Paper Presentation Introduction

Oh yeah. So the first paper is about building and better understanding vision-language models. This is from Hugging Face, and yeah, it's a very interesting paper about vision-language models. I'm personally very interested in their exploration. Obviously we can just ask. Leo is going to give more details, so yeah. I'm just waiting for more people to join. Do you think we should wait a bit? Yeah, we can wait a couple minutes. In the meantime I'll try to put the paper on the top of the space here. I was thinking of covering the Show-o paper. You know, they basically combine an autoregressive model and a diffusion model.

Discussion on Paper Methodology

I noticed that their strong performance on vision understanding tasks is thanks to the use of Phi-1.5. So this baseline was very good, which is why it performed even better than the 32-billion Chameleon. Yeah. Do you want to wait a little bit more or should we get started? I guess we can start. Yeah, Leo, I guess you can take it away. Okay. Thank you. Hi, I'm Leo. So I'm one of the authors of Idefics2 and Idefics3. So this paper is a little bit of a brain dump of all the learnings we accumulated as a team building the Idefics series. So in the paper we talk about the different architectural changes that are important and that have been compared across the literature.

Architectural Insights and Technical Details

I think right now I don't know how technical I should get, but I guess I'll just wing it. So the architectures that are being used right now are very much autoregressive. So basically you have a vision tower, you have an LLM tower, and the vision tower provides image tokens. And there's kind of a convergence of the VLMs around this type of architecture. There's a debate on how you should connect the vision tower and the LLM. And so we explore this as well and we look at what's been done around. In Idefics, a perceiver was used to pool the different image hidden states produced by the vision tower, but it's been shown that you don't actually need that many parameters, or that big of a connector, to pool those tokens.

Finding the Right Model for Vision Tasks

I think the team behind InternVL has shown that quite strikingly recently. We explore how the images should be passed and how resolution impacts the performance. This is mostly interesting for tasks that involve OCR. And so what's been really interesting is that, for example, if you give LAION image-text pairs and standard image-text datasets to a VLM, and you look at benchmarks like TextVQA, OK-VQA, VQAv2, et cetera, those that were standard for a while, you don't see much of an improvement if you add a lot of tokens per image. But you do when you look at tasks that involve OCR heavily, like DocVQA, et cetera.

Challenges in Tokenization and Task Specificity

And this has been a big pain point because this task is quite specific. And sometimes you see it only in the last stage of training. And we've made mistakes in the past, thinking that only a few tokens per image was enough, when actually for some fine-grained tasks and tasks that require good OCR understanding, it was very important to scale the number of tokens per image. There's also a bit of a deep dive on the datasets that have been used: the interest of having image-text pairs; web documents, so interleaved image and text as you would find on the Internet; PDF documents with a lot of characters and reading involved; and synthetic data.

Variety of Data Set Sources and Performance

So all of those have their different strengths and they bring different types of performance to the VLM. So they really target specific benchmarks. Sometimes you can be really good at some benchmarks. With interleaved image-text documents and image-text pairs, for example, you will reach very good performance on VQAv2 and OK-VQA, but unless you incorporate some PDF documents, you will be incapable of reading. That makes sense, but it has to be ablated. And also the image-text pairs that you find on the Internet are often quite bad.

Importance of Synthetic Data

So actually synthetic datasets that recaption the images have been shown to be a lot better and to help performance a lot across benchmarks. So those are the big findings that we find across the literature and that we have found as a team as well. Yep. That's it? Yes. That's very interesting. Yeah. I guess if anyone has any questions, by the way, they can reply to the space with their questions, like by tweeting. So I'll let you know if there are any questions.

Questions and Directions for Future Research

One question I had was if you guys had analyzed, you know, looking at multiple vision encoders. I know that's become a recent trend in the literature, you know, passing in features from multiple vision encoders and kind of ensembling them. Is that something you've looked into at all? So this is something that other teams have looked into, I think somewhat successfully. But this is not something that we've tried in our team. Generally, we would just ablate the different vision towers, but we wouldn't combine them. One of the reasons for this is that it's already quite expensive to run one vision tower.

Technical Limitations in Multi-Encoder Approaches

So if you have to run multiple of them on long series of images, it becomes a bottleneck. One of the big bottlenecks we've had, actually, is passing a lot of images, because now we split them. So for very big images of PDFs, for example, you have to pass long series of images and it becomes quite a bottleneck. So, yeah, that's one of the reasons we weren't too interested in having multiple vision towers, because it was already kind of a bottleneck for us. Does that make sense? Yeah, that makes sense. Aran, did you have any questions?

Future Directions in Research and Development

Oh, yeah. Sorry. Do you have any suggestions for what kind of directions we should take for the future? I think synthetic data should be explored heavily, because the thing is, data for which you have a detailed explanation of what an image looks like, or of what's going on in an image, is not available for free on the Internet. It's not something that people often do. When you post an image, you don't describe everything that's going on in there and post it on the Internet.

Creating Synthetic Datasets

And so you don't have that available freely. And I think creating it synthetically, either by using models or a combination of models or other things, is the way to go to create very rich datasets. Yeah, I totally agree. This is something that's really underexplored, in my opinion, given the boost in performance it should bring. For example, the image-text dataset that we're using in Idefics2, and I think in Idefics3 as well, is LAION COCO. But the captions are made by very small models.

Modeling for Better Captions

If you make the captions with a much better model, and you constrain it to describe specific things in the image, I think you can get really good results regardless of the architecture or anything. So there's a big gap here that shouldn't be too hard to solve. It just costs a lot of money. Or a little bit of money, if you're using inference on paid models. Yep. That would be my go-to. Yeah, makes sense.

Further Discussion and Questions

Cool. Nisten has a question, but I don't know, like, again, it's about like mixed towers or multiple vision towers. He's saying, did the order matter when using mixed multiple vision towers together? I guess you don't really necessarily know the answer to this question. Yeah, actually. Yeah, unfortunately. Yeah. I don't think it would matter too much. Yeah, I don't think it would matter too much. Because the thing is, your target is the text, right?

Understanding the Processing of Visual Context

So you don't target anything until the text comes in. So you would have your vision towers outputting the image tokens. You don't get any feedback from, like, the image tokens, but as soon as you have the text starting, then it takes into context what you got from the vision towers. So I would think that it wouldn't matter. Yeah. Otherwise, maybe something else is that right now there's a lot of splitting images.

Resolution and Processing Efficiency

I feel like it's a little bit of a hack. And using a vision tower that can take in very high resolution images is probably the way to go. There are just not that many available that are really well pre-trained. So I think the hybrid one that I would like to try is the... Oh, I don't remember. It's a vision transformer, but it's a hierarchical transformer. Sorry, I lost the name.

Choice of Vision Towers for Optimal Performance

But yeah, basically, I think there are vision towers that can take images of a lot bigger resolutions without requiring so much compute. And they may be very good to explore. So maybe I didn't catch it, but what are the vision towers or vision encoders that you guys have experimented with and saw were the best for Idefics? We tried SigLIP, we tried the CLIP ViT-H, we tried the EVA-CLIP.

Evaluating Vision Encoding Methods

But the issue is, when we tested EVA-CLIP, we didn't resize the image to the same resolution. It's the EVA-CLIP 6B I'm talking about, because there are multiple of them. But because we didn't resize the image, there's a confounding factor, because the EVA-CLIP has been trained on 224 by 224 images, and the SigLIP has been trained on 448 by 448 images, I think. Or 378, maybe 378. Yeah.

Addressing Image Resolution in Experimentation

Anyways, so yeah, you have to be really careful. And at the time when we did it, I think this is one of the mistakes we made. We wanted to take the resolution that they use for pre-training, but you should actually use, I think, the resolution that you want to use at inference. So which one was the best performing one, or which is the one that you finally went with for Idefics? SigLIP has been the best all around.

Contemporary Insights on Model Performance

Yeah, definitely. We've got Lucas here, so he'd be very happy to hear that. Cool. Okay, so, yeah, I guess Nisten is just following up, asking, what about for a single tower? I guess order matters there. He's wondering, if we use that approach, how the mosaic can scale with a variable number of horizontal and vertical chunks until it saturates all the context.

Exploring Image Chunking and Scaling

I'm not sure I fully understand, actually. We can invite Nisten if he wants to come and ask the question himself. I've invited him to speak, but, yeah, I guess, is there anything about order for the image encoder? I guess you were talking about doing image first and then text.

The Context of Input Ordering

But technically, you could probably also do text first and then image, right? But your annotation is the text. Your labels for the image tokens are masked, so you don't get any feedback from them. Your annotation is the text. I'm just outside, so this might be noisy.
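The label masking described here can be sketched as follows. This is a minimal illustration, not the Idefics training code: the token IDs are made up, and the sentinel value -100 is an assumption chosen because it matches the default `ignore_index` of PyTorch's cross-entropy loss.

```python
# Assumed sentinel: positions carrying this label are skipped by the loss.
IGNORE_INDEX = -100


def build_labels(token_ids, is_image_token):
    """Copy token IDs as labels, but mask out every image-token position."""
    return [
        IGNORE_INDEX if is_img else tok
        for tok, is_img in zip(token_ids, is_image_token)
    ]


# Hypothetical sequence: three image tokens followed by four text tokens.
token_ids = [9001, 9002, 9003, 12, 57, 33, 2]
is_image = [True, True, True, False, False, False, False]

labels = build_labels(token_ids, is_image)
supervised = [label for label in labels if label != IGNORE_INDEX]

print(labels)
print(len(supervised), "positions contribute to the loss")
```

Only the text positions are supervised, which is why the image tokens produce no direct training signal of their own.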

Practical Considerations and Model Application

But you know how Fuyu would go through the image row by row? I was wondering, if you just trained the model to go through any image like that, then your only limit would be how much context window you have. You understand what I mean? Let's say you have, like, a 324 by 324 chunk of the image. Yeah. And you have an 8K picture.

Contextual Constraints During Input Processing

You should be able to go through it for as big as the image is and not necessarily have to train the rest of the LLM projection layer to be able to handle it. Yeah, I'm just wondering. Maybe also it's about handling any size images. Yeah. I wonder if it can be the...

Resolution Settings for Effective Processing

The way it's done right now is because the vision towers are trained with a specific resolution. And then also, you don't want to put too high a resolution in a vision tower because the number of patches increases very quickly. For example, if you put a 980 x 980 image in a SigLIP, you get 4900 hidden states, and that's a lot.
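The arithmetic behind the 4900 figure can be checked with a short sketch. The patch size of 14 is an assumption; it is the value that makes a 980 x 980 image yield exactly 4900 patches, as stated in the discussion. The quadratic count of token pairs is only a rough proxy for self-attention cost.

```python
def num_patches(height, width, patch_size=14):
    """Number of non-overlapping patches (patch_size=14 is an assumption)."""
    return (height // patch_size) * (width // patch_size)


def attention_pairs(n_tokens):
    """Token pairs compared by full self-attention: grows quadratically."""
    return n_tokens * n_tokens


for side in (224, 448, 980):
    n = num_patches(side, side)
    print(f"{side}x{side}: {n} patches, {attention_pairs(n)} attention pairs")
```

Going from 224 to 980 pixels per side multiplies the patch count by about 19 and the number of attention pairs by about 366.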

Challenges in High Resolution Processing

It scales like the... the attention becomes very expensive. So what people have been doing is splitting the image into multiple different chunks, passing each of those small images into the vision encoder, and then passing each of the hidden states produced into the LLM after. So you can still do any size image, but the way it's done right now is.

Efficiency in Image Processing Workflow

Is not ideal, in my opinion, because you have to split those images. Yeah, because like the CLIP 224 by 224, I think Neural Magic made a sparse version of it. It gets so tiny that it will run really fast. And then because of the on-chip cache and stuff, you don't get that much of a penalty from it. I was just wondering if it would make more sense that way.
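The image-splitting approach discussed above can be sketched roughly like this. The tile size of 490 and the patch size of 14 are illustrative assumptions, not values from the paper, and `tile_boxes` is a hypothetical helper. The sketch compares the attention work inside the vision encoder for one 980 x 980 image against four 490 x 490 tiles.

```python
def tile_boxes(height, width, tile):
    """Return (top, left, bottom, right) boxes covering the image.

    Tiles on the right and bottom edges may be smaller than `tile`.
    """
    boxes = []
    for top in range(0, height, tile):
        for left in range(0, width, tile):
            boxes.append((top, left, min(top + tile, height), min(left + tile, width)))
    return boxes


PATCH = 14  # assumed patch size
boxes = tile_boxes(980, 980, tile=490)

# Patches (hidden states) produced per tile, and in total across tiles.
per_tile = [((b - t) // PATCH) * ((r - l) // PATCH) for t, l, b, r in boxes]
total_tokens = sum(per_tile)

# Attention pairs inside the vision encoder: whole image vs. tile by tile.
whole_cost = (980 // PATCH) ** 4
tiled_cost = sum(n * n for n in per_tile)

print(len(boxes), "tiles,", total_tokens, "image tokens in total")
print("attention pairs, whole image:", whole_cost)
print("attention pairs, tiled:", tiled_cost)
```

In this toy setting the LLM still receives the same number of image tokens, but the vision encoder's attention work drops by a factor of four, at the price of tiles that cannot attend to each other inside the encoder.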

Optimizing Image Processing Techniques

And then, yeah, I guess the hidden size would be smaller too. So also how it talks to the rest of the LLM, it's a lot lighter. I'm just wondering; all that needs to be, like, tested and stuff. Okay. Not sure how to answer this one. I don't think I fully understood the last question.

Assessing Encoder Performance

Does having a smaller vision encoder not have, like, somewhat exponential benefits in terms of performance? Having a smaller vision encoder, why would it have exponential benefits? Well, when you pair it with the rest... I mean, when you're running inference at scale, if you have a tinier vision encoder, then the rest of the LLM has a much easier time.

Inferences on Smaller Encoder Usage

So, for example, right now, the main thing that slows it down on other devices is that, with some of them, you have to pass down 2000 tokens or more. And depending on what type of device people are on, they have to wait quite a bit for it to respond. I was just wondering if it makes more sense to use a smaller vision encoder but take more chunks out of the image, if that would actually kind of help.

The Impact of Encoder Size on Performance

I don't know if it's the size of the image encoder; it's more the resolution of the images that you pass to the image encoder that makes the difference. And there's a sweet spot to find, I think. But having a smaller vision encoder just hurts performance as well. You still have the advantages of scaling the vision tower for performance.

Finding the Right Balance in Model Scaling

Of the vision tower. Where exactly is the sweet spot? I'm not sure, but I think probably Lucas would know that better. Yeah. And those are just questions. Cool. If you're interested, let us know. Yeah. Lucas, if you want to chat, feel free to request to speak. Otherwise, are there any other questions about this paper? Aran, did you have anything else you wanted to ask?

Closing Thoughts and Acknowledgments

No, I think that's all I wanted to know. Yeah. Thank you so much, Leo. Yeah, this was a really interesting paper, I think. Yeah, it's an interesting paper. I think it would be a good resource for people who are building in this space. So, yeah, very cool. And thank you for sharing your work. No problem. Thanks for inviting me.

Transition to Next Paper Presentation

Awesome. So the next paper we have is about OLMoE, which is an MoE. So, you know, the Allen AI people have been developing their OLMo set of models, but this one is their latest one, which is an MoE. And so we have Nicholas, who is going to present that. Let's see, I'll add him as a speaker. So, yeah, Nicholas will be presenting this recent work. This is just from yesterday.

Introduction to New Project

So it's a pretty brand new project here that Nicholas will be talking about. So let's see, he should be added as a speaker now. There we go. Yeah, Nicholas, I guess take it away whenever you're ready. Sounds good. I just sent the thread as well to the chat, which I'm going to go through, essentially at a high level. The motivation for this work is that, as you all know, large language models can be really expensive.

Mixture of Experts in Model Training

And so with mixture of experts, we've seen that we can make them a lot cheaper, at least at inference. The model has a lot of parameters, but it doesn't use all of them, which makes it cheaper to use. But current mixture-of-experts models have largely been closed, so we haven't been able to access them and use them a lot in academia and for other applications. For example, Gemini 1.5 is using a mixture of experts, according to their paper, and reportedly GPT-4 is also using MoEs, as are many other models. So here we tried to make it a bit more open and share a hopefully good MoE with the community. And this resulted in OLMoE. So OLMoE has 1 billion active parameters and 7 billion in total. So this means that for each forward pass, it only uses 1 billion out of those 7 billion, but it has access to all the 7 billion.

Dataset Preparation and Training Process

And we pre-trained it for 5 trillion tokens on an open mix of datasets. So we also released a dataset. It essentially consists of DataComp. So DataComp was an effort that released a new dataset earlier this year based on Common Crawl, and we use their dataset plus some Wikipedia, some books, some code from the StarCoder effort, and mix that all together into a dataset of like 4 trillion tokens, and then also repeat it a bit at the end for a total of 5 trillion. And we find that after pre-training, the model does pretty well given its size. So within its size we find that it is the best model available. So at 1 billion active parameters, if we take that as the cost. So this is the cost that you need to pay for each forward pass: you need to use those 1 billion active parameters.

Comparison with Other Models

We find that it exceeds all other models. So for example, the previous OLMo 1B, which was a dense model released by AllenAI, as you just mentioned, and TinyLlama 1B. So these are models that have roughly the same cost per forward pass, because they use the same number of active parameters, and compared to them it's quite a bit better. So one of the metrics we measure is MMLU, where we find it has a score of like 54, and OLMo 1B, for example, is around 30, and TinyLlama 1B is near the random baseline of 25. And we find it is even competitive with some larger models, like Gemma 2 3 billion, or Gemma 2 2 billion, though it has 2.6 billion parameters.

Open Source Model and Community Engagement

So this one is a bit bigger. It has more than twice as many active parameters, 2.6 billion. And the difference from most of these larger models is that OLMoE is fully open. So we released the model weights with intermediate checkpoints every 5000 steps. So on the Hugging Face repository, there are like 244 checkpoints, any of which you can download. The data is also fully open, and then the code is of course fully open. So anyone should be able to reproduce the pre-training, assuming you can access the necessary compute. And then we've also released all the logs, the Weights & Biases runs and everything, so everyone should be able to use that.

Model Improvement through Adaptation

And so now that we have this pretty good open MoE, we further adapt it. We do some instruction tuning and some DPO, and find that after that it gets even better. So it matches some prior MoEs which were quite a bit larger. So after our adaptation, we find that it's better than, for example, Qwen1.5 3 billion. So this MoE has 3 billion active parameters out of 14 billion total. So roughly twice on both of those axes; OLMoE has 1 billion active and 7 billion total. But nonetheless we found that OLMoE is a bit better, probably because of the long pre-training duration and also possibly the better pre-training dataset. So the DCLM dataset is pretty good.

Specialization of Experts in Different Domains

In their paper they showed that it's the best open dataset in terms of how much performance you get for a given amount of training FLOPs. And so using that, I think, gave a big boost. And then also the post-training adaptation pipeline, adopted from Tülu and other works. So this is the performance. But I think what's also really interesting is that now that we have this open model, we can do a lot of cool analysis with it. So I'm going to briefly go into that. So one thing we look into is whether there's specialization in those experts. So one hypothesis would be, since only a subset of the model parameters are activated for a given input, maybe they specialize on different things.

Activation Patterns and Domain Specializations

So we explore specialization across different domains, looking at GitHub, arXiv, Wikipedia, books, and C4, and plot the activation patterns. And we find that for some of those domains, some experts really spike. So for example, for GitHub there are a lot of experts that are active almost all the time for any tokens that come from GitHub, and then those same experts are hardly active for tokens that come, for example, from Wikipedia. So there appears to be some domain specialization in those experts. A big factor here is probably that we have a lot of experts, so there are 64. That makes them more fine-grained, which means that they could focus on smaller subsets of the input data.
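A rough sketch of how such activation patterns could be measured: for each domain, count how often each expert is selected, as a fraction of that domain's tokens. The routing decisions below are invented toy data with 4 experts and top-2 routing, not results from the paper, and `expert_usage` is a hypothetical helper.

```python
from collections import Counter


def expert_usage(routed_experts, num_experts):
    """Fraction of tokens for which each expert was selected.

    `routed_experts` holds, per token, the list of experts the router chose.
    """
    counts = Counter(e for chosen in routed_experts for e in chosen)
    n_tokens = len(routed_experts)
    return [counts[e] / n_tokens for e in range(num_experts)]


# Toy routing decisions (made up for illustration only).
toy_routes = {
    "github": [[0, 1], [0, 2], [0, 1], [0, 3]],
    "wikipedia": [[2, 3], [1, 3], [2, 3], [2, 1]],
}

for domain, routes in toy_routes.items():
    print(domain, expert_usage(routes, num_experts=4))
```

In this toy data, expert 0 fires on every GitHub token and never on Wikipedia tokens, which is the kind of spike the activation plots are meant to reveal.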

Token Specialization and Routing Patterns

We also look at token specialization. So whether there are certain token IDs that always get routed to specific experts, and we find there are some experts that, for example, process a lot of geography-related tokens or a lot of measurement tokens and so on. So some of these tokens, with 100% probability, kind of always get routed to a certain expert. There was an interesting previous work called OpenMoE where they also found that some experts are almost exclusively used for certain token IDs. And they found that the router, which decides which expert processes a certain input token ID, saturates pretty early. We also find the same thing: very early during pre-training, the router doesn't change the assignments as much anymore.

Pre-Training Experiments and Routing Algorithms

And so you kind of have the same token IDs keep getting routed to the same experts, and this is why they find the specialization. And everything's open. There are also lots of pre-training experiments we ran, looking at how many experts you want to use and how the routing algorithm works. So one common paradigm is token choice, where you select the experts per token, whereas expert choice is what many other MoEs, at least those that have not been fully released, are apparently using. For expert choice, the expert selects the tokens instead. And the advantage here is that if the expert selects the tokens, you can set a fixed number of tokens per expert and thereby achieve perfect load balancing.
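The two routing paradigms can be contrasted with a small sketch. The router scores below are invented, and the helper names `token_choice` and `expert_choice` are hypothetical; real implementations operate on batched tensors and add auxiliary losses, which this sketch leaves out.

```python
def token_choice(scores, k):
    """Each token picks its top-k experts. scores[t][e] is the router score."""
    assign = {e: [] for e in range(len(scores[0]))}
    for t, row in enumerate(scores):
        top = sorted(range(len(row)), key=lambda e: row[e], reverse=True)[:k]
        for e in top:
            assign[e].append(t)
    return assign


def expert_choice(scores, capacity):
    """Each expert picks its top-`capacity` tokens."""
    assign = {}
    for e in range(len(scores[0])):
        ranked = sorted(range(len(scores)), key=lambda t: scores[t][e], reverse=True)
        assign[e] = sorted(ranked[:capacity])
    return assign


# Toy router scores for 6 tokens and 3 experts (made up for illustration).
scores = [
    [0.90, 0.10, 0.00],
    [0.80, 0.10, 0.10],
    [0.70, 0.25, 0.05],
    [0.55, 0.10, 0.35],
    [0.20, 0.50, 0.30],
    [0.10, 0.20, 0.70],
]

tc = token_choice(scores, k=1)
ec = expert_choice(scores, capacity=2)
print("token choice load: ", [len(tc[e]) for e in range(3)])
print("expert choice load:", [len(ec[e]) for e in range(3)])
```

With token choice, most tokens here pile onto expert 0, while expert choice gives every expert exactly two tokens. In general, expert choice does not guarantee that every token is picked by some expert, which is one of the trade-offs between the two schemes.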

Exploring Enhancements and Collaboration

Like, every expert gets the same number of tokens, so you don't run into any issues where maybe all tokens want to go to the same expert, and then it's very unbalanced. And yeah, a bunch more experiments. One that is also cool, I think, is sparse upcycling, which is a great paper from Aran. We tried that, but we found that in our regime it doesn't seem to work as well. So we try to upcycle OLMo 1B, which is this dense language model that AllenAI previously released, and it was already pre-trained for 2 trillion tokens. And so we're upcycling this dense model at 2 trillion tokens into an MoE.

Challenges with Upcycling Methodology

So it works, like the performance keeps on improving a little bit, but very quickly, an MoE trained from scratch catches up with this upcycled model. And one hypothesis is that if you upcycle a model that has been overtrained to this extent, its parameters are already in a very optimal space. So trying to have the experts learn different things will be very difficult because they're initialized from this really super optimal MLP. Right? For sparse upcycling, you copy the MLP multiple times and then keep training it as an MoE. And so if they're already in this very optimal space, they'll probably have trouble learning very different things and specializing.
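The initialization step of sparse upcycling described above, copying the dense MLP once per expert, can be sketched as follows. The weights are toy flat lists standing in for real tensors, `upcycle_mlp` is a hypothetical helper, and the optional Gaussian noise is included only to illustrate the variant mentioned later in the conversation. The router, which has no dense counterpart, would be freshly initialized and is not shown.

```python
import copy
import random


def upcycle_mlp(dense_mlp, num_experts, noise_std=0.0, seed=0):
    """Create `num_experts` experts, each a copy of the dense MLP weights.

    If noise_std > 0, independent Gaussian noise is added to every weight.
    """
    rng = random.Random(seed)
    experts = []
    for _ in range(num_experts):
        expert = copy.deepcopy(dense_mlp)
        if noise_std > 0:
            for name, weights in list(expert.items()):
                expert[name] = [w + rng.gauss(0.0, noise_std) for w in weights]
        experts.append(expert)
    return experts


# Toy "MLP" with flat weight lists (illustrative values only).
dense = {"up_proj": [0.5, -0.2, 0.1], "down_proj": [0.3, 0.3, -0.4]}

experts = upcycle_mlp(dense, num_experts=4)
noisy = upcycle_mlp(dense, num_experts=4, noise_std=0.01)

print(all(e == dense for e in experts))  # every expert starts identical
print(noisy[0] != noisy[1])              # noise breaks the symmetry
```

Because every expert starts from the same weights, they only diverge through routing and further training, which fits the hypothesis above that a heavily trained dense MLP leaves little room for the copies to specialize.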

Future Research and Usage Options

And that's possibly why it didn't help here. But I'd be very curious to hear Aran's thoughts or other people's thoughts on upcycling. Yeah, and I think that's it. So if anyone has questions, feel free to jump in. There's a lot more in the paper. And yeah, feel free to use all the resources. I hope it's useful to the community. Yeah, thank you. Again, if people have questions, feel free to reply to the space. We do have a few questions, but maybe Aran, if you want to start out, if you had any questions first.

Discussion on Upcycling and Performance

Yeah, so I saw one of the plots about sparse upcycling versus training from scratch in your paper, and I totally agree about the instability of the upcycling method. Yeah, it's very unstable, and that's one of the reasons why it does not work in some instances. And I saw some Meta paper that sort of says sparse upcycling worked pretty well, which I don't know why. So the thing is, at the larger scale, I didn't really get stable results. So yeah, honestly, probably those Meta guys know way more about sparse upcycling at large scale. And also, you know.

Insights on Expert Choice vs Token Choice

Yeah, so my experience at this scale was comparable to yours, and I agree with your conclusion. As for token choice versus expert choice, we actually tried it on T5 and a vision transformer, so not a transformer decoder. And we found that expert choice performed better. But it's interesting to know that token choice is better in this setting. Oh yeah, thanks for the thoughts. I think on expert choice versus token choice, one difference is probably that we're using dropless token choice, so no tokens are dropped, whereas I think it's very common to use the dropping variant.
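The two routing schemes compared above differ only in which side does the picking. The following is a toy sketch with made-up router scores; the function names are hypothetical and real implementations operate on batched tensors.

```python
def token_choice(scores, k):
    """Each token picks its top-k experts. scores[t][e] is a router score."""
    assignment = []
    for row in scores:
        top = sorted(range(len(row)), key=lambda e: row[e], reverse=True)[:k]
        assignment.append(sorted(top))
    return assignment  # one list of expert ids per token

def expert_choice(scores, capacity):
    """Each expert picks its top-`capacity` tokens."""
    num_experts = len(scores[0])
    assignment = []
    for e in range(num_experts):
        top = sorted(range(len(scores)),
                     key=lambda t: scores[t][e], reverse=True)[:capacity]
        assignment.append(sorted(top))
    return assignment  # one list of token ids per expert

# Four tokens, three experts; every token prefers expert 0.
scores = [
    [0.9, 0.1, 0.0],
    [0.8, 0.2, 0.0],
    [0.7, 0.1, 0.2],
    [0.6, 0.3, 0.1],
]
tc = token_choice(scores, k=1)          # all four tokens land on expert 0
ec = expert_choice(scores, capacity=2)  # every expert gets exactly two tokens
```

With token choice the load can collapse onto one expert, while expert choice is balanced by construction but lets some tokens be processed by more experts than others.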

Further Exploration on Training Techniques

So there's a capacity factor per expert, and if too many tokens get sent to one expert, then I think in many prior works they just drop them, whereas we're using MegaBlocks, which avoids this dropping issue and leads to better performance. And on the upcycling, that's very interesting. I think the paper you referred to is the MoMa paper from Meta. And yeah, I think they upcycled very early during training, so they just train the dense model for a little bit and then upcycle after a few thousand steps.
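To make the dropping behavior concrete, here is a small sketch that counts how many tokens overflow a per-expert capacity under top-1 routing. The `dropped_tokens` helper and the capacity formula (capacity factor times tokens divided by experts) are a common convention assumed for illustration, not taken from the paper.

```python
from collections import Counter

def dropped_tokens(expert_ids, num_experts, capacity_factor):
    """Count tokens that exceed each expert's capacity (top-1 routing)."""
    capacity = int(capacity_factor * len(expert_ids) / num_experts)
    load = Counter(expert_ids)
    return sum(max(0, load[e] - capacity) for e in range(num_experts))

# Eight tokens, four experts; five tokens want expert 0.
routing = [0, 0, 0, 0, 0, 1, 2, 3]
dropped_at_1_0 = dropped_tokens(routing, num_experts=4, capacity_factor=1.0)
dropped_at_2_0 = dropped_tokens(routing, num_experts=4, capacity_factor=2.0)
```

In this toy case three tokens are dropped at a capacity factor of 1.0 and one at 2.0. A dropless scheme processes all eight regardless of how skewed the routing is.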

Performance With Alternative Methods

So maybe that's the better way to approach it. And I think they get some FLOP improvements from that. Yeah, I'd be very curious to experiment more with that. You can also just add noise too. Oh, to the upcycling? Yeah, we tried that. I think they also do that in Qwen, but we found that it doesn't help. So there's an ablation experiment on that in the appendix of the paper. I think we added like 50% random Gaussian noise to the MLPs.

Exploring Merger Techniques and Scaling Challenges

I think also in the sparse upcycling paper you mentioned that you tried adding noise and it did not help. It's pretty, like, with that one you've got to do either... well, some people tried an evolutionary merge, but it's pretty hard to find the sweet spot of the config. And others have also tried to do TIES merging and stuff between the layers. But again, you don't know how much that is going to hurt the MLP. Wait, how long did you guys train for? Like, how long did the whole thing take to pretrain?

Training Duration and Experimentation Insights

Actually not that long. It was 5 trillion tokens, and it took a bit more than ten days, I think like twelve days. It's just that running all the experiments took a lot of time, and then writing everything up, compiling everything, and uploading all the checkpoints. But yeah, the actual run only took like 14 days, I think, on 256 H100s. Yeah. We have a couple of other questions here as replies to the Space. Someone was asking if you've explored other routing methods in addition to token choice and expert choice, like Sinkhorn.

Exploring Additional Routing Strategies

Oh, great question. Yeah, we did not explore other routing algorithms, but those would be really promising, I think. Also, yeah, Sinkhorn, BASE layers. I think there are many other cool routing strategies that could be tried. And then the same person also asked, what's the reasoning behind 5 trillion tokens in training? That's way beyond the compute-efficient frontier in terms of the total parameter budget.

Efficiency Considerations in Model Training

Yeah, good point. I think it's similar to the reasoning behind the Llama models, where we hope that these models get used at inference. And if you account for the time that people will spend using these models for inference, it will actually be compute efficient, because it will have been worth it. By overtraining, we reduce the inference cost, and so hopefully people will use it and it'll be useful that way. So we're kind of taking the cost on ourselves for the training so that hopefully people can use it more cheaply during inference.
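The amortization argument above can be made concrete with a rough calculation. This sketch assumes the common rules of thumb of about 6 FLOPs per active parameter per training token and about 2 FLOPs per active parameter per inference token. The 7-billion-parameter comparison model and its token count are hypothetical, chosen only to show the break-even effect, and the sketch says nothing about whether the two models reach the same quality.

```python
def total_flops(active_params, train_tokens, inference_tokens):
    """Rule-of-thumb lifetime compute: training plus inference."""
    train = 6 * active_params * train_tokens      # assumed training cost
    infer = 2 * active_params * inference_tokens  # assumed forward-pass cost
    return train + infer

# Small model trained on many tokens (1B active parameters, 5T tokens).
small = dict(active_params=1e9, train_tokens=5e12)
# Hypothetical larger model trained on fewer tokens, for comparison only.
large = dict(active_params=7e9, train_tokens=5e11)

# Before any inference, the small overtrained model has cost more.
small_at_zero = total_flops(inference_tokens=0, **small)
large_at_zero = total_flops(inference_tokens=0, **large)

# After one trillion served tokens, the small model is cheaper overall.
small_at_1t = total_flops(inference_tokens=1e12, **small)
large_at_1t = total_flops(inference_tokens=1e12, **large)
```

Under these assumptions the extra training cost is paid back once enough tokens have been served, which is the trade the speaker describes.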

Future Directions in Token Utilization

I think we can go even further. I was actually thinking of doing 15 trillion tokens at the beginning, but then we thought we'd start with 5 trillion and maybe follow up at some point. Go ahead. Will people be able to do continued pretraining? Like, let's say they made an architectural change. I guess they're able to, right, because you guys did the first, like, full 100% open source.

Continued Training and Expansion Opportunities

Yeah, I think since all the checkpoints are open, you can definitely do continued pretraining. One problem is that we use a cosine schedule, which makes it a bit difficult, maybe. So you probably have to warm up the learning rate again and then kind of restart the schedule, so it might work. But one thing we actually want to change in the model is the tokenizer. We want to make it a bit bigger, so we might have to do another from-scratch run.
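The schedule issue mentioned above can be illustrated with a small sketch. A cosine schedule ends at its minimum learning rate, so a continued run has to warm up again and start a fresh decay. The `cosine_lr` helper and every number below (step counts, peak and minimum rates) are hypothetical and not the values used for the model.

```python
import math

def cosine_lr(step, total_steps, peak_lr, min_lr, warmup_steps):
    """Linear warmup to peak_lr, then cosine decay down to min_lr."""
    if step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(progress, 1.0)
    return min_lr + 0.5 * (peak_lr - min_lr) * (1 + math.cos(math.pi * progress))

# The original run finishes at its minimum learning rate.
end_of_run = cosine_lr(10_000, 10_000, peak_lr=4e-4, min_lr=4e-5,
                       warmup_steps=500)

# A continued run re-warms to a (here lower) peak and decays again.
restart = [cosine_lr(s, 2_000, peak_lr=2e-4, min_lr=2e-5, warmup_steps=200)
           for s in range(2_000)]
```

The re-warmup step is a judgment call: warming up too high can undo what the original decay phase learned, while too low a peak leaves little room for further progress.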

Future Model Changes and Research Potential

But if people want to continue pretraining it, I think that would be super exciting. I'd love to hear more about it. Someone also asked what the reasoning is behind selecting the size of the model. Good point. I think we wanted it to be small so it's very accessible. With 1 billion active parameters, it's very useful for a lot of use cases where, you know, you can't afford those big models.

Accessibility and Configuration Insights

And then also, of course, it's a bit easier if you train a small model rather than going too big. But we hope that we can scale up more in the future. And then it seems like a nice combination. The prior OLMo models, the dense OLMo models that were previously released, were 1 billion and 7 billion. So we kind of combine them both: we have 1 billion active and 7 billion total.

Exploring Model Configurations

So right in between. But yeah, hopefully we'll be able to have future models with different configurations. Okay, that's cool. Yeah. Well, one question I had was, you did this sort of analysis of the domain specialization, if I recall correctly. I think for the Mixtral model they also did an analysis of domain specialization and didn't really find any.

Analysis of Domain Specialization Factors

What do you think determines when a model uses experts to specialize for different domains versus when it doesn't? Is there any research looking into that? And is there anything you guys have seen in your work that analyzes this? Yeah, great point. I think we also plotted Mixtral and compared with it in our paper, and we find that, as you say, there's hardly any specialization in Mixtral, or much less.

Understanding Specialization in Mixtral

And my current hypothesis is that it's because of the upcycling, because they upcycled from Mistral. I think they haven't officially confirmed it, but a lot of people have compared the weights and proposed that. Maybe Aran has some thoughts on that, but I think that because of the upcycling, the experts can specialize a bit less. If you upcycle from a model, the experts are still very similar and they get used for very similar tokens.

Factors Influencing Model Specialization

So that's why we see less specialization in Mixtral. And another factor, I think, is the number of experts. In OLMoE we have 64 experts in every layer, which is quite a lot. So it makes a lot more sense: you can segment the knowledge into smaller pieces, and those experts can possibly specialize more. Right. Because in the limit, if you have just one expert, then of course there's no specialization, because everything goes to that one expert.

Specialization Dynamics with Multiple Experts

So the more experts you have, maybe the more specialization it encourages. For sparse upcycling, if you take a look at the entropy of the routing, it was originally very low and then it gradually improves. But if I recall correctly, it never reaches the same level as an MoE trained from scratch. So that means it's less diverse than a real MoE.
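One way to quantify the routing diversity mentioned above is the entropy of how tokens are spread over experts. The exact quantity the speaker measured is not specified, so this sketch assumes the entropy of the expert-usage histogram; `routing_entropy` is a hypothetical helper.

```python
import math
from collections import Counter

def routing_entropy(expert_ids, num_experts):
    """Shannon entropy (in nats) of the expert-usage distribution."""
    counts = Counter(expert_ids)
    total = len(expert_ids)
    entropy = 0.0
    for e in range(num_experts):
        p = counts[e] / total
        if p > 0:
            entropy -= p * math.log(p)
    return entropy

collapsed = routing_entropy([0] * 8, num_experts=4)          # one expert used
uniform = routing_entropy([0, 1, 2, 3] * 2, num_experts=4)   # all used equally
```

Entropy is zero when every token goes to the same expert and reaches its maximum, the log of the number of experts, when usage is perfectly even.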

Exploring Specialization Techniques

Yeah. Someone asked if there's any way to force more specialization in some of the experts. I guess part of it is maybe just having more experts, like you say, but I don't know, maybe that's an interesting research question, whether there's a way to force more specialization. Yeah, I think so too. I think one big problem right now is that we force the models to load balance inputs equally.

Load Balancing and Specialization Challenges

So in our setup we have a load balancing loss, which essentially forces the model to try to allocate all tokens equally across experts during training. And that goes directly against specialization, because now the model can't always use a certain expert for some input data. With expert choice it's somewhat similar: you force all experts to always take the same number of tokens. So even with expert choice, where you don't have a load balancing loss, I think it might not be great for specialization, because all experts are forced to take the same number of tokens.

Research Opportunities for Dynamic Routing

So figuring that out I think is a great research question, like how can you maybe make it more dynamic? But then the problem is if you remove the load balancing loss, we have an experiment on that actually in the paper. If you just remove the load balancing loss and do token choice, then all tokens will get routed to just one or two experts and all the other experts become like dead weights. And so it's very inefficient for training and also will lead to worse performance.
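For readers unfamiliar with load balancing losses, here is a minimal sketch of one widely used form, the Switch Transformer style auxiliary loss: the number of experts times the sum over experts of (fraction of tokens routed to the expert) times (mean router probability for the expert). The exact loss used in the paper may differ; the function and the toy inputs are assumptions for illustration.

```python
def load_balancing_loss(router_probs, expert_ids, num_experts):
    """Switch-style auxiliary loss for top-1 routing (assumed form).

    router_probs[t][i]: router probability of expert i for token t.
    expert_ids[t]: expert actually chosen for token t.
    """
    n = len(expert_ids)
    frac_tokens = [sum(1 for e in expert_ids if e == i) / n
                   for i in range(num_experts)]
    mean_prob = [sum(p[i] for p in router_probs) / n
                 for i in range(num_experts)]
    return num_experts * sum(f * p for f, p in zip(frac_tokens, mean_prob))

# Perfectly balanced routing over four experts.
balanced = load_balancing_loss([[0.25] * 4 for _ in range(4)],
                               [0, 1, 2, 3], num_experts=4)

# Collapsed routing: every token goes to expert 0 with high probability.
collapsed = load_balancing_loss([[0.97, 0.01, 0.01, 0.01] for _ in range(4)],
                                [0, 0, 0, 0], num_experts=4)
```

The loss is lowest when usage is uniform and grows as routing collapses, which is exactly the pressure that works against letting one expert own a domain.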

Combining Performance with Efficient Routing

So how can you keep the good performance but at the same time allow more variable allocation, more variable routing to the different experts? If people have thoughts on this or want to research this, I think it's a super interesting question. How come you guys went with picking eight experts per token instead of doing the more traditional MoE, where you just have eight MLPs and you pick two? Was it like DeepSeek's approach, where they pick like two plus four?

Expert Selection and Routing Decisions

Did that influence it? Or I guess it just helps with the MLP, so it doesn't matter as much since they're all initialized the same way. Yeah, that was inspired by DeepSeek. In the DeepSeekMoE paper, they show that you want to have very fine-grained, small experts, and that it leads to better performance. And the intuition there is, the more experts you have, the more combinations are possible via the routing.

Potential Advantages of Increased Expert Count

So if I have only one expert, then there's no possible combination; it always goes to that one expert. If I have two experts and one is activated, then there are two different ways we can route. And as I increase the number of experts and the number of activated experts, there are more possible combinations for the model to route input tokens. And we find that this leads to better performance, because you're kind of giving the model more flexibility.
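The combinatorial intuition above is easy to check: the number of distinct expert subsets a token can be routed to is a binomial coefficient. The sketch below compares the 8-choose-2 layout mentioned in the question with the 64-expert, 8-active layout discussed in this conversation; `routing_combinations` is just an illustrative wrapper.

```python
import math

def routing_combinations(num_experts, active_experts):
    """Number of distinct expert subsets available to one token."""
    return math.comb(num_experts, active_experts)

coarse = routing_combinations(8, 2)   # 28 possible subsets
fine = routing_combinations(64, 8)    # 4,426,165,368 possible subsets
```

This counts subsets only and ignores the routing weights within a subset, so it is a lower bound on the flexibility the router has.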

Experiments on Expert Configuration

And so we have an experiment on this where we compare, I think, 8, 32, and 64 experts, and 64 was a lot better. We could probably go with even more, but at some point the router gets very big. The more experts you have, of course, the bigger your router is, because it has to produce one probability for each of those experts. So you probably don't want to go too big. But definitely, more experts helps a lot.

CPU and GPU Performance Insights

The other thing I noticed, which should help here, is that with DeepSeek you basically have the best intelligence you can run on a CPU. Because you have a lot of tiny weights, it makes very good use of the big CPU cache. And that should help here a lot too. I'm just setting up a Hugging Face Space for it as well. Yeah, I posted it here if anybody else wants it.

Exploring Deployment Options on Hugging Face

There's a GPU Space, but also a CPU one. I'm just interested to see how well it'll run. Oh, that's really interesting. Yeah, I think someone was also looking into integrating it into some CPP library or something. Maybe that'd be useful. Yeah, they will do it pretty fast, a couple of days. But yeah, if anyone else is going to use it, this one needed the pull request that you guys listed in the instruct version on Hugging Face.

Compatibility Challenges with Model Workflow

And that was the only thing that worked. Force-upgrading Transformers to the latest version didn't work. Anyway, I've been trying the model out. It's pretty funny, actually, how it follows the system prompt really well. Oh, nice. Like, the instruct model works well. Yeah, because I told it to be like Andrej Karpathy, who's a step function machine and answers with full code and stuff.

Interesting Training Scenarios

And then I just wrote "meow", and it tried to write code around meowing, and it was pretty funny. That's great. I had a question about the training. How was that? Because when people have over 100 GPUs, or when you go to 200 or 1,000, it becomes a complete headache. Did you guys have help, or did you just do it yourselves?

Collaboration and Support in Training

Yeah. So AI2 has a great cluster team. They're really amazing, and they made the whole cluster work. And then also, I have to say, we weren't super big. We trained on 256 H100s, so it's still probably more manageable than some larger runs. For example, for DBRX, I think they probably used a ton more. That's probably even more tricky.

Discussion on Training Performance

So, overall, it went pretty well. I think we had one downtime during the training, and otherwise the loss and everything was very smooth. There are also loss plots, I think, in the paper, and of course the Weights & Biases logs are open, so people can take a look at the loss if they're interested. There were no major spikes or any other problems. Is there any other question? Okay, so, yeah. Thank you for coming, Nicholas. Yeah, this was a very good discussion. Thank you, Nicholas. Thanks so much for having me. Yeah, I guess we'll move on to the next papers. We just have a few other papers that we wanted to briefly cover.

Introducing the Next Paper

So I'll start with the next paper, and then Aran will continue. The next paper I wanted to cover is "Diffusion Models Are Real-Time Game Engines". I think many people have seen this paper already, but I thought I'd do a bit of a deeper dive into it, so I'm going to put it at the top of the Space right now. Let me just pull up my notes. Yeah, this is a paper from Google, actually, and it's a very interesting paper. It's asking the question: can a neural model run in real time while simulating a complex game? Basically, can you have a neural network act like a game engine?

Goals of the Paper

So that's their goal with this paper, to answer this question. And they do this by trying to simulate the game Doom, which, I guess, is a game that the authors are familiar with and have played a lot, as they mention. But of course it's also a commonly used game for research. And I guess they want to answer the question of whether diffusion models, or neural networks, can run Doom. That's also part of the goal here. So basically, at the core of it, this is just a diffusion model that is given the previous frames and actions and predicts the next frame that should be rendered.

Game Simulation and Model Training

In order to do this, they first need to generate some gameplay to train on. They want gameplay at different skill levels: you want beginner gameplay as well as hard or expert gameplay. So they actually train a separate agent model to learn how to play Doom in a Doom environment that already exists. And then they take the runs from the agent as it was learning, and that's what they use as training data for their diffusion model. The agent itself is pretty simple. It's trained with PPO, proximal policy optimization. It's just a simple CNN that takes in the current frame and also the last 32 actions that were performed, and then it predicts what the next action should be.

Agent Training Methodology

And yeah, it's a pretty simple PPO agent model here. All the gameplay from that PPO model while it was being trained, and finally when it's done training, is saved and used as training data for the diffusion model. The diffusion model is just Stable Diffusion 1.4 fine-tuned on this data. Basically, it's conditioned on the actions and the previous frames. So the text conditioning is removed. Instead, the model learns an embedding for the actions, and each action is basically put in a single token. So there's an action token, and it has an embedding for the action that was taken.

Data Processing and Model Adjustments

For example, some specific key press. And the cross-attention with the text is replaced with cross-attention with the actions. Then you also have all the previous frames. Those are passed through the VAE to get the latents for the previous frames, and those are concatenated to the noised latents along the latent channel dimension. So they're part of the channel dimension of the latents. The other thing is that they fine-tune the decoder. The model, of course, produces latents, and those get passed into the decoder to get the final pixel frame. That is the VAE decoder that comes with Stable Diffusion.
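The channel-wise concatenation of past-frame latents described above can be sketched with plain nested lists. The 4 latent channels match the Stable Diffusion 1.x VAE and the 64-frame context comes from this discussion, but the tiny 2x2 spatial size and the helper names are assumptions for illustration only.

```python
def make_latent(channels, height, width, fill):
    """A latent as a list of channels, each an H x W grid of one value."""
    return [[[fill] * width for _ in range(height)] for _ in range(channels)]

def concat_on_channels(noisy_latent, past_latents):
    """Stack the noised latent and all past-frame latents along channels."""
    stacked = list(noisy_latent)
    for latent in past_latents:
        stacked.extend(latent)
    return stacked

context_frames = 64
noisy = make_latent(4, 2, 2, 0.0)
past = [make_latent(4, 2, 2, float(i + 1)) for i in range(context_frames)]

# 4 channels for the noised latent plus 4 per context frame: 260 in total.
unet_input = concat_on_channels(noisy, past)
```

Conditioning this way means the first convolution of the denoiser has to accept many more input channels than the original text-to-image model, while the rest of the network can stay unchanged.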

Fine-Tuning and Model Performance

But here they actually fine-tune that decoder on some of the Doom images as well. They fine-tune it with an LPIPS loss, and that slightly improves the quality of the output images. The other thing is that there is a divergence between training and the autoregressive application of the model. At inference, you generate one frame and then you generate the next one from it, whereas in training you already have the previous ground-truth frames, and that's what you're using to generate the next one. So there's this difference: the training is more like teacher forcing, whereas the inference is autoregressive.

Addressing Divergence Issues

So there's some divergence that happens, where you actually get drift and decreasing quality as you generate images over multiple frames. They try to fix this by corrupting some of the previous frames at training time: they add some noise to the previous frames that the model is conditioned on. That seems to help prevent some of this divergence and improve the quality during autoregressive inference. The model has a context length of 64, which means it's looking at the last 64 frames. During training, those are the ground-truth frames. At test time and inference, those are the last 64 predictions of the model.
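The noise-corruption trick described above can be sketched as follows. Frames are represented as flat lists of floats for brevity. The `corrupt_context` helper, the uniform sampling of the noise level, and the maximum level of 0.7 are assumptions for illustration rather than details stated in this discussion.

```python
import random

def corrupt_context(context_latents, max_noise_std, rng):
    """Add Gaussian noise of one randomly sampled level to all context frames.

    Returns the corrupted frames and the sampled level, which a model could
    also receive as an extra input (an assumption, not stated in the talk).
    """
    noise_std = rng.uniform(0.0, max_noise_std)
    noisy = [[x + rng.gauss(0.0, noise_std) for x in frame]
             for frame in context_latents]
    return noisy, noise_std

rng = random.Random(0)
clean_context = [[0.0] * 8 for _ in range(64)]  # 64 context frames
noisy_context, level = corrupt_context(clean_context, max_noise_std=0.7, rng=rng)
```

Training on corrupted context exposes the model to imperfect inputs, which is closer to what it sees at inference when the context consists of its own earlier predictions.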

Model Context and Actions

And then it also has the last 64 actions. The actions, both during training and inference, come from the existing trajectories of the agent model, the agent gameplay that they trained. So they have the actions that the agent took, and they use those during training and also during inference. But yeah, it's kind of surprising that this short context length of only 64 seems to be enough to get decent results. Then, during inference, they use a few different tricks, like classifier-free guidance.

Model Techniques and Findings

Interestingly, they only apply it to the conditioning on the previous frames and not to the conditioning on the actions, because apparently having classifier-free guidance on the actions doesn't seem to help. So that's something interesting: it only seems to help with the frames. The other thing is they only use four DDIM sampling steps. That seems to be enough, and there doesn't seem to be much improvement if you use more steps, which is kind of expected with the Stable Diffusion models.
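Applying classifier-free guidance to the frame conditioning only, as described above, can be sketched like this. The `denoise` callable, the toy stand-in for it, and the guidance scale of 1.5 are hypothetical. The point is that the actions are passed to both the conditional and the unconditional call, so only the frame context is guided.

```python
def guided_prediction(denoise, noisy, frames, actions, guidance_scale):
    """Classifier-free guidance over the frame context only."""
    cond = denoise(noisy, frames, actions)
    uncond = denoise(noisy, None, actions)  # drop frames, keep actions
    return [u + guidance_scale * (c - u) for c, u in zip(cond, uncond)]

def toy_denoise(noisy, frames, actions):
    """Stand-in model: shifts the input by a frame term and an action term."""
    frame_term = 0.0 if frames is None else 1.0
    return [x + frame_term + 0.1 * len(actions) for x in noisy]

out = guided_prediction(toy_denoise, [0.0, 1.0], frames=["frame"],
                        actions=[1, 2], guidance_scale=1.5)
```

In the toy model the frame term is amplified by the guidance scale while the action term passes through unscaled, which mirrors guiding on frames but not on actions.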

Performance Results and Challenges

Yeah, even a small number of steps can get decent performance. But four is definitely on the lower side, which is kind of interesting. Because they're able to use only four sampling steps, and this is being run on a TPU, they get a performance of 20 frames per second. So that becomes kind of real-time generation at that point. In terms of their results, they can do an analysis of the image quality, looking at individual frames and seeing the quality of those, and they can do an analysis of the video quality.

Qualitative Assessment of Image Results

When they look at the image quality, they're basically looking at the generated image for the current frame and comparing it to the ground truth. And they show that the quality is basically similar to what you would get with lossy JPEG compression at quality settings of 20 to 30. So it's pretty decent. It's like you're just compressing a JPEG image; that's the sort of quality that you're getting. So it's pretty comparable. In terms of video quality, the problem that makes it hard to analyze is that there is a divergence between the predicted trajectory and the ground-truth trajectory, and it diverges after just a few steps.

Challenges in Video Quality Assessment

And it's just small differences in the movement. But that makes it hard: even though the generated trajectory will look similar to actual gameplay, it is still different from the original ground-truth trajectories, so it is a little bit hard to compare directly against them. But they did a human rating analysis, and they show that the human raters rate this as pretty comparable: they only choose the actual game over the simulation like 58% of the time.

Human Ratings and Model Comparison

So it is pretty comparable, in that the generation from the model does look very similar to the actual gameplay. And I think overall the conclusion of this paper is that diffusion models can, I guess, simulate gameplay pretty well. What's surprising, I think, is just the fact that it's actually a very simple approach. It's just a Stable Diffusion model that's conditioned on past frames and actions. And even then it's only about 3 seconds of past frames and actions (64 frames at 20 frames per second).

Overall Insights and Potential Directions

So it's not even that much of a time horizon, you don't have that much history, and even then, based on all that, it's able to generate decent gameplay. I think that's a bit surprising. But of course this isn't perfect. There's a lot of work to do to improve this. There's been a lot of discussion about this paper on Twitter, and some people were saying the gameplay isn't fully representative of actual Doom gameplay, and there are still lots of mistakes and stuff like this.

Exploration of Game Simulation Limitations

So, you know, an actual Doom player would probably notice all these different issues. So it's obviously not perfect. And you could also imagine there's a problem if you move away from one location and then try to come back: you won't get that same state again when you return. There are a lot of things like this, I guess mainly because the model doesn't have any good way of storing state, for example.

Future Perspectives

There are still lots of issues with the model. But I think the point is that this is just a proof of concept, to demonstrate that this is something that can be done, that you can simulate games with neural networks. Obviously, simulating an existing game with a neural network isn't particularly interesting by itself. But I think in the future, what will be interesting is being able to generate games on the fly, basically.

Long-Term Vision for Game Generation

And the neural network can be conditioned on all kinds of things about what you're interested in. There could be a more personalized aspect to the game and things like this. So I think this is a paper that is a proof of concept exploring the potential of utilizing neural networks as a game engine. And I think that's what makes this paper really exciting. There's a lot of discussion along the lines of, oh, what's the point of this paper?

Critical Perspectives on AI Research

All it's doing is simulating an existing game with significantly more compute and resources and what's the point? And you know, I think a lot of people. I was a bit kind of disappointed that a lot of people aren't looking at the bigger picture here and what can be done in the future with this paper. And I think that's what's more exciting. And yeah, certainly it's an interesting demonstration that it's able to simulate doom, but obviously by itself it's not a very useful use case. But in the future I think there's lots of opportunity and lots more to explore.

Transitioning to the Next Paper

So yeah, that's what I have to say about that paper. Aran, did you want to add anything? Yeah, so I guess we are supposed to use this to simulate the real world, which is not otherwise possible to simulate. For a video game environment we can easily just use the video game itself as the setup, but for the real world we cannot. So I guess that's going to be the prime application.

Discussion on AI Application Domains

I don't know; that just becomes more like a video diffusion model that you interact with. I mean, that's certainly something as well. But I think there are still more applications, and thinking about it as a game engine that is flexible and adaptable is also kind of interesting.

Future Applications of Game Engines

And there are maybe a lot of opportunities there which will need to be explored further. But yeah, simulating the real world, creating a simulated environment of the real world that you can interact with, would also be very interesting. Some of the video diffusion model work starts to get there, but there's no real way of taking actions in those video diffusion models.

Integrating Agents in Simulations

So maybe that's something that needs to be explored further too, how you can take actions. But the thing here is that they also used this agent to gather training data. So maybe you also need some sort of agent. I don't know, especially with some of these robotics models and things like this, maybe there's something to explore there. I'm not entirely sure, but I think this paper wasn't really thinking about that too much. It was thinking more about the potential applications of neural networks as a very flexible game engine.

Conclusion and Future Exploration

And they talk a little bit about this in the conclusion and future work of the paper at the end, where it's like they talk about this kind of being a new paradigm for interactive video games where, you know, you could imagine, like, you could, for example, maybe design your own playable levels by creating a few frames and then passing that into the diffusion model without having to write up code for the separate level altogether. Things like this, where like, the model is able to take information and generate this sort of, you know, new gameplay or new environments for gameplay. And that's kind of where the opportunity maybe comes into play for gaming applications specifically.

Synthetic Data Generation Discussion

But yeah, maybe there are other applications as well. Yeah, that makes sense. I hope that there are other domains of application as well, even if not something as complex as the real world. As you know, I'm a big fan of synthetic data generation and augmentation, because, for example, in the case of robotics, there's not much data to begin with. And typically in settings like this, data augmentation is very powerful.

Potential for Data Augmentation

So if we could use something like this to augment the data, even if it's not super accurate, it could be pretty useful. Yeah, I don't know, let's see how this will turn out. Yeah, yeah, that's. I agree. I think there may be some potential synthetic data applications as well. But, yeah. If anyone has any questions, by the way, feel free to reply again to the space with your questions. Yeah, I don't know if there's anything else to add about this paper, but yeah, there was a lot of discussion about this paper online, and yeah, I think it kind of also went past the AI circles in some sense.

Broader Implications of the Paper

So there was a lot of discussion of this paper beyond the AI circles as well, and I feel a lot of people were maybe missing the point of it. So that's what I wanted to clarify: what, at least in my opinion, the point of this paper is. So, yeah, I'm looking forward to seeing what will be done in this area of building flexible game engines with neural networks, especially since the model they used here is so basic. A Stable Diffusion model that's just conditioned on previous frames and actions is honestly the simplest approach you could take.

Future Enhancements and Innovations

So I think with some additional thought, even just adding something like a way of storing state in the model, simple changes like that could probably improve the performance significantly and could be very interesting to explore. So yeah, I'm very excited about this paper. Okay. And if there are no other questions, then I guess we will move on to the next paper, which, Aran, you're presenting.

Transition to the Next Presentation

Yeah, so I'm presenting Physics of Language Models: Part 2.2, How to Learn From Mistakes on Grade-School Math Problems. So this series, Physics of Language Models, is very good in my opinion. I've actually been reading all the papers in this series and, yeah, this one's just as good. So yeah, I highly recommend you read all these papers because, yeah, it's worth it. So basically, this particular Part 2.2 explores the possibility of enabling models to correct errors immediately after they are made.

Insights into Learning Mechanisms

So it does not use multi-round prompting. Okay, here's the motivation. So say large language models can self-correct via multi-round prompting; then why can't they correct mistakes immediately as well? This would prevent generating useless tokens and speed up inference. So yeah, they found four results. So here's the first one. Even when pre-trained with error-free data, large language models may actually know they have made mistakes.

Evaluating Accuracy Improvements

And actually this is detectable via probing internal states. Maybe you've seen some results like this in some studies, maybe from Anthropic. So allowing the model to retry upon regret gives a minor accuracy improvement, from 78% to 80%. So a 2% improvement. Retry upon regret basically regenerates from the previous chain-of-thought step after detecting a mistake. So once the mistake is detected, the model regenerates from there.
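The retry-upon-regret loop described above can be sketched roughly as follows. This is a minimal illustration, not the paper's implementation: `generate_with_retry`, `propose_step`, `looks_wrong`, and the toy stand-ins are all hypothetical names, and the real detector would be a probe on the model's internal states rather than a string check.

```python
from typing import Callable, List


def generate_with_retry(
    propose_step: Callable[[List[str], int], str],
    looks_wrong: Callable[[List[str], str], bool],
    num_steps: int,
    max_retries: int = 3,
) -> List[str]:
    """Build a solution step by step, resampling a step when the detector flags it."""
    steps: List[str] = []
    while len(steps) < num_steps:
        candidate = ""
        for attempt in range(max_retries + 1):
            candidate = propose_step(steps, attempt)
            # Accept the step if the detector is happy, or if we ran out of retries.
            if not looks_wrong(steps, candidate) or attempt == max_retries:
                break
        steps.append(candidate)
    return steps


# Toy stand-ins (assumptions): the "model" gets step 1 wrong on its first try only.
def toy_propose(prefix: List[str], attempt: int) -> str:
    index = len(prefix)
    if index == 1 and attempt == 0:
        return "step 1 (wrong)"
    return f"step {index}"


def toy_detector(prefix: List[str], candidate: str) -> bool:
    return candidate.endswith("(wrong)")


solution = generate_with_retry(toy_propose, toy_detector, num_steps=3)
print(solution)
```

The gain from a loop like this is bounded by how reliable the detector is, since every undetected mistake stays in the solution.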

Limitations of Retry Mechanism

So this retry upon regret approach is actually not good enough. You know, 78% to 80%, there's not much improvement here. Actually, even with a 99% accurate detector, you get only a 2% accuracy improvement. If you have, like, a 100% accurate detector, the improvement is bigger than that. But, you know, 100% accuracy is unrealistic. So this approach doesn't seem quite promising. So here's the second result: preparing retry pre-training data like so.

Training Data Configuration Insights

Okay, let's say the model's data looks like: "A implies B. Oh wait, I made a mistake. Actually, A implies C." So data like this in the pre-training dataset significantly boosts reasoning accuracy without encouraging mistakes. And this improves accuracy from 78% to 95%, which is a huge improvement. This approach has many desirable properties, like no change to the training or inference process. And the more errors included in this pre-training data, the better the accuracy.

Surprising Findings from the Model

Adding retries and errors does not encourage the model to generate long and unnecessary reasoning chains. So yeah, this is a very surprising result. You know, basically you just have to include a bunch of these, like some inaccurate, false result followed by, "Oh wait, I made a mistake. Here's the actual result." Does that make sense then? So you just have to create a bunch of data like this synthetically in the pre-training data.

Inference Process Improvements

And once your model is trained, you can just ask the model to solve math questions, and the model actually generates correct trajectories without even making a mistake at the beginning. So typically you would expect that models like this would generate a false statement first and then say, "Oh, I made a mistake," followed by the correct trajectory. But the model doesn't do that. It is so weird.

Further Discussions on Reasoning Processes

Interesting. Tanish, does that make sense? Yeah, so it's just kind of like, yeah, training on the sort of thought process. It's a little bit like chain of thought, right? Like training on those sorts of thought traces. But in this case, yeah, you want to have mistakes in there, to show the model how to learn from those mistakes.

Building Corrective Learning Mechanisms

Yeah, yeah, that makes sense. I think, yeah, just generally having more of these sorts of thought and reasoning traces in the pre-training is going to be very important, and it's just about showing the language model what it needs to learn, I guess. And yeah, it doesn't surprise me that you would need to show it the thought process of learning from a mistake.

Conclusions on Performance Enhancements

And once it does that, it can perform better. So I think that makes sense. Yeah. So the interesting thing is that as long as you show the learning-from-mistakes data in the pre-training, the model can immediately get correct results during inference without even having to make a mistake at the beginning. So, yeah, that is interesting.

Chain of Thought Traces Analysis

But I'm curious, like, have people seen something similar with chain of thought more generally as well, like training on chain-of-thought traces and then, during the actual inference, you don't need to do the chain of thought? I'm not sure if people have done that sort of analysis, if anyone's aware.

Context Distillation-Type Work

Oh, yeah, they did. So. Well, actually, it may not be exactly like that, but it was like a context distillation type of work. Yeah, could be a bit different here. Okay, anyway, let me move on to the next result.

Reasoning Accuracy Improvement

So this significant reasoning accuracy improvement cannot be obtained with beam search or LoRA fine-tuning from a model pre-trained with error-free data. So yeah, apparently you really have to do this during pre-training, which kind of makes sense because in the pre-training phase, typically, you know, you are learning new knowledge, and what is considered a mistake and what is not, that kind of knowledge is also knowledge too. So yeah, apparently this does not really happen during fine-tuning.

Substantial Weight Changes

I mean it can happen during fine-tuning, but it's not enough. So yeah, you need substantial weight changes, basically. For example, if you do pre-training with this approach, you get 95%. If you do LoRA fine-tuning with this kind of data, you get only 83%. And if you use beam search with a beam size of 32, then you only get 90, sorry, 79%, and the baseline is 78%. So yeah, pre-training makes a big improvement.

False Statement in Pre Training

Okay, here's the final result. So, you know this pattern: a false statement, then "Oh, I made a mistake, actually," followed by the true statement. So how do we make this false statement for pre-training? In practice it's quite non-trivial. You cannot just simply state some nonsense, because that's too obvious. So how do we do that in practice? They tried a few ideas.

Exploratory and Proof of Concept

So for example, they tried inserting some randomly chosen later sentence as the mistake. So, like, jumping to a later stage of the solution, which is not supposed to happen yet. And it actually achieves 91%, which is great. Anyway, it seems that this part is more exploratory and proof of concept. So I guess, yeah, they have to continue doing exploration in this domain. Yeah. Anyway, that's all.
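One way to picture the "later sentence as a fake mistake" idea is the sketch below. It is only an illustration under stated assumptions: the function name `add_fake_mistakes`, the `error_rate` parameter, and the use of the spoken phrase as a retry marker are all hypothetical and not taken from the paper, which may mark retries differently.

```python
import random
from typing import List

# Illustrative marker borrowed from the wording used in the talk (an assumption).
RETRY_MARKER = "Oh wait, I made a mistake."


def add_fake_mistakes(steps: List[str], error_rate: float, rng: random.Random) -> List[str]:
    """Before each correct step, sometimes insert a later step as a fake mistake, then the marker."""
    augmented: List[str] = []
    for i, step in enumerate(steps):
        later_steps = steps[i + 1:]
        if later_steps and rng.random() < error_rate:
            # A step from later in the solution is premature here, so it acts as the "mistake".
            augmented.append(rng.choice(later_steps))
            augmented.append(RETRY_MARKER)
        augmented.append(step)
    return augmented


solution_steps = [
    "Tom has 3 boxes with 4 apples each, so he has 12 apples.",
    "He gives away 5 apples, so 7 apples remain.",
    "He splits the 7 apples with a friend, so each gets 3.5 apples.",
]
for line in add_fake_mistakes(solution_steps, error_rate=0.5, rng=random.Random(0)):
    print(line)
```

Because the inserted sentence is a genuine step from the same solution, it is wrong only because of where it appears, which makes it less obviously nonsensical than a randomly corrupted statement.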

Importance of Paper

So yeah, this paper is just as interesting as any of the papers in the same series, and I liked it. What do you guys think? I think David had some questions here. Let's see. He said, is it kind of like expert iteration, allowing the model to perform a search algorithm within the context? They can learn how to recover from mistakes, I suppose.

Differentiating from Expert Iteration

In expert iteration, you are basically training your model on correct trajectories, and in this case you are training your model on a bunch of bad trajectories as well, like suboptimal trajectories. And then during the inference phase you are not even making many mistakes. So it's kind of different from that. Let me take a look at his comments. Yes. So actually the model doesn't even do a search algorithm.

Correct Predictions during Inference

Oh, it says allowing the model to perform a search algorithm within the context. So, yeah, it doesn't. The model doesn't even recover from mistakes during inference. It makes the correct prediction in one shot. Yeah, on the very first attempt, which is quite interesting. So basically, the pre-training dataset has to include some, like, mistakes and recoveries.

Questions and Thoughts

Yeah, there's a couple other questions too. Basically one of them was asking, like, is it because language models are better at detecting mistakes after they make them than at not making them in the first place? And could that be a good steering technique? And I'm not sure if that's necessarily true. I don't know if that's what's happening here, because, I mean, it's true that, like, it's easier to detect than.

Insights on False and True Statements

Than actually not do it. I think that's probably true, but I'm not sure if that's what's happening here specifically. Aran, what are your thoughts on that? Yeah, so I wish the paper had sort of dug into more ablations. Well, they already did quite a lot, but yeah, this part is a bit unexplained in my opinion.

Making Effective False Statements

But yeah, I think with this false statement to true statement, you really have to make your false statement very good. And yeah, I guess the important thing is that this happens during the pre-training, so you're letting the model make significant weight changes, which does not happen during fine-tuning. So I guess, yeah, the model

Exploring Next Papers

Is able to allocate a lot of resources to the mistake-detection part. So. Yeah, that makes sense. Okay, cool. I think. Should we move on to the next papers? Yeah, we don't have much time. We spent a lot of time on all of these. So. Yeah, yeah, the next papers, I think, are maybe less.

Discussion on New Paper

Not much time needed to cover them. So yeah, I'll go to the next paper, which is Sapiens, a foundation model for humans. Let me just pull up the paper. Where did it go? I posted it on. I think it was from. And was it from. Oh, here. I guess maybe I didn't post it.

Foundation Model for Human Vision

AK posted it. So, yeah, this paper is, yeah, Foundation for Human Vision Models. So that's what it is, a foundation for human vision models. And basically they train a bunch of masked autoencoder models on images of humans. And that becomes their sort of base for fine-tuning on a whole bunch of human-centric vision tasks.

Training Dataset and Model Structure

Things like pose estimation, body part segmentation, depth estimation, etc. And, yeah, so they trained a whole family of models. Their largest model is 2 billion parameters. They train on a total of 1.2 trillion tokens. I guess when they say tokens, it's because these are images.

Masked Autoencoder Approach

And so I guess it's the image patches; there's no actual language stuff happening here. So they're referring to the image patches. But yeah, it's a masked autoencoder. So, you know, the typical approach is that this is a ViT, and you take an image, you mask out most of the patches in the image, and you pass it to the ViT, and the ViT has to predict the masked-out patches.

Pre Training Techniques for Human-Centric Tasks

And so they will mask out like 90%, 95% of the patches in some cases. Like, you know, during training, that's how much will be masked out. And the model has to predict the image from just whatever 5 to 10 percent of the patches are remaining. And so that's a strong kind of self-supervised pre-training task.
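The masking step of a masked autoencoder can be sketched as below. This is a simplified illustration rather than the Sapiens code: `split_patches` is a hypothetical helper, and the image size (1024x1024) and patch size (16x16) are assumptions chosen only to make the numbers concrete. Only the visible indices would be fed to the encoder; the masked ones are the reconstruction targets.

```python
import random
from typing import List, Tuple


def split_patches(
    num_patches: int, mask_ratio: float, rng: random.Random
) -> Tuple[List[int], List[int]]:
    """Return (visible, masked) patch indices for one image."""
    # Keep at least one patch visible so the encoder always has some input.
    num_visible = max(1, round(num_patches * (1.0 - mask_ratio)))
    order = list(range(num_patches))
    rng.shuffle(order)  # random masking: every patch is equally likely to be hidden
    visible = sorted(order[:num_visible])
    masked = sorted(order[num_visible:])
    return visible, masked


# Assumed sizes: a 1024x1024 image cut into 16x16 patches gives a 64x64 grid.
num_patches = (1024 // 16) ** 2
visible, masked = split_patches(num_patches, mask_ratio=0.95, rng=random.Random(0))
print(len(visible), "visible patches,", len(masked), "masked patches")
```

With a mask ratio this high, the encoder processes only a small fraction of the patches per image, which is part of why this objective is relatively cheap to scale.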

High Quality Annotated Datasets

And they're able to use that to learn useful representations for these sorts of human-centric tasks. They have this large-scale pre-training, but then for the individual tasks they also have a much smaller dataset that's very high quality and heavily annotated. So for example, when they're looking at pose detection and the keypoints of the human, they have a more comprehensive collection of 308 keypoints, including points from the body, the hands, the feet, and the face.

Comprehensive Dataset for Body Points

And it's more comprehensive than what other datasets have, and it's very heavily annotated. The dataset that they use is a proprietary dataset of 1 billion images, basically filtered exclusively for human images. I assume they probably come from Instagram or something like this.

Release from Meta

This paper is from Meta, if I didn't make that clear already. So, yeah, it's probably coming from Instagram or something like that. But yeah, that's their pre-training dataset. And yeah, they train on that.

Training in Resources and Results

Like, they trained their biggest model, which is a 2 billion parameter model, on 1,024 GPUs, A100 GPUs, for 18 days. Basically, yeah, they just show that they have this model, and it outperforms all these other existing approaches for these different tasks.
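For a sense of scale, the figures quoted above can be turned into GPU-hours. This is a back-of-the-envelope calculation that assumes all 1,024 GPUs ran continuously for the full 18 days, which the talk does not state.

```python
num_gpus = 1024      # A100 GPUs, as quoted in the talk
days = 18            # training duration, as quoted in the talk
hours_per_day = 24

# Assumption: every GPU is busy for the whole period.
gpu_hours = num_gpus * days * hours_per_day
print(f"{gpu_hours:,} GPU-hours")
```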

General Domain Model Performance

Basically, the point is that this general-domain model, which is then fine-tuned on these specific tasks, is outperforming all these more specialized approaches. For things like 2D pose, they're talking about how the state of the art utilizes a complex student-teacher framework with feature distillation tailored for the task, whereas their approach adopts a general encoder-decoder architecture with large human-centric pre-training.

Performance of the Smaller Models