Space Summary

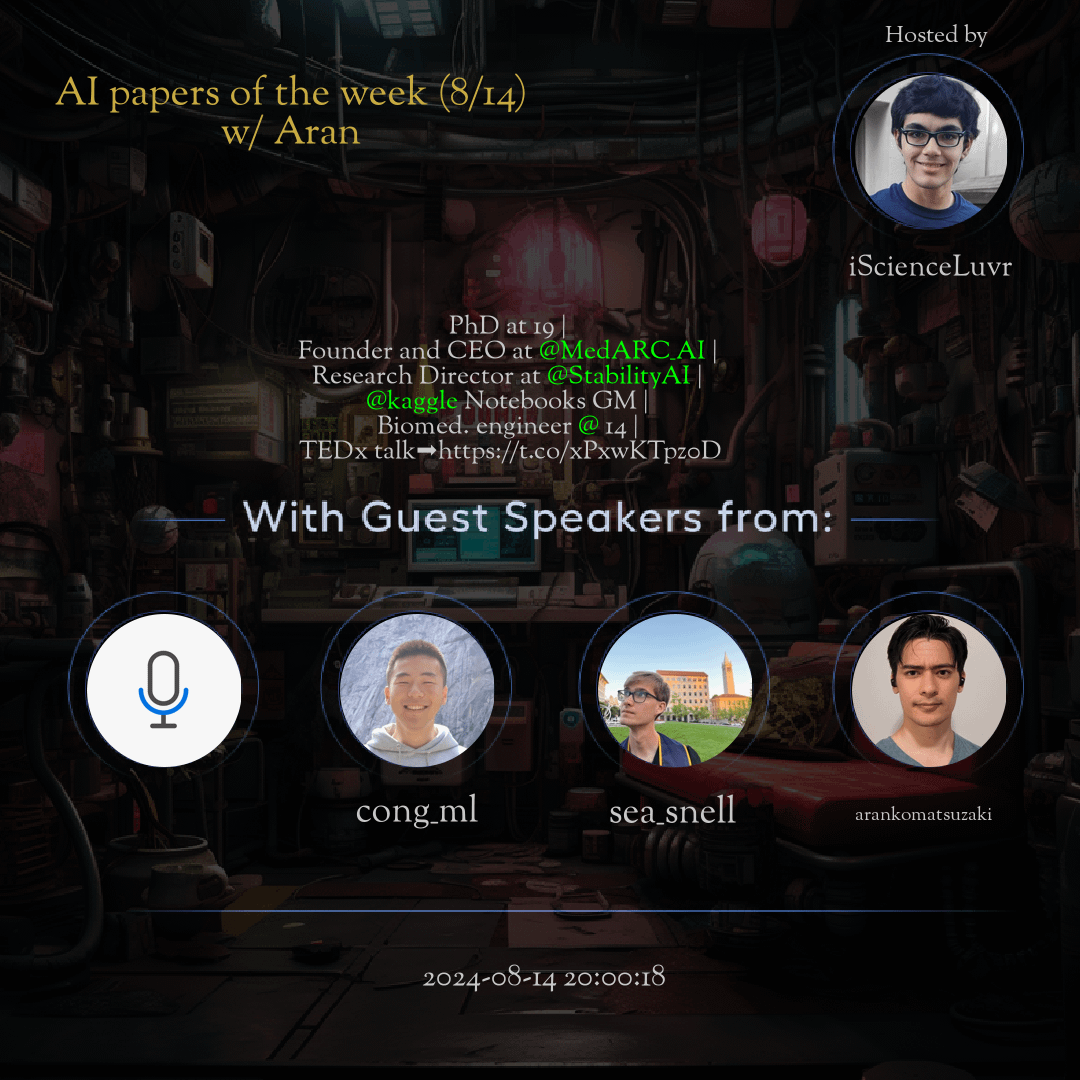

The Twitter Space AI papers of the week (8/14) w/ Aran hosted by iScienceLuvr. In the 'AI papers of the week (8/14)' Twitter space with Aran, the discussion revolved around the latest advancements and ethical considerations in the field of Artificial Intelligence. This space emphasized the importance of collaboration, continuous learning, and staying updated with AI research for professionals. From industry applications to future trends, the conversation delved into the transformative potential of AI across various sectors. The insights shared highlighted AI's impact on healthcare, finance, and the importance of mitigating biases in AI algorithms for equitable outcomes. Overall, the space provided valuable perspectives for AI enthusiasts and professionals seeking to navigate the ever-evolving landscape of Artificial Intelligence.

Questions

Q: Why is collaboration important in the AI field?

A: Collaboration fosters innovation, accelerates research, and encourages diverse perspectives.

Q: What are the implications of AI ethics in research?

A: Ethical considerations are crucial to ensure AI algorithms are fair, unbiased, and equitable.

Q: How does AI impact different industries?

A: AI is revolutionizing sectors like healthcare, finance, and technology with improved efficiency and decision-making.

Q: Why is continuous learning essential for AI professionals?

A: Continuous learning ensures professionals stay updated with the latest trends, tools, and advancements in AI.

Q: What role does knowledge-sharing play in the AI community?

A: Knowledge-sharing promotes collective growth, encourages best practices, and fosters a culture of collaboration.

Q: How can businesses leverage AI for growth and innovation?

A: Businesses can harness AI to optimize operations, enhance customer experiences, and drive strategic decision-making.

Q: What are the benefits of staying abreast of AI research papers?

A: Being informed about the latest research enables professionals to implement cutting-edge solutions and contribute to advancements in the field.

Highlights

Time: 12:14:35

AI Ethics and Bias Mitigation: Exploring the challenges of ethical AI development and strategies to mitigate biases.

Time: 12:25:41

Industry Applications of AI: Discussing real-world use cases of AI in healthcare, finance, and beyond.

Time: 12:38:19

Continual Learning in AI: Emphasizing the importance of lifelong learning and skill development in the AI field.

Time: 12:45:55

Community Collaboration in AI: Highlighting the benefits of a collaborative AI community for research and innovation.

Time: 12:52:02

Future Trends in AI: Predicting the upcoming trends and technologies shaping the future of AI.

Time: 13:05:29

AI Education and Training: Addressing the need for structured education and training programs in AI for aspiring professionals.

Key Takeaways

- AI research is rapidly evolving, with new breakthroughs and advancements every week.

- Ethical considerations and biases in AI algorithms continue to be crucial topics of discussion.

- Collaboration and knowledge-sharing within the AI community are vital for progress.

- The integration of AI in various industries is transforming how businesses operate.

- Understanding the latest trends and developments in AI is essential for professionals in the field.

- AI has the potential to revolutionize healthcare, finance, and other sectors.

- Staying updated with AI research papers is key to staying ahead in the field.

- AI education and continuous learning are necessary for harnessing the full potential of AI technologies.

Behind the Mic

Introduction and Channel Subscription

Subscribe to our channel. Hello, everyone. We'll get started shortly. I hope everyone's doing well. We are doing the space after, I think, maybe like four weeks now. Three or four weeks. I was busy traveling. You know, I was at ICML and other travels, so we were unable to host the paper space for these past few weeks. But, yeah, we're getting back to it, and it should be exciting. We will, of course, be covering some papers from the past week, but also a few papers from previous weeks as well. So, yeah. Aran, anything to add?

Meetup Announcement and Presentation Update

Oh, yeah. So I just moved to San Francisco, and I'm organizing a meetup at Douglas park with lucid length. So if you guys are interested, please join us. Yeah, also, actually, the AI Scientist presentation is going to be a bit late. It's going to be in, like, 20 minutes. So instead, the second paper is going to be the first paper for us. Yeah. So I guess, yeah, we'll get started. Is Charlie in the space? I don't see him here. I can ping him.

Paper Presentation and Technical Issues

So, yeah, the first paper. So I've pinned the... It's not coming up. Oh, yeah. There we go. So the list of papers is pinned to the top of the space. So we're actually going to start with the second paper instead of the first one because the authors aren't here yet. And so we're just waiting right now for Charlie to join the space, and he can then talk about his paper. Oh, yeah. Okay. Charlie is already in the room, so can you find him? Let's see. Maybe he can request. Yes, if he requests, that'd be easiest. Okay. He just did request.

Continuing with Technical Difficulties

That is weird. I don't see anything. Are you able to see him in the room? I actually did not see him in the room at all. Yeah. Okay. I don't know what's going on, but it's like it's not showing up that he's in the room, in the space, in the first place. I think that is really weird. Okay, let's see here. Does anyone else see him in the room, or is it just some sort of issue on our end? Maybe Charlie, if he's in the space, he should leave and rejoin.

Device Issue and Joining Problems

Oh, okay. So he said he tried to sort of react, but he couldn't. Is he joining from his laptop or his phone? Like, sometimes, if you're joining from one device, you should try a different device, because I remember, I think in the past, Aran, you tried joining before, and one time it wasn't working. You had to join from a different device. Yeah, actually, he just said it worked. So. So looks like there was some advanced.

Charlie Joins the Space

Oh, well, okay. I do see him now in the space, so I can invite him to speak. Okay. He should be a speaker now. Oh, hello. Nice. Okay, good. Were you joining from like a different device and it was not working from there or something like that? I was on my laptop, and then I just left and rejoined, and it worked the second time. Okay. Okay, cool.

Discussion on Paper and Key Points

Perfect. Yeah. Thanks for joining us, and, yeah, we'd love to hear about your paper. Yeah. So feel free to start. Yeah. So I'll begin. So, yeah, so this is a paper I just put out with some collaborators from Google called "Scaling LLM Test-Time Compute Optimally can be More Effective than Scaling Model Parameters." Basically, what we're interested in is, on sort of challenging input questions that we might ask a language model, can we enable the language model to use additional test-time computation to improve the accuracy of its responses?

Implications of Test Time Compute

So this sort of has two potential implications, or two big ones that I'm interested in. One is the potential for using smaller models plus test-time compute to perform better than or on par with much larger models that can only fit on, like, a data center GPU. And then the second interesting use case for something like this is for essentially generating synthetic data. So if you have a process where the model can sort of improve its own outputs at test time, then you can sort of use those outputs to then train the model and sort of improve itself in a little bit of an AlphaGo-style way.

Exploring Effective Scaling Mechanisms

So we explore how we can most effectively scale test-time compute. And we look at sort of two mechanisms by which this can be done. One is by searching against a verifier, which predicts a score at the end of each step. I guess I should specify that we're focused on math problems in this setting, because these are sort of very challenging reasoning problems for LMs, though we expect many of our techniques should apply in other similar reasoning settings. Yeah.

Mechanisms for Scaling Test Time Compute

So we do search against a verifier, a process-based verifier which looks at each individual step, and then the other mechanism by which we scale test-time compute is with revision. So having the model propose an answer, look at its previous answer, and then propose a new, improved answer, and doing this iteratively. And we want to know how to most effectively scale test-time compute, so for each of these mechanisms, there's sort of different decisions you can make.

Hyperparameters and Scaling Strategies

There's some different hyperparameters. So with search, we could do sort of best-of-N, which is a very naive approach where you sample N complete solutions and then pick the best under a verifier. Or we could do like a tree search against the intermediate steps. And we can also vary like the beam width and all these different parameters with respect to the search. Similarly, with the case of revisions, we could sample long chains of revisions, or we could sample like shorter chains of revisions, but have multiple of them in parallel.
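As an editorial illustration of the two search strategies just described, here is a minimal sketch. It is not the paper's implementation: the toy "solutions" are lists of integers, and `toy_score`, `toy_propose`, and `TARGET` are hypothetical stand-ins for a learned process-based verifier and a language model proposing next steps.

```python
from typing import Callable, List

Solution = List[int]  # toy stand-in: a solution is a list of integer "steps"


def best_of_n(sample: Callable[[], Solution],
              score: Callable[[Solution], float],
              n: int) -> Solution:
    """Sample n complete solutions and keep the one the verifier scores highest."""
    candidates = [sample() for _ in range(n)]
    return max(candidates, key=score)


def beam_search(propose_steps: Callable[[Solution], List[int]],
                score: Callable[[Solution], float],
                beam_width: int,
                max_steps: int) -> Solution:
    """Step-level search: after every step, keep only the `beam_width`
    partial solutions that the verifier scores highest."""
    beams: List[Solution] = [[]]
    for _ in range(max_steps):
        expanded = [prefix + [step]
                    for prefix in beams
                    for step in propose_steps(prefix)]
        if not expanded:
            break
        expanded.sort(key=score, reverse=True)
        beams = expanded[:beam_width]
    return beams[0]


# Hypothetical toy setup: the "correct" solution is TARGET, and the toy
# verifier counts how many steps so far match it.
TARGET = [1, 2, 3]


def toy_score(solution: Solution) -> float:
    return float(sum(a == b for a, b in zip(solution, TARGET)))


def toy_propose(prefix: Solution) -> List[int]:
    # A real model would sample candidate next steps conditioned on the prefix.
    return [0, 1, 2, 3]
```

The difference in where the verifier is consulted is the point: best-of-N scores only finished solutions, while the beam search prunes partial solutions at every step, which costs more verifier calls per sample.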

Optimal Strategies and Performance Insights

So we actually find that, depending on the question difficulty, different decisions with respect to scaling test-time compute perform better. In the case of search, on easier questions, it's often the case that best-of-N performs the best or on par with more sophisticated search techniques, whereas on harder questions, doing tree search is much more preferable to doing best-of-N. Similarly, in the case of revisions, we find that there's an ideal ratio of sequential revisions to parallel revision rollouts, depending on the question difficulty.
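The sequential-versus-parallel trade-off for revisions can be pictured as splitting a fixed generation budget into `num_chains` independent chains of `chain_len` answers each. The sketch below is a simplification under stated assumptions: answers are plain integers, `first_draft`, `revise`, and `score` are hypothetical callables, and `revise` sees only the latest answer rather than the whole revision history.

```python
from typing import Callable, List


def revise_with_budget(first_draft: Callable[[], int],
                       revise: Callable[[int], int],
                       score: Callable[[int], float],
                       num_chains: int,
                       chain_len: int) -> int:
    """Spend num_chains * chain_len generations: each chain starts from a fresh
    draft and revises it chain_len - 1 times; the verifier picks the final answer."""
    candidates: List[int] = []
    for _ in range(num_chains):
        answer = first_draft()
        candidates.append(answer)
        for _ in range(chain_len - 1):
            answer = revise(answer)
            candidates.append(answer)
    return max(candidates, key=score)
```

With the same total budget of eight generations, `num_chains=2, chain_len=4` is the more sequential setting and `num_chains=8, chain_len=1` is purely parallel sampling; which split works better is what the speaker says depends on question difficulty.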

Defining Compute Optimal Scaling

So on easier questions, it's slightly more preferable to use like longer revision chains, whereas on harder questions, there's sort of an ideal balance point you need to find. So using this insight, basically what we do is we define what we call compute-optimal scaling, where we select the best set of hyperparameters depending on the question difficulty. And once we do this, in the case of the PRM search, we're able to improve test-time compute scaling by, in some cases, like a factor of four.
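One way to read "compute-optimal scaling" as described here is as a lookup: for each difficulty bin, keep whichever strategy scored best on held-out questions at a fixed budget. The sketch below assumes that reading; the function name and the accuracy numbers are made up for illustration and are not results from the paper.

```python
from typing import Dict


def compute_optimal_policy(val_accuracy: Dict[int, Dict[str, float]]) -> Dict[int, str]:
    """For each difficulty bin, pick the strategy with the highest validation accuracy."""
    return {bin_id: max(by_strategy, key=by_strategy.get)
            for bin_id, by_strategy in val_accuracy.items()}


# Illustrative, invented numbers: difficulty bin -> strategy -> accuracy at one budget.
example_accuracy = {
    0: {"best_of_n": 0.92, "beam_search": 0.90},
    2: {"best_of_n": 0.55, "beam_search": 0.61},
    4: {"best_of_n": 0.08, "beam_search": 0.15},
}
policy = compute_optimal_policy(example_accuracy)
```

At test time, a question would first be assigned a difficulty bin, and the strategy stored in `policy` for that bin would then be used to spend the compute budget.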

Evaluating Performance Against Larger Models

So we can reach the same performance with 4x less compute than we would normally need with like a more naive scaling strategy. And we find a similar thing in the case of revisions, also about a 4x efficiency improvement by optimally allocating compute. And then at the end of the paper, we put this all together to see, okay, so now we figured out sort of the different dimensions by which we can scale test-time compute and how to most effectively allocate test-time compute.

Model Comparisons and Outcomes

Now that we've done this, how can we actually outperform models that are trained with more parameters? So we want to compare against like a model trained with M times more parameters. In our case, we look at a much larger model trained with 14 times more parameters. If we use instead a small model but apply additional test-time compute, can it be more effective? And we find that, again, sort of, the answer depends on question difficulty.

Defining Difficulty in Model Performance

So, and there's also some assumption you have to make about how much inference compute you're expecting to do in your setting. So in a production setting you might have a very large inference budget, whereas if you're not shipping your model to like a production endpoint, maybe it's much lower. So what we find is on easier questions, or in settings where you have sort of a lower inference load, test-time compute can outperform scaling parameters. However, on much harder questions,

Implications for Inference Strategy

or in settings where you have like a very large inference compute expectation, then scaling parameters is often more effective. Yeah. So I'd say those are the main conclusions. I'm happy to take any questions. Yeah, if people have any questions, they can reply to the space with a tweet and you can put up any questions. Did you have anything you wanted to add or any questions?

Question on Paper Comparisons

Oh, can I ask? Okay. Yeah. Thank you. So you know there's a similar paper released recently, slightly similar, I guess: "Large Language Monkeys: Scaling Inference Compute with Repeated Sampling." What are the differences between your paper and their paper? I did, yeah, I've read their paper. Yeah. So I think there's a number of differences.

Interactive Discussion on Research Papers

I think their paper is mostly concerned with this question of... So they mostly focus on the setting where you have like an oracle verifier, which is like if I can look at the ground truth correctness of my answer or run the code against some test cases and determine like somewhat objectively if it's correct or not. And so in this setting, if you scale just like best-of-N or whatever, you can get, like with 1000 samples or whatever, in math you can get to like 95%.

Key Differences in Verifier Approaches

However, if you use instead a learned verifier, which is in reality probably the more practical thing, because in a real production setting you're likely not going to have a ground truth verifier that you can check against. So if you instead use a learned verifier, you can only get half of that. You can get up to 45% instead of 90% by scaling test-time compute. And this is just because the model makes errors. So their paper was sort of focused on the oracle setting.
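The gap between the two settings comes from how a question is counted as solved. A minimal sketch, with hypothetical helper names and invented numbers: under an oracle, a question counts if any sample is correct; under a learned verifier, it counts only if the sample the verifier ranks first happens to be correct.

```python
from typing import List


def solved_with_oracle(correct: List[bool]) -> bool:
    """Oracle setting: a question counts as solved if any sample is correct."""
    return any(correct)


def solved_with_learned_verifier(correct: List[bool], scores: List[float]) -> bool:
    """Learned-verifier setting: only the top-scored sample is submitted."""
    best_index = max(range(len(scores)), key=scores.__getitem__)
    return correct[best_index]


# Invented example: one of three samples is correct, but the verifier
# mistakenly prefers an incorrect one.
sample_correct = [False, True, False]
verifier_scores = [0.9, 0.6, 0.1]
```

Here the oracle criterion reports the question as solved while the learned-verifier criterion does not, which is the kind of verifier error the speaker is pointing to.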

Focus on Learned Verifier Setting

And then they point out that there is this gap between a learned verifier and ground truth. Our paper is more focused on the learned verifier setting: how can we most effectively scale test-time compute? We don't concern ourselves too much with like the oracle setting. I would say that's sort of the difference between the two papers.

Skepticism and Confidence

That makes sense. Thanks for your answer. It's interesting that, you know, there are two papers coming from the same group, I mean, Google, with very similar ideas, obviously. I think. I personally think yours is more sort of sophisticated. There's actually a third one that came out. I didn't even notice it until after it came out like a few days before ours as well.

New Research Introductions

It's called "An Empirical Analysis of Compute-Optimal Inference for Problem-Solving with Language Models." So also tell people to check that one out. It's relatively similar to our paper. It does some different things, but, yeah. So, yeah, I'm very bullish on search and verifiers. There was actually another project by MultiOn about Agent Q where they showed the effectiveness of MCTS and verifiers on agents.

Prospects of Test Time Compute

So, yeah, I'm very excited. Yeah, I think there's a lot of potential here. I'm particularly excited about the angle of using test time compute to generate data that can then be used for training. At some point you run out of tokens on the Internet, so you need to be able to generate tokens. I think that's very exciting.

Defining Difficulty in Question Contexts

Yeah, absolutely. By the way, there's a question by Ben Schrod. So what is the criteria to consider for what exactly are the harder questions? Oh, how do we define difficulty? Yeah, that's a good question. So in the paper we do two different things, but we basically, we define difficulty relative to the base model itself.

Explaining Difficulty Metrics

So how much does the base model struggle with a given question? So the first version of difficulty we consider is oracle difficulty, which is simply, I sample 2000 outputs from the base model on each question and look at how many of them it gets correct. And then we create sort of five difficulty bins. So the questions that it gets right 90% of the time are the easy ones, and the ones that it only gets right 5% of the time are the hard ones.

Using Verifiers to Define Difficulty

This of course uses oracle information. So you have to know the ground truth to know this difficulty, which is why we call it the oracle bin, the oracle difficulty. And then there's also the predicted difficulty, where instead of using the ground truth correctness, we use a verifier's prediction to bin the difficulties. And I guess both of these are like pretty naive.
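The binning described above can be sketched as follows. This is an assumption-laden illustration, not the paper's code: it assumes equal-sized bins formed by ranking questions on a per-question score, and the function names are hypothetical. For oracle difficulty the score would be the fraction of sampled answers that are correct; for predicted difficulty it would be a verifier-based score instead.

```python
from typing import Dict, List


def pass_rate(is_correct: List[bool]) -> float:
    """Fraction of sampled answers to one question that are correct."""
    return sum(is_correct) / len(is_correct)


def difficulty_bins(scores: Dict[str, float], num_bins: int = 5) -> Dict[str, int]:
    """Rank questions from highest score (easiest) to lowest and split the
    ranking into equal-sized bins; bin 0 is easiest, num_bins - 1 is hardest."""
    ranked = sorted(scores, key=scores.get, reverse=True)
    return {question: min(rank * num_bins // len(ranked), num_bins - 1)
            for rank, question in enumerate(ranked)}
```

Passing verifier scores in place of pass rates gives the "predicted difficulty" variant without changing the binning logic.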

Future Considerations for Difficulty Metrics

You could do like more sophisticated things to predict the difficulty, like fine-tune a model to directly predict it given the question, which would be much cheaper. But yeah, that's how we define difficulty in the paper. Makes sense. Any other question? Tanishq, do you have any questions?

Closing Remarks and Technical Issues

No, I think Aran was also asking similar things to what I was thinking of. So, yeah, I think, I mean, yeah, this is very exciting work. And it's interesting to see how much of like, yeah, how much of the pre-training budget can be shifted to the inference budget. I think this is a very interesting avenue of, I guess, trying to gain some extra performance from these models.

Challenges in Application Beyond Math and Code

I guess one thing is like, you know, certainly this is interesting for math and I guess also code, where, I think you make this point of like learned verifier versus an oracle ground truth verifier, in math and code, obviously, it's probably much easier to have these sorts of ground truth oracles and ground truth verifiers. But then in other domains, I guess that's my biggest question: outside of math and code, how can this be applied?

Approach to Learning Verifiers

And I think the answer to that is probably that you need to have some sort of learned verifier, some sort of learned critique model or something like this, basically. But I'm curious if you could maybe speak to that a bit like how these sorts of approaches would be applied outside of the math and code domains. Yeah, for sure. So in our setting we are focusing on learned verifiers and it's a little bit easy to generate the verifier data because we have a training set where we know the ground truth and we can just generate answers and then fine tune a verifier on it.

Scaling and Data Requirement for Verifiers

I imagine though that you can fine-tune a verifier for many domains. You would just need, I mean, you might need to hire a bunch of humans to help you collect some labels, but you could imagine, similar to how you can instruction-tune a model to do many different tasks, you could do something similar to get a verifier that can verify many different tasks. If you collect a decent amount of data, it doesn't require a ton of data, it's on the same scale as normal fine-tuning datasets.

Contributions to Agent Tasks

Let's see, what else? Oh, I guess the other thing that's interesting to note is, I think, early on there were a lot of papers sort of prompting models to do like critique and revision. And this works well on like fairly easy tasks like summarization, harmlessness, like things that don't require like really complex reasoning. However, once you apply these ideas, where you're just like few-shot prompting a model to do revisions or critiques, to harder tasks like math or code, it doesn't work very well, or like it fails to beat simple baselines like majority voting.

The Limitations of Current Models

So in these settings we actually have to fine-tune verifiers. However, it is possible that, as models get better and the pre-training data is further refined towards capabilities that we care about, many models will be effective critiquers or verifiers on much harder tasks, kind of out of the box.

Technical Difficulties and Workarounds

But with today's models, we have to fine-tune them. I forgot to mention, but this Agent Q model uses self-critique. So. Yeah, yeah. I think it might be easier to critique on some like agent tasks because, like, if your task is, say, buy me this item, it's pretty easy to check if you did it correctly.

Verification in Simpler Tasks

You can just look at like the Amazon receipt email. It doesn't require complex reasoning to do the verification in that case. Yeah, it's kind of pretty crazy that, you know, we're almost, you know, solving IMO questions before solving web navigation. It is kind of interesting.

The Challenges of Data Collection

Yeah, I think the real answer is that it's like harder to get the data for web navigation. Yeah.

Final Remarks and Thank You

Okay, cool. Yes. I don't have any other questions, and I don't think there are any other tweets in the space about it. But yeah, I think this is really interesting. Thank you, Charlie, for presenting this paper. And I think the surrounding discussion about it was very interesting too.

Appreciation for Participation

So, yeah, thanks. Thanks for joining us, Charlie. Yeah, thanks for having me. Okay, so cool. Actually, Cong, the first author of the AI Scientist paper, is now in the space.

Continuing Conversations in the Space

Can you find him? Okay, let's see here. I don't see him, I guess, I don't know. We're having the same problem as before. Can he request to speak? Let's see here. So, so, yeah, we're trying to have, so we had the, we covered the paper by Charlie, which is actually the second paper on our list.

Continuing Discussion on Paper Presentations

And now the first paper on our list, the author of that paper is going to talk about the, about his work, but now I need to have him join the space and request to speak. He already requested. Yeah. Again, I think it's a similar issue of maybe he can try to leave and rejoin, but I think it's having, we're having a similar issue that he's not showing up.

Technical Challenges Persist

Yeah, it's crazy that, you know, we cannot even let people speak even though we can solve IMO questions now. Yeah, it's, I guess, yeah. Look, xAI is doing great things, but they also need to work on, you know, the X team needs to work on the Spaces more, I think.

Attempting to Resolve Technical Issues

Okay. He rejoined and requested. Yeah, I don't see him. Do you, do you see him in, at all, in the space? No. Yeah, or like, is he joining from his laptop or his phone? Like if he tries joining from a different device.

Exploring Device Options

I don't know. Okay, let me ask him. Yeah, sorry everyone, about these technical difficulties. Always the problem with these Spaces. Okay, so he's using his laptop.

Final Attempts at Contact

Okay. Maybe he can try to use his phone if possible. Yeah, so he showed me his screenshot and he's definitely in the space. Yeah, I think it's just a problem with... I think desktop, the web version, also has a lot of issues, so.

Technical Difficulties and Collaboration Requests

Yes. I have sent you a request to be co-host. Okay. He's on his phone and requested. Yeah. I don't know, it doesn't seem to be working. Do you want to move on and we can try again later or something? I don't know. Yeah, that works too. Okay. Okay. I can probably give a talk on my next paper then. Meanwhile you guys can sort of resolve that problem. Yeah. Wait, wait. Yeah, I'll DM him and see. Yeah. Okay, so yeah, let me talk about Gemma 2 and PaliGemma.

Discussion on Gemma 2 and PaliGemma

These are each kind of short papers in terms of contents, because they are extensions of existing models. Well, I'm sure you guys already know well about these papers, which were released a month ago. So let me start with Gemma 2. So obviously this is the successor to Gemma 1.5. So yeah, it's more or less a minor update, but the performance improvement is quite significant, and the models achieved state-of-the-art performance at their respective scales. So they released models from 2 billion to 27 billion parameters, and the 27 billion model was trained on 13 trillion tokens. So for training they used knowledge distillation. Distillation led to significant improvement in training compute efficiency over the baseline. They observed significant improvements in terms of score on their benchmark, which was like from 60 to 67 points when they distilled their 2B model from 7B.
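For readers unfamiliar with knowledge distillation, the per-token objective can be sketched as a cross-entropy between the teacher's and the student's next-token distributions, in place of a one-hot target. The snippet below is a generic illustration in plain Python, not code from the Gemma 2 report; the function names are hypothetical.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax over one token's vocabulary logits."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: List[float], teacher_logits: List[float]) -> float:
    """Cross-entropy of the student's distribution against the teacher's
    soft targets for a single token position."""
    teacher_probs = softmax(teacher_logits)
    student_probs = softmax(student_logits)
    return -sum(t * math.log(s) for t, s in zip(teacher_probs, student_probs))
```

The loss is smallest when the student reproduces the teacher's full distribution, so every token gives the student a richer signal than a single correct-label target would.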

Performance Metrics and Training Insights

So this advantage of distillation actually persists across different model sizes. So this is a very promising method, and their post-training procedure is very standard. They use SFT and RLHF. Architecture-wise, they just use GQA, and they also used local attention interleaved with full attention for better efficiency. And they use logit soft-capping, which makes the logits of each attention layer stay within a certain range for stability. My question is, why is the ratio of feedforward dimension to d_model so large? So it's typically set to around four. Right. But Gemma 2 27B has 16 times. So they have a 16 times larger feedforward dimension than d_model. If anyone knows why, let me know. That sounds very big. And Google models tend to use this very large feedforward dimension for some reason.
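Logit soft-capping is commonly written as `cap * tanh(logit / cap)`: values well below the cap pass through almost unchanged, while large values are squashed smoothly toward the cap. The sketch below shows that formula in plain Python; the function name is hypothetical and the cap value of 50.0 is used for illustration only.

```python
import math


def soft_cap(logit: float, cap: float) -> float:
    """Smoothly bound a logit to the open interval (-cap, cap)."""
    return cap * math.tanh(logit / cap)


# Illustrative cap value; small logits are nearly unchanged, huge ones saturate.
ATTENTION_CAP = 50.0
capped = [soft_cap(x, ATTENTION_CAP) for x in (-1000.0, -1.0, 0.0, 1.0, 1000.0)]
```

Unlike hard clipping, the tanh form stays differentiable everywhere, which is one reason it is attractive for training stability.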

Further Considerations on Model Architecture

Obviously it's very efficient in terms of GPUs, but I feel like it's kind of wasteful. If you're using MoE, MoE sort of behaves as a large feedforward dimension, but MoE is doing conditional computation, so it's efficient. But in this case their model is dense, so it doesn't make sense to me. Yeah, so that's for Gemma 2. So, yeah, actually there's not much to talk about Gemma 2 anyway. For PaliGemma, basically it's an open vision-language model that is based on the SigLIP-So400m vision encoder and the Gemma-2B language model. So it achieves strong performance on a wide variety of open-world tasks. Architecture-wise, it consists of a SigLIP image encoder with a linear projection layer after that, and its output features, along with the corresponding text, are fed to the Gemma 2.

Features of PaliGemma

Sorry, sorry. Gemma decoder for textual output. Each component is initialized from publicly available checkpoints, and it uses prefix language model masking on the decoder side, meaning no masking on attention across image tokens and text input tokens. So I think, yeah, this sounds kind of interesting because they are using a decoder model, but they are doing prefix-LM masking. So in terms of training phases, stage zero is just unimodal pre-training. So they trained Gemma and SigLIP separately. Well, they obviously are using publicly available checkpoints, so they didn't really do it in this paper. And stage one is multimodal pre-training. So it's a long pre-training on a carefully chosen mixture of multimodal tasks, and notably nothing is frozen in this phase.
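The prefix-LM masking mentioned above can be illustrated with a small attention mask. In this sketch (function name hypothetical), the first `prefix_len` positions stand for the image tokens plus the text input and attend to each other in both directions, while the remaining output positions attend to the whole prefix and causally to earlier outputs.

```python
from typing import List


def prefix_lm_mask(prefix_len: int, total_len: int) -> List[List[bool]]:
    """mask[i][j] is True when position i may attend to position j.

    Positions 0..prefix_len-1 form the prefix (full bidirectional attention);
    later positions are generated output (causal attention)."""
    return [[j < prefix_len or j <= i for j in range(total_len)]
            for i in range(total_len)]


# Example: 3 prefix tokens followed by 2 output tokens.
mask = prefix_lm_mask(prefix_len=3, total_len=5)
```

Setting `prefix_len=0` recovers an ordinary causal decoder mask, which makes the contrast with the usual decoder-only setup easy to see.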

Training Phases Explained

And in stage two, they increased resolution, so continued pre-training at higher resolution, and at the end they did transfer, so turned the base model into a task-specific specialist. So they performed a bunch of ablations, which were very insightful. I really love ablations. So they found that freezing is typically worse than unfreezing, especially when the language model is frozen. And unfreezing the image encoder leads to significant improvements on tasks requiring spatial understanding. So this was kind of interesting. So the thing is, there have been many ablation results from many vision-language model papers over the last four years about freezing versus unfreezing, with different, contradictory results, even within the same institution, like Google.

Consensus Questions in Research

So I'd like to know what led to this lack of consensus, and if we should believe that their claim is sort of like the final consensus. Yeah. What do you guys think? Yeah. Does anyone have any questions about Gemma and PaliGemma? Maybe people want to reply with a comment or anything. Feel free to do so. One thing interesting, kind of like a separate paper or something about Gemma: they also did the sparse autoencoder training of Gemma, which is quite nice, that they trained those SAE models and released them openly.

Thoughts on SAE Models

So that's also worth checking out if you're interested in interpretability research. And, yeah, they did that for, I think, the 2 billion and 9 billion models, where they did it for all the layers. And then I think for the 27 billion model, they did it for a select number of layers. And so, yeah, someone was saying, oh, someone should build a Golden Gate Gemma. So, you know, maybe that could be a fun project for someone to do with the Gemma models and the Gemma SAEs, which is called Gemma Scope. Yeah, that was the only other thing I kind of wanted to add here.

Community Engagement and Future Ideas

Yeah. So I'm reading a comment, and David says a larger hidden dimension could just be about more expressiveness and storage capacity. Yeah, I think so. But, you know, I wonder if that's better than, you know, a larger d_model. That, from my experience, typically leads to better performance for a given amount of compute. Anyway, so Cong has now joined as a speaker. Thanks, Tanishq. Yeah, just so everyone knows, our list has been a little bit out of order because of all the difficulties. So we went with the scaling LLM test-time compute paper. We did that first with Charlie, then we covered Gemma 2 and PaliGemma, which Aran just covered.

Transition to New Topics

And now we're going to cover the first paper, the AI Scientist, which the first author, Cong, will present right now. So just for everyone to know what the order is, because it was a bit out of order. So sorry about that. But, yeah, Cong, I guess you can take it away. Yeah. Awesome. Thank you. Yes. Yep. All right, nice. Nothing like a few technical difficulties to start the presentation. Thank you for laugh reacting. Yeah, so thank you so much for having me.

Introduction to AI Scientist Discussion

And I'm super excited to talk about the AI Scientist, and we're incredibly excited by the reception from the community so far. And yeah, I wanted to shout out my co-authors as well, Chris and Rob, as well as Jakob, Jeff and David, who've been awesome collaborators on this work. And let me just dive right into the details, I guess. So, the focus of our work is envisioning whether or not current frontier LLMs can entirely take over the scientific process. So this stretches from idea generation, to implementing experiments, iterating on them, visualizing intermediate results, writing the paper, all the way to reviewing, all powered by recent advances in frontier LLMs.

The AI Scientist Operating Framework

And moreover, this kind of process that we create in the AI Scientist is iterative and open-ended. So already what we have is the language model can start out with a few ideas, try them out, see what works, and based on that, can refine and select the next best proposition from the list. And we're already blown away by what the AI Scientist can already do. Like, we've been impressed by the creativity of its ideas. The fact that through our process, the model is able to sort of communicate its insights in a sort of human-readable format has been really useful for us to sort of debug and get inspired, I guess, by what's been output.

Open Source Code and Future Potential

And all of our code, I guess, is open source. And we truly believe that this is sort of, this is like the Will Smith eating spaghetti moment of AI science. This is the worst it will ever be. And right now, the paper qualities are sort of basic kind of ideas building on established frameworks, maybe what are two steps above the baseline, but we really envision sort of like whole AI communities forming out of this in the future. So more advanced paper generation, more deep ideas with, you know, with the vast amount of progress that's coming in, foundation models, we're, I guess, like slightly taken aback by what we can currently achieve.

Expectations for Future AI Models

But we think that, you know, with the next generation of models, we could even just be blown away by the quality of ideas and experiments that come out of this system. And I guess, yeah, one other thing that I kind of want to discuss is that we were surprised at what current systems could already achieve. And it was super straightforward for us to sort of bootstrap from earlier prototypes of the AI Scientist. So early on, I think Chris shared quite a few photos of our earlier mock-up of the AI Scientist, where it's literally just one or two experiments, write up the idea in Markdown, display it to us, and it's like four pages long.

Building on Past Prototypes

But we just kept adding to it. We just kept asking the model to do more stuff, so like go deeper on the ideas, revisit prior experiments, keep a logbook of progress, even up to, you know, writing a full paper in LaTeX format, which we thought was like impossible for current models to do, together with, you know, pulling citations from the Internet. And I guess, you know, for us we see, you know, lots of future improvements. So, you know, the standard prompt engineering, perhaps like fine-tuning models to be able to do this. But I guess we are also interested in kind of starting a conversation around what the role of AI should be in future science. Can we have these models at a point where we can trust the science, where we can build on it?

Trust in AI Generated Research

Can these papers even be submitted to ICLR as just a regular paper? Can we even produce stuff that's good enough to be presented as a full paper at a certain conference? And at that point, you know, we don't even know who's going to stand in front of the poster and present it. So yeah, lots of future work, and we've been super excited by just the reception so far. Lots of people commenting on the GitHub, and yeah, we're really excited to see what the community thinks about this. And one thing I do really want to stress is we really do believe that this is the worst it will ever be, and we cannot wait for what it's going to be like in the future.

Open Discussion on Community Engagement

What is v2, what is v3 going to be? This is really the hand-with-seven-fingers version of AI papers. So yeah, happy to take any questions about it. Awesome. So are you sort of envisioning this AI Scientist project as sort of like a community-driven project, like OpenDevin and SWE-agent? We definitely welcome it. Like all of our code is online right now, and just today we accepted maybe like three pull requests, I think. I think people are really excited to build on it. So integrating more models into it.

Potential for Enhanced Collaboration

I think people have been suggesting different ways of getting the idea generation process going. So right now it's really just bootstrapped off of the LLM's own thoughts. But I think we have a pull request right now which is even, can we take in existing literature, like a literature review, and based off of that come up with, you know, pressing questions to answer about a code base? So yeah, I would definitely love to see community involvement. Awesome. So another thing is, you know, typically for difficult questions, like IMO questions, let's say, they do use MCTS and that kind of stuff.

Incorporating Advanced Problem Solving

Have you tried something like that? So I guess, yeah, there's many places we could see that in the AI Scientist pipeline. So maybe if I just hone in on, for example, coding: right now it's basically just a sort of chain-of-thought self-reflection loop. And it gets, say, n runs, and in each run you get like N shots at perfecting the code. And this maybe works for the kind of hundred-line modification to an existing code base. But if it had to do more complex ideas, I think definitely more advanced search could be a great thing.
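The self-reflection loop described here can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the AI Scientist's actual code: `refine_code`, `toy_generate`, and `toy_run` are hypothetical names, and the two toy functions stand in for an LLM call and an experiment run.

```python
def refine_code(generate, run, max_attempts):
    """Ask for code, run it, and feed any error back until it works."""
    feedback = None
    for attempt in range(1, max_attempts + 1):
        code = generate(feedback)      # hypothetical LLM call
        ok, feedback = run(code)       # hypothetical experiment runner
        if ok:
            return code, attempt
    return None, max_attempts


def toy_generate(feedback):
    # Stand-in for the model: the first draft is buggy, the retry is fixed.
    return "result = 1 / 0" if feedback is None else "result = 1 / 1"


def toy_run(code):
    # Stand-in for running an experiment: execute and report any error text.
    scope = {}
    try:
        exec(code, scope)
    except Exception as err:
        return False, f"{type(err).__name__}: {err}"
    return True, None


code, attempts = refine_code(toy_generate, toy_run, max_attempts=4)
```

A more advanced search, such as the MCTS mentioned in the question, would keep several candidate drafts alive instead of a single linear chain of retries.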

Looking Ahead in AI Development

Even with idea generation and paper write-up. For the actual technology that we're using with LLMs, we use Aider, which has a lot of bells and whistles, but for a large part of that there is a lot of reflection and good Python linting, but it could definitely be so much more advanced, for sure. Thanks for your answer. Tanish, do you have any questions? Yeah, there's actually one question in the comments, which is: is the AI Scientist multimodal? Like can it draw figures, et cetera?

Inquiries on Multimodal Capabilities

So yeah, curious. I assume, of course, you have all these figures, sort of like data and stuff, but maybe there's also potential for the model to create other sorts of figures, like explanatory figures and things like this. So yeah, maybe you could touch a little bit on how it makes all these figures and, you know, its multimodal capabilities. Yeah, for sure. So right now we don't use any multimodal capabilities, and all figures are generated by code. So we get visualizations just out of Matplotlib and Python libraries, and these all get inserted into the paper via LaTeX.
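As a rough illustration of the "inserted into the paper via LaTeX" step, the sketch below builds a figure environment for an image file that plotting code has already saved. The helper name `latex_figure`, the file name, and the choice to cap the width at `\linewidth` are assumptions for illustration, not details from the paper.

```python
def latex_figure(image_path, caption, label, width_fraction=1.0):
    """Return a LaTeX figure environment that includes a saved plot."""
    if not 0 < width_fraction <= 1:
        raise ValueError("width_fraction must be in (0, 1]")
    return "\n".join([
        r"\begin{figure}[t]",
        r"  \centering",
        # Scaling relative to \linewidth keeps the image inside the column.
        rf"  \includegraphics[width={width_fraction}\linewidth]{{{image_path}}}",
        rf"  \caption{{{caption}}}",
        rf"  \label{{{label}}}",
        r"\end{figure}",
    ])


snippet = latex_figure("train_loss.png", "Training loss per run.", "fig:train_loss")
print(snippet)
```

Because nothing here looks at the rendered page, a figure emitted this way can still be badly sized or placed, which is the kind of problem a vision-capable critic would be needed to catch.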

Methods of Data Visualization

But absolutely, this process has a lot of flaws. For example, if you were just blindly making a figure for a paper and just put it in with some LaTeX code, almost certainly it's not going to fit the screen. It's not even going to be vspaced properly. And I think future versions of the AI Scientist are definitely going to need multimodal capabilities, either for critiquing the paper or for generating new figures, for example. You know, one of the main roadblocks, maybe even for submitting AI-generated papers to ICLR, is that a lot of papers rely on, you know, strong visualizations to convey their point.

Future Enhancements in Research Processes

You could obviously do some stuff in like LaTeX TikZ, but that kind of stuff also needs a little bit of iteration. But I think this is quite doable. All we've got to do is upload the pictures of the PDF into Sonnet, Gemini, or OpenAI's vision capabilities, and we can easily get a critique from that. The other thing it would be good for as well is the reviewer. So our reviewer right now is remarkably good for only being able to see the text. You might imagine that's just because, you know, all figures are probably described in the text and all the main conclusions are still there.

Improvements for Reviewer Performance

It can see tables, but it can't see figures. Even for the reviewer, I think we envision that if it could also get all these pictures in context, we might even be able to improve the performance, like to vastly superhuman, beyond already sort of matching human performance right now. Awesome. So another question from bench roots: has there been an effort at replicating established papers that are on GitHub or Hugging Face? So what was the question?

Replicating Established Research

Oh, I see. The question: has there been an effort to replicate established papers on GitHub or Hugging Face? Yeah, so that's a really good question, and I think that would be an excellent application of this kind of technology. So, as it currently stands, I guess the idea generation in our paper is very much, you know, open-ended, driven by the language model's own imagination. But it could definitely just take previous ideas, like other people's ideas, and just try and implement them.

Exploring Existing Ideas

So one roadblock right now to this is that the AI Scientist is really trying to make modifications on an established code base. So, for example, the AI Scientist right now just tries to make diffs based on existing diffusion code bases and existing transformer code bases. But if you wanted to replicate, then you might need to pull code from the Internet, you might need to write code from scratch and test things. We envision this is definitely all very possible. It will need one more step from the current version.

Framework Limitations and Future Steps

And a lot of the design choices around the AI Scientist right now are based on speed and efficiency. So all of our experiments maybe only take a few minutes. Training nanoGPT only takes three or four minutes on good GPUs. And if we wanted to replicate any kind of paper, we might need to expend quite a lot more compute and parallelize quite a lot more heavily, you know. So for any, like, heavyweight experiment, we're probably going to run the AI Scientist for days on that for it to get sensible results. But these are not fundamental limitations, more just room for further improvement.

Evolving AI Research Landscapes

Yeah, I actually have two more questions. So, yeah, this paper is so interesting that I can come up with many questions. So thank you. Yeah. And one question is, well, more like a suggestion or like an idea. So I think, you know, we could be more ambitious, like this paper, and, you know, try an AI, like say, entrepreneur or something, and obviously that sounds very ambitious. So like doing everything, like writing a page and everything. Obviously each component was already sort of done by GPT-4o, but as a whole.

Ambitious Ideas for Future Projects

So yeah, if somebody releases something like that, I'd say, you know, it's going to be so hard. Yeah, I think we've just been super impressed by how well the AI Scientist can just generate ideas for anything. So this also connects to a lot of work in, I guess, the open-endedness and evolution-through-large-language-models communities. And essentially by just iterating on ideas you can generate new ideas for anything. So you know, new RL agents, new RL environments, in our case new paper ideas, and for the entrepreneur case, new business proposals, I'm sure, that you could execute out of this.

Future Vision for AI Creation

What we'd really love to see like in the far future is you know like AI for like any kind of scientific discovery. Like could we just hook it up, could we propose ideas to like some kind of automated system and just like see it evolve. Right now I think a lot of the, you know, these models are very specialized to code and are really good at that. So right now we've been looking at a lot of like code applications for the AI scientist and also, yeah, for other like works in evolution through large language models.

Discussion on Future Implications of AI

Like, you know, we were discussing IMO before. I think at that point we have to think about, I guess, the future implications for everyone in the world, you know, mathematicians, AI researchers, anyone with a job. I think this will really tie in very closely with the rest of the conversation about future generations of models, and yeah, it's hard to predict for sure. Yeah, I agree. My naive guess is just, you know, around 2030 or something, which is kind of why I try to diversify my career, you know? Yeah, I think so. But I'm also cautiously optimistic about what happens one level up from this. Right now, it's really still going to be 90% human. But what happens when AI comes up with not necessarily the paradigm-shifting ideas, but what if it can automate PhD-level research? Although that's a very broad category, that could also be world-changing as well.

The Future of AI in Academia

What if they could operate at the level of, you know, the average PhD researcher? Then what could you do? You know, at that point, maybe everyone can be a professor, and everyone can have their own communities of AI researchers working on their ideas, which I think is a really exciting prospect. Yeah. And I kind of see that as more what we need to contend with in the next few years, rather than us all being put out of jobs. So I'm excited for that. Do you have any personal plan to sort of prepare for when, you know, researchers are going to be replaced or something? I hope that won't be anytime soon. I don't know. Maybe I'll make music. It's very good to know. Tanish, do you have any comments? No, nothing else. Yeah, but this was a very interesting paper, and thank you, Kong, for joining us.

Covering Upcoming Papers

Thank you. Yeah, thank you so much for having me. Cool. Aran, what paper do you want to cover next? Do you want to cover one, or do you want me to cover next? Yeah, you go ahead. Okay. In that case, I will cover two papers, which are SAM 2 and Segment Anything in Medical Images and Videos. So I will cover those two papers. Give me one second to, I guess, pin it to the space first, and then I will talk about it. By the way, we have already spent an hour, so we can probably make each paper a bit shorter. Yes. Yes. So of course SAM 2 is from Meta. And let me just pull up my notes. Okay. So for SAM 2, I'm sure many of you have heard of SAM, the original SAM model, which is the Segment Anything Model from Meta from April of last year, which is this pretty general sort of segmentation foundation model that they trained.

Details on Sam Two Model

And yeah, it was basically you can like, you know, label something with a point or a mask or a prompt or whatever and a bounding box, you know, and you could just like easily like segment with this model and it was, you know, achieving state of the art results and yeah, so, you know, it was very interesting work that they did and this paper kind of, you know, continues off of that and it's basically an improvement. But also more importantly, it allows you to segment videos as well. So it's not just like an improvement in the performance on the image capabilities, but also adds this whole new capability of video segmentation. So yeah, I'll talk a little bit about the architecture and also how the model was trained. So basically, you know, it basically for videos, for example, of course, you can put like, you know, you can put prompts in terms of like for example like points or bounding boxes or even like masks, you know, on individual frames of the video.

How the Model Segments Video

And then you can, for example, try to have it segment over the course of the video. So, for example, you would label maybe the first frame and then try to get the segmentation mask for the remainder of the video, or, you know, label it at different points in the video. But then for image input, it basically behaves similarly to SAM 1 in terms of how you input your prompt or your mask or whatever, and how you get the output segmentation mask. And so then for videos, you have an image encoder, and that image encoder is run on each frame in the video. The image encoder is, I guess it's called Hiera.

Functioning of the Model's Encoder

I guess this is like a hierarchical transformer architecture also developed by Meta. It was developed by Meta last year, and this Hiera encoder is pre-trained with a masked autoencoder task. So it's an MAE pre-trained Hiera. And so that's the image encoder that they use. So that's different from SAM 1. And so this Hiera encoder is hierarchical and provides sort of multi-scale features for the model to use. Okay, so that's the image encoder part. Then the other aspect is that those image encoder features are passed into the sort of attention or transformer part of the model. But the whole idea here is that because you have video, you want to basically condition on past frame features.

The Memory Attention Mechanism

So you have a bunch of transformer blocks that are stacked. The first one takes in, you know, the image encoding of the current frame coming from your image encoder, your Hiera model. And then each transformer block performs self-attention followed by cross-attention. And the cross-attention is attending to what are called memories of previous frames. So we'll talk about how these memories are generated. Basically, these memories are stored in a memory bank, and I'll talk a little bit more about that later. But the idea is that in the transformer part of the model you have this cross-attention that allows the model to look at previous frames, and that influences the generated segmentation. So the model has the image encoder features going into this memory attention.
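To make the "self-attention followed by cross-attention to memories" idea concrete, here is a heavily simplified NumPy sketch. It is an illustration only: it leaves out the learned projections, normalization, MLP layers, and positional encodings that a real transformer block would have, and the function names and tensor sizes are made up.

```python
import numpy as np


def attention(queries, keys, values):
    """Scaled dot-product attention for arrays of shape (tokens, dim)."""
    scores = queries @ keys.T / np.sqrt(queries.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ values


def memory_attention_block(frame_tokens, memory_tokens):
    """Self-attention over the current frame, then cross-attention to memories."""
    x = frame_tokens + attention(frame_tokens, frame_tokens, frame_tokens)
    if len(memory_tokens) > 0:
        # Queries come from the current frame; keys and values come from
        # the stored memories of earlier frames.
        x = x + attention(x, memory_tokens, memory_tokens)
    return x


rng = np.random.default_rng(0)
frame_tokens = rng.normal(size=(16, 8))    # 16 spatial tokens, 8 channels
memory_tokens = rng.normal(size=(48, 8))   # tokens from 3 earlier frames
out = memory_attention_block(frame_tokens, memory_tokens)
```

With an empty memory, as on the first frame of a video or on a single image, the block in this sketch reduces to plain self-attention.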

Utilizing Mask Decoder in Segmentation

The output of this memory attention then gets passed into the mask decoder, which takes an input from the memory attention and also takes an input from the prompt encoder. So this is kind of similar to the original SAM paper as well, where they also have this prompt encoder and this mask decoder. So basically you have to be able to encode the prompt in some way for the model to understand. So they have, you know, positional embeddings that represent, for example, the points or the boxes, if that's how you label your image. So you have positional encodings, and, you know, if you have text that you're trying to encode, they have a CLIP text encoder.

End-to-End Segmentation Process

And then if you're doing dense prompts, like a mask, for example if you're labeling the first frame of the video and you want to then segment out the rest of the video, you would have to label a segmentation mask for the first frame, and that would be embedded using convolutions. And that's summed with the image embedding that you get for that particular frame. So they have these embeddings that are associated with the prompt that you gave the model, and that's passed into this decoder, which is also a bunch of transformer blocks. So basically you have cross-attention between the prompt embeddings and the frame embeddings, the embeddings from the image frames that we got from our memory attention. So basically you have the frame embeddings that come from your image encoder.

Mask Decoder Processing Output

They're processed by the memory attention part. Then they are passed into the decoder, where there's cross-attention between those frame embeddings and the prompt embeddings. And then, okay, that basically gives you a segmentation mask for that image. Basically, you have an MLP that is producing an output token for each pixel, or each location in the image. And so that is produced by the mask decoder. So there you get your mask for that specific frame. But now the question is, okay, you have the frame that you're getting the mask for, but now you want that to be part of your memory for the next frames. And so that's where the memory part of it comes in.

Integration of Memory in Model Architecture

So you have a memory encoder and you have a memory bank. The memory encoder basically just takes the output mask and downsamples it with a convolutional module and then sums it element-wise with the frame embedding from the image encoder. And that's where you get your memory, and this goes into your memory bank, which basically stores the N most recent frames. And then the memory embeddings for those frames are passed into the memory attention so that, you know, you can do the cross-attention to the previous frames. So yeah, that was all very complicated, but hopefully you have some sense of the general idea: you have these embeddings for the images, and they're being processed by transformer blocks and cross-attending with the prompts that you have, and that produces a mask.
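The memory encoder and memory bank can be sketched in a few lines. This is a toy version under stated assumptions: average pooling stands in for the learned convolutional downsampling module, the shapes are tiny, and `encode_memory` and `MemoryBank` are hypothetical names rather than anything from the SAM 2 code.

```python
from collections import deque

import numpy as np


def encode_memory(mask, frame_embedding, stride):
    """Downsample the predicted mask and add it to the frame embedding."""
    h, w = frame_embedding.shape
    # Average pooling is a stand-in for the learned convolutional module.
    pooled = mask.reshape(h, stride, w, stride).mean(axis=(1, 3))
    return pooled + frame_embedding


class MemoryBank:
    """Keeps only the N most recent frame memories (first in, first out)."""

    def __init__(self, max_frames):
        self.frames = deque(maxlen=max_frames)

    def add(self, memory):
        self.frames.append(memory)

    def as_list(self):
        return list(self.frames)


bank = MemoryBank(max_frames=3)
for t in range(5):
    mask = np.full((8, 8), float(t))   # dummy mask, value marks the frame index
    embedding = np.zeros((4, 4))       # dummy frame embedding
    bank.add(encode_memory(mask, embedding, stride=2))
```

After five frames the bank in this sketch holds only frames 2, 3, and 4, which is the set the memory attention would cross-attend to for the next frame.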

Evaluating Model Performance

And then you also have this memory component, which is I think the main component that's different from the previous SAM model, because the memory component allows it to deal with videos. So that's kind of the summary of how the model works. And of course the nice thing about this paper is, you know, they take this very data-centric approach to training this model. So actually the model is trained jointly on image data and video data. For the images, they use the same dataset that they used for the original SAM model. It's called SA-1B, so they have like 1 billion masks for that. But then for the videos they have this sort of data engine where, initially, you have the human annotators annotating per frame.

Challenges in Training the Model

Of course it takes a long time. They're using the SAM 1 model to help them label each frame, because the SAM model works for images. So they're using that to help them annotate per image in the video. But that's still going to take a long time, and so they only do this for like 1000 videos. So that's a pretty small dataset that they have there. But then they basically train a small sort of SAM 2 model that only accepts masks as prompts. So this is the first version of their SAM 2 model, and that's trained on their dataset. Basically, you have the mask from your first image, and then you utilize this SAM 2 Mask model, as they call it, to propagate the annotated mask from the first frame to the other frames.

The Data Engine Pipeline Explained

And so that way they get some rough data for the full video, for example. And then they start training their full SAM 2 model, which accepts a variety of prompts. They train the model on the data, and then they apply the model to data and edit the predicted masks if there are any issues. And they actually say that they retrained and updated SAM 2 using the collected annotations five times. So they keep doing this sort of data labeling, utilizing the current version of the model to label the data and adjusting it, and they continue like that. So yeah, they have this whole data engine pipeline that's very sophisticated and allows them to collect a lot of data. So they have their dataset, which they release under a permissive license, by the way, so people can access it and use it.
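The model-in-the-loop labeling cycle described above can be sketched as a small loop. Everything in this example is a toy stand-in: the "model" is a lookup table, the "videos" are strings, and the names `run_data_engine`, `propose`, `correct`, and `train` are hypothetical, not taken from the paper.

```python
def run_data_engine(model, batches, propose, correct, train):
    """Alternate between model-assisted labeling and retraining."""
    dataset = []
    edits_per_round = []
    for batch in batches:
        edits = 0
        for video in batch:
            proposed = propose(model, video)    # current model drafts a label
            final = correct(video, proposed)    # annotator fixes any issues
            edits += int(final != proposed)
            dataset.append((video, final))
        model = train(dataset)                  # retrain on everything so far
        edits_per_round.append(edits)
    return model, edits_per_round


# Toy setup: the correct "mask" for a video is just its upper-cased name.
batches = [["cat", "dog"], ["cat", "bird"], ["dog", "bird"]]
model, edits = run_data_engine(
    model={},
    batches=batches,
    propose=lambda m, video: m.get(video),
    correct=lambda video, proposed: video.upper(),
    train=lambda data: dict(data),
)
```

In this toy run the number of manual corrections drops from round to round, which is the point of reusing the latest model as the labeling assistant.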

Expanding Dataset for Enhanced Performance

Their dataset has 50,000 videos with 642,000 masks. They call them masklets, which are basically sort of spatio-temporal masks. And so yeah, they have that huge dataset. They also have internally available licensed data that they further use to augment their training set, which comprises 62,000 videos and 69.6 thousand masks. So actually it looks like their internal data has more videos, but maybe it's more sparsely labeled and not very densely labeled. So that's an interesting thing to also note. In terms of results, they talk about how their model is generating better segmentation accuracy with three times fewer interactions. So basically, if you are going to try to label a video, you have this interactive segmentation where maybe, okay, you label the first frame, and you see how it is labeling the rest of the frames in the video.

Model Efficiency and Interaction Reduction

Maybe you adjust some of the frames here and there while it's segmenting out the video. But what they're saying is that, compared to some of the other methods, you only need to relabel around three times less. And then of course the other test scenario is basically only prompting the first frame and not actually prompting any of the middle frames or any of the rest of the video. So if you only prompt the first frame, yeah, basically it outperforms baselines on 17 of the datasets. And again, it's outperforming all the previous methods. And so that's for video, but then also for just plain image segmentation, the model achieves higher accuracy than the original SAM 1 model without using any extra data, and it's also six times faster to run.

Improvement Over Previous Models

And they note that this can be mainly attributed to the smaller but more effective Hiera image encoder in SAM 2. So it's because of this hierarchical multiscale image encoder that they are also able to just achieve better performance overall on image segmentation. So, yeah, that's SAM 2. Before I move on to SAM 2 for medicine, does anyone have any questions or comments? I know that's probably a lot to take in. So obviously there are some very important practical implications of SAM 2, like robotics and VR. Do you have anything in mind? Like, you know, this is video, and I don't think there's a lot of video data in medicine. How is it useful? Yeah, I mean, I'll get to that. There's a separate paper about SAM 2 for medicine, so we'll get to that.

Transition to Medical Applications

And I can talk about how it's used in medicine. But yeah, I guess I'm not as familiar with, I mean, robotics. I guess it's more like, when you have these videos of the robot performing its actions, you know, you want to segment out the objects. I guess that's the main use case there. But yeah, I don't know exactly what you're asking. If you're asking about the applications of SAM 2 to medicine, I'll talk about that next. Like, besides medicine. So the paper says, like, important applications in AR, VR, robotics, autonomous vehicles and video editing.

Discussing Potential Applications

Do you think of anything else, like any other applications? I don't know. That's a good question. I mean, you basically have all the same applications for image editing, of course, and image segmentation and all of that for image. And I think video editing. That's a. Yeah, that's a good point. That's going to be a very interesting application just to be able to like, you know, edit out maybe objects from a video or things like this. What other applications? Yeah, I don't know. Nothing. I don't know. Nothing immediately comes to mind as to other potential applications. Anyway, it's nice to, it's fun to think of applications could be useful for some startup ideas.

Anticipating Future Innovations

Yeah, yeah. I'm sure there's probably lots of interesting startup ideas that you could come up with for this. If I recall correctly, I think I saw a tweet somewhere. I think it was like Yasin who said that dingboard was heavily powered by the original SAM model and was very much inspired by when that model was released and things like this. So you could imagine that, yeah, you know, in the future, SAM 2 could also be used to power some interesting products and startups. Yeah, yeah. So instead of using dingboard for creating memes, you can use SAM 2 for creating video memes. Yes, exactly. Exactly. I think that would be a very fun application there.

Concluding Insights

Yeah. I mean, just generally, I think SAM 2 will be very useful for video editing in general. Yeah. Cool. If there are no other questions about SAM 2 and how it works, because, yeah, it's a bit overwhelming in terms of how it works. If you go to the SAM 2 paper, of course, there's plenty of figures that kind of better explain the flow of everything. So figure three, for example, shows the overall architecture. And then there's a very detailed appendix as well that goes over the architecture. So, for example, figure nine talks about the mask decoder architecture. Figure eleven talks about the annotations and how that works.

Importance of Visual References

So, yeah, there's a lot of detail in the paper. So if this is interesting to you guys, you should definitely check it out. And I think it's also a good paper for the data engine stuff as well. I think that's also very interesting, almost like a nice case study on how to do that really well. So, yeah, sorry, this is not really related to your paper per se, but I've seen a few people commenting that, you know, they would really appreciate it if we could share visual information. Unfortunately, X Spaces currently does not support visual information sharing.

Future of Video Streaming and Information Sharing

But, you know, another option is video streaming on X, which, you know, we discussed that before privately, that, you know, that sort of lacks the interactivity of space. But I think the interactive advantage is not that much, I guess. What do you think? Yeah, I think we have to explore this further. And I think, I guess, personally, I think maybe I'm hoping we'll see how it goes. But like, X will like improve some of these aspects on spaces and live streams. And I think some of these ideas in terms of doing screen sharing and video stuff on spaces is kind of on their radar and they're planning to potentially add that soon.

Evaluation of Future Features

So I'm also hoping that these sorts of things get added soon enough. But we will have to eval. Yeah, we can reevaluate if we want to use maybe live streams and things like this at one point. Yeah, we could try live streaming in the next session if that's okay. Yeah, we can think about it. Yeah. Yeah. Yeah. Someone is suggesting video X based and live stream which streams to accent. That was. We tried doing that actually. Yeah, David is saying this. We tried doing that and that didn't work for some reason.

Technical Difficulties Encountered

I forget, we had some technical difficulties getting that to work. So, yeah, we'll have to see what the best way of doing this is. Okay, next paper that I want to talk about. So, you know, speaking about SAM 2, and of course, you know, coming from a medical AI background, I'm obviously very interested in how these models are used in medicine. There was a recent paper that evaluated if SAM 2 is better for medical image and video segmentation.

Evaluating Medical Applications of Sam Two

So basically, people have already explored using the SAM model for medical segmentation, segmenting, for example, X-rays and CT scans and MRIs and, you know, mammograms, microscopy images, and ultrasound, either applying SAM to these out of the box or fine-tuning SAM for these medical tasks. So, for example, they have a MedSAM model, which was fine-tuned on a whole bunch of medical tasks. And so now this paper that I'm talking about here, which I've pinned to the space, is evaluating if SAM 2 out of the box is better than SAM 1 and also better than this fine-tuned MedSAM model.

Benchmarking Across Modalities

So they are benchmarking over eleven different modalities. You know, I mentioned some of them already, like CT, MRI, PET, ultrasound, mammography, light microscopy, OCT, and there are several others as well. And then the way that they are prompting the model to do the segmentation is with a bounding box. So they found in the past that bounding box prompting is more efficient, has fewer ambiguities, and leads to better performance than a point prompt. So, you know, with the SAM models, you can prompt them in different ways.

Choosing the Right Prompt

You can put a point over the object that you want, or you can put a bounding box around the specific object. So those are the different ways of prompting it. And they're saying, at least in this medical domain, the bounding box prompt is typically better. So that's what they use. And so then basically what they've observed is that SAM 2 is better than SAM 1 on many different domains, like MRI and light microscopy, and it has lower scores in some other domains, like PET and OCT. And then in many domains it has comparable performance, like CT, X-ray, ultrasound, mammography, etcetera.
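To make the two prompt types concrete, here is a minimal sketch of how a box prompt and a point prompt could be derived from a ground-truth mask, and how a predicted mask could then be scored. Everything here is an illustrative assumption: the helper names are hypothetical, the speakers do not say how the paper derives its prompts, and Dice is used only because it is a common segmentation metric (the discussion just says "scores").

```python
from typing import List, Tuple

Mask = List[List[int]]  # 2D binary mask, 1 = object pixel


def bounding_box_prompt(mask: Mask) -> Tuple[int, int, int, int]:
    """Tight box (x_min, y_min, x_max, y_max) around the foreground."""
    ys = [y for y, row in enumerate(mask) if any(row)]
    xs = [x for row in mask for x, v in enumerate(row) if v]
    if not ys:
        raise ValueError("mask is empty")
    return min(xs), min(ys), max(xs), max(ys)


def point_prompt(mask: Mask) -> Tuple[int, int]:
    """Single foreground pixel (x, y) closest to the mask centroid."""
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not pts:
        raise ValueError("mask is empty")
    cx = sum(x for x, _ in pts) / len(pts)
    cy = sum(y for _, y in pts) / len(pts)
    return min(pts, key=lambda p: (p[0] - cx) ** 2 + (p[1] - cy) ** 2)


def dice(pred: Mask, target: Mask) -> float:
    """Dice overlap between two binary masks of the same shape."""
    inter = sum(p & t for pr, tr in zip(pred, target) for p, t in zip(pr, tr))
    total = sum(map(sum, pred)) + sum(map(sum, target))
    return 1.0 if total == 0 else 2 * inter / total


truth = [[0, 0, 0, 0],
         [0, 1, 1, 0],
         [0, 1, 0, 0]]
box = bounding_box_prompt(truth)   # would be passed to the model as a box prompt
point = point_prompt(truth)        # would be passed to the model as a point prompt
```

A box pins down the extent of the object, while a single point leaves open whether the whole organ or only a sub-structure is meant, which is one way to read the "fewer ambiguities" remark.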

Performance Comparison

They have comparable performance to SAM 1. These are, of course, the out-of-the-box models. But the other thing to note is the fine-tuned SAM 1 model, which is this MedSAM model, that is, SAM 1 fine-tuned on a whole bunch of medical tasks. That model consistently outperforms both SAM 1 and SAM 2 on most of the modalities. So it seems like, even if you have a better general segmentation model out of the box, the model that was fine-tuned on the medical tasks is performing much better, even though it was fine-tuned from an older model.

Processing and Evaluating Video Data

So that was looking at just images. The other thing is that, of course, SAM 2 has the ability to process videos. And the nice thing is then, okay, you can formulate 3D images as videos. You could say, for example, with a CT scan, the CT slices are frames in a video, and you can process it that way. And so actually, by doing that on CT and MRI images, SAM 2 does perform better. But for PET images, it's performing poorly and it's actually easier.
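The "slices as frames" idea can be sketched as follows. This is a minimal illustration under stated assumptions: the function name and the intensity window are hypothetical, and the speakers do not describe the paper's actual preprocessing. The only point is that a 3D volume becomes an ordered list of 8-bit frames that a video model can consume.

```python
from typing import List

Slice = List[List[int]]  # one 2D slice of raw intensities


def volume_to_frames(volume: List[Slice], lo: int, hi: int) -> List[Slice]:
    """Turn a 3D volume (list of slices) into video-like 8-bit frames.

    Each voxel is clipped to the window [lo, hi] and rescaled to 0..255
    with integer arithmetic. The slice order becomes the frame order.
    """
    if hi <= lo:
        raise ValueError("window must satisfy lo < hi")
    span = hi - lo
    return [
        [[(min(max(v, lo), hi) - lo) * 255 // span for v in row] for row in sl]
        for sl in volume
    ]


# Toy "CT" volume with two 2x2 slices; the window below is an assumed example.
ct = [
    [[-1000, 0], [500, 2000]],
    [[-1000, -1000], [1000, 1000]],
]
frames = volume_to_frames(ct, lo=-1000, hi=1000)
# frames[0] is treated as the first video frame, frames[1] as the second, etc.
```

The per-slice alternative mentioned in the discussion would instead run an image model independently on each element of `frames`, with no information carried between neighbouring slices.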

Insights on PET Image Performance

You get better performance if you just run SAM 1 on each individual slice by itself. Actually, it's interesting: overall, SAM 2 seems to struggle with these PET images, in both the 2D and the 3D setting. So that's another thing. Maybe that's a domain where, again, there's not as much data and the model needs to be trained separately for PET images, something to consider. Okay. And then the other thing is, if the MedSAM model, which is a fine-tuned model, is performing better, then yeah, maybe this sort of fine-tuning is important to do.

Implications for Future Research

So they only did a very preliminary experiment where they fine-tuned one of the smaller variants of SAM 2 on a very specific 3D abdominal organ segmentation dataset. And of course, they observed that the model does perform better if it's fine-tuned. But again, that's a very small, preliminary example. And I think future work would probably have to do more comprehensive fine-tuning on different domains, with larger datasets and a larger model and things like this. But yeah, I think it's a very interesting paper.

Conclusions on Medical Image Segmentation

Basically, the conclusion is that SAM 2 isn't always better than SAM 1, though in many cases it is. You just kind of have to evaluate it on a per-case or per-dataset basis. And then on top of that, fine-tuning on the medical domain is very useful. Even with the old SAM model, the version that was fine-tuned on the medical domain, the MedSAM model, is outperforming everything. So, you know, even though you have these general models, it's still very useful to fine-tune on domains like the medical domain, where the images are very different from general images.

Final Thoughts

Right. Like, you know, they look very different from natural images. So fine-tuning often seems to help for the medical domain. Yeah, so that's that paper. Are there any questions about it? Yeah, thanks for addressing some of my questions earlier. Yeah. Cool. If there are no questions about that, I guess we'll move on. Aran, you were going to cover the Llama paper. Llama 3. You know what? I can cover the Agent Q paper, which is actually probably more new to many of you guys.

Transition to Next Paper

Okay, fair enough. Yesterday.

Agent Q and its Innovations

Okay. Two days ago, I think, actually. Yeah, this one's... So basically they released a state-of-the-art web agent. Let me see. So, okay, the startup MultiOn presents Agent Q, a state-of-the-art autonomous web agent that can plan and self-heal. Self-heal means that sometimes the agent goes down a bad trajectory, and it can recognize that it is heading in the wrong direction and move back to the correct path. So the existing problem is that current methods, such as supervised fine-tuning on curated expert demonstrations, often fall short on agentic multi-step tasks due to compounding errors and limited exploration data. This is also the case even for embodied agents. These approaches yield suboptimal policies, particularly in dynamic environments demanding complex decision making and adaptive learning. So the solution they propose is basically that Agent Q combines guided MCTS and AI self-critique with iterative fine-tuning leveraging DPO.

Methodology of Agent Q

So this method enables LLM agents to learn from both successful and unsuccessful trajectories, which enhances their generalization capabilities in multi-step reasoning tasks. So they propose three main components: guided search with MCTS, AI self-critique, and DPO. So MCTS basically autonomously generates data by exploring different actions and web pages. It balances exploration and exploitation. MCTS expands the action space using high sampling temperatures and diverse prompting, ensuring diverse and optimal trajectory collection. For self-critique, at each step, AI-based self-critique provides valuable feedback, refining the agent's decision-making process. This step-level feedback is crucial for long-horizon tasks, where sparse signals often lead to learning difficulties. So this is very similar to what Charlie was saying at the beginning. So yeah, basically it looks like everyone's going in the same direction. So it sounds like that's the right direction. And also DPO. DPO fine-tunes the model by constructing preference pairs from MCTS-generated data.
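The three pieces described above (search that balances exploration and exploitation, step-level values, and preference pairs for DPO) can be sketched in a few lines. This is a toy illustration under stated assumptions, not Agent Q's implementation: the `Child` class, the UCB1 selection rule, the `margin` threshold for forming pairs, and all example actions are hypothetical. The only standard piece is the DPO loss for a single pair, written in its usual form.

```python
import math
from dataclasses import dataclass
from itertools import combinations
from typing import List, Tuple


@dataclass
class Child:
    action: str    # candidate action at this web page / state
    visits: int    # how often search has tried it
    value: float   # mean estimated success in [0, 1] (e.g. outcome plus critique)


def ucb1(child: Child, parent_visits: int, c: float = 1.0) -> float:
    """Exploitation (value) plus an exploration bonus for rarely tried actions."""
    if child.visits == 0:
        return math.inf
    return child.value + c * math.sqrt(math.log(parent_visits) / child.visits)


def select_action(children: List[Child], c: float = 1.0) -> Child:
    parent_visits = max(1, sum(ch.visits for ch in children))
    return max(children, key=lambda ch: ucb1(ch, parent_visits, c))


def preference_pairs(state: str, children: List[Child],
                     margin: float = 0.2) -> List[Tuple[str, str, str]]:
    """(state, preferred action, rejected action) for visited siblings
    whose estimated values differ by at least `margin` (assumed threshold)."""
    visited = [ch for ch in children if ch.visits > 0]
    pairs = []
    for a, b in combinations(visited, 2):
        if abs(a.value - b.value) >= margin:
            win, lose = (a, b) if a.value > b.value else (b, a)
            pairs.append((state, win.action, lose.action))
    return pairs


def dpo_loss(policy_win: float, policy_lose: float,
             ref_win: float, ref_lose: float, beta: float = 0.1) -> float:
    """Standard DPO loss for one pair, given sequence log-probabilities."""
    h = beta * ((policy_win - ref_win) - (policy_lose - ref_lose))
    return math.log1p(math.exp(-h))  # equals -log(sigmoid(h))


children = [Child("click_date", 10, 0.8), Child("scroll", 10, 0.3),
            Child("open_menu", 0, 0.0)]
next_action = select_action(children)            # untried action gets explored
pairs = preference_pairs("booking_page", children)
```

Note that the rejected branch (`scroll`) still contributes a training signal, which is the sense in which unsuccessful trajectories are not thrown away.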

Results and Achievements

This off-policy training method allows the model to learn effectively from aggregate datasets, including the suboptimal branches explored during search, improving success rates in complex environments. So the results are very impressive. In real-world booking experiments on the OpenTable benchmark, MultiOn's agents drastically improved the zero-shot performance of the Llama 3 model from an 18.6% success rate to 81.7%, which is a significant jump and far better than GPT-4o or any model based on GPT-4o. And after just one day of autonomous data collection, they further improved it to 95% with online search. These results highlight their method's efficiency and ability for autonomous web agent improvement. Let me give you more details. First, the architecture: they used what is called the AgentOhana xLAM model by Salesforce, which was released about four months ago.

Benchmarking and Comparisons

So actually this is a fine-tune of the pretrained Mixtral Instruct on a mix of agentic applications, including WebShop SFT data. As for the results on the WebShop benchmark, in success rate the xLAM model performs worse than GPT-4 by ten points. So xLAM is the baseline here, but after DPO is applied, it already outperforms GPT-4 slightly, and applying beam search on top of DPO doesn't result in any meaningful improvement. But applying MCTS to xLAM outperforms DPO by eight points, which shows how crucial MCTS is. And I think adding DPO on top gets another 2% improvement, which ties with the average human performance of a 50% success rate, while human experts achieve 60%, which is still ahead of their model. Yes, it looks like this benchmark is quite hard even for human experts. On other benchmarks like OpenTable, the benefit of DPO on top of MCTS is more significant, which led to the success rate of 95%.

Exploration of Agentic Settings

So I have a few questions. I believe the same approach works for various other agentic settings, including embodied agents, whether in virtual space or the real world. MCTS in the physical world is not straightforward and is less studied, so this should take more time. Does anyone have any insights on this? Tanish, have you heard about it? So what was the question? Sorry, I just missed that for a moment. What were you saying? Like MCTS for the physical world, like a lot of data? Oh, I'm not sure. Yeah, I feel like I heard something like this, but I don't remember now off the top of my head. But yeah, that's a good question. Anyway, yeah, I haven't seen many people... I think it's like the sort of path planning stuff, for example.

Potential for Future Research

Like, I think MCTS is probably used more in a sort of traditional RL sense. Yeah, maybe not so much. I think maybe it hasn't been explored much in the sense of utilizing these robotic foundation models and then applying MCTS to that, so maybe there's some sort of opportunity there to do some interesting research. But I think, yeah, with MCTS, I may have seen it more in path planning or more standard sort of RL for robotics kind of things. Yeah, I agree. So this model is not open sourced and probably will not be by this startup. But I believe an open-source version of this model by a third party would attract huge attention.

Future Directions and Discussions

So, yeah, I wonder who is going to do it. This is going to be very interesting. Maybe ls AI can do it. Maybe they don't have that many resources, but, yeah, that'd be... What do you think? Never mind. Yeah, I mean, I think it would be interesting if someone had an open source version of this. And I'm sure someone will make one soon enough. I will just keep reaching out to people to see if they're interested or not. Yeah. Cool. So does anyone have any questions about this paper? Doesn't seem like it. Aran, was there anything else you want to add about this paper?

The State of Research and Open Source

Not much, actually. I'm still reading this paper. It's such a new paper, so I still haven't... Yeah, yeah. I think this was released just yesterday, so it's pretty brand new. Yeah, actually, this paper is probably the most interesting paper, like the most important paper. It's kind of hard to say, because obviously the first two talks, Charlie and Kong's papers, are interesting too, but this one is, you know, very impactful in different directions. So I think it's one all people should talk about. Yeah. Okay, I guess I'll just quickly cover this last paper, CogVideoX.

Overview of CogVideoX

I guess we'll probably just skip the Llama paper for now, maybe, because, yeah, it's a lot and we don't have much time. We're already running pretty much longer than usual. Maybe we can cover it next week. Maybe we can talk about it next week. Do you have time? Yes, I'll quickly cover that right now. So let me just pin it to our space and pull up my notes. So, yeah, I mean, I like this paper. CogVideoX is actually a pretty good paper. And basically, yeah, they're training a text-to-video model. And so, yeah, I mean, we haven't seen a whole lot of these sorts of text-to-video models open sourced.

Challenges in Open Source Video Models

And at least the ones that have been open sourced aren't particularly good, I guess I would say. And there's still a lot of work to do in the space, especially in the open source area. And of course a lot of the reason is that it does require a lot of compute to do this sort of work. Videos add a whole other dimension, I guess, so you need much more compute. But anyway, this is a paper from the THUDM group. This is the group that has a lot of these Cog series of models. Like, they have CogVLM, which is one of the best image captioning models.

Advancements in Video Generation Models

They do a lot of interesting work. They have the ChatGLM models. CogView was their text-to-image model. So yeah, they have some really interesting work that they've been doing. So now they're working in the video space. They had, I think, previously trained one of these CogVideo models in the past, but this is a new one that they're doing, CogVideoX. And so the approach here is again a pretty standard approach. I think basically both text-to-image and text-to-video have kind of converged on this approach, which is latent diffusion with a diffusion transformer.

Technical Details of CogVideoX

But in the case of video, they have to train a separate autoencoder to compress the video into latent space. And typically one of the approaches has been compressing each frame of the video. So you just use an image autoencoder and compress each frame. And then you also have your model architecture trying to process this in a temporal manner. And so all of that is very specific to the video domain and kind of maybe is a little bit complicated. The approach that they're taking here is basically that the VAE compresses in a spatio-temporal manner.
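The difference between per-frame and spatio-temporal compression shows up directly in the size of the latent the diffusion transformer has to process. The sketch below is only shape arithmetic under assumed numbers: the function name, the clip size, and the compression factors are illustrative and are not taken from the discussion.

```python
from typing import Tuple


def latent_shape(frames: int, height: int, width: int,
                 t_factor: int, s_factor: int) -> Tuple[int, int, int]:
    """Latent grid (T, H, W) after compressing time by t_factor
    and each spatial side by s_factor. Time is rounded up."""
    if t_factor < 1 or s_factor < 1:
        raise ValueError("compression factors must be >= 1")
    return (-(-frames // t_factor), height // s_factor, width // s_factor)


def num_positions(shape: Tuple[int, int, int]) -> int:
    t, h, w = shape
    return t * h * w


# Assumed example clip: 48 frames at 480x720.
per_frame = latent_shape(48, 480, 720, t_factor=1, s_factor=8)   # image VAE on each frame
spatiotemporal = latent_shape(48, 480, 720, t_factor=4, s_factor=8)  # also compresses time

ratio = num_positions(per_frame) / num_positions(spatiotemporal)
```

With these assumed factors, compressing along time as well leaves a quarter as many latent positions, which is one reason a spatio-temporal VAE is attractive when compute is the bottleneck.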

Model Architecture and Training?